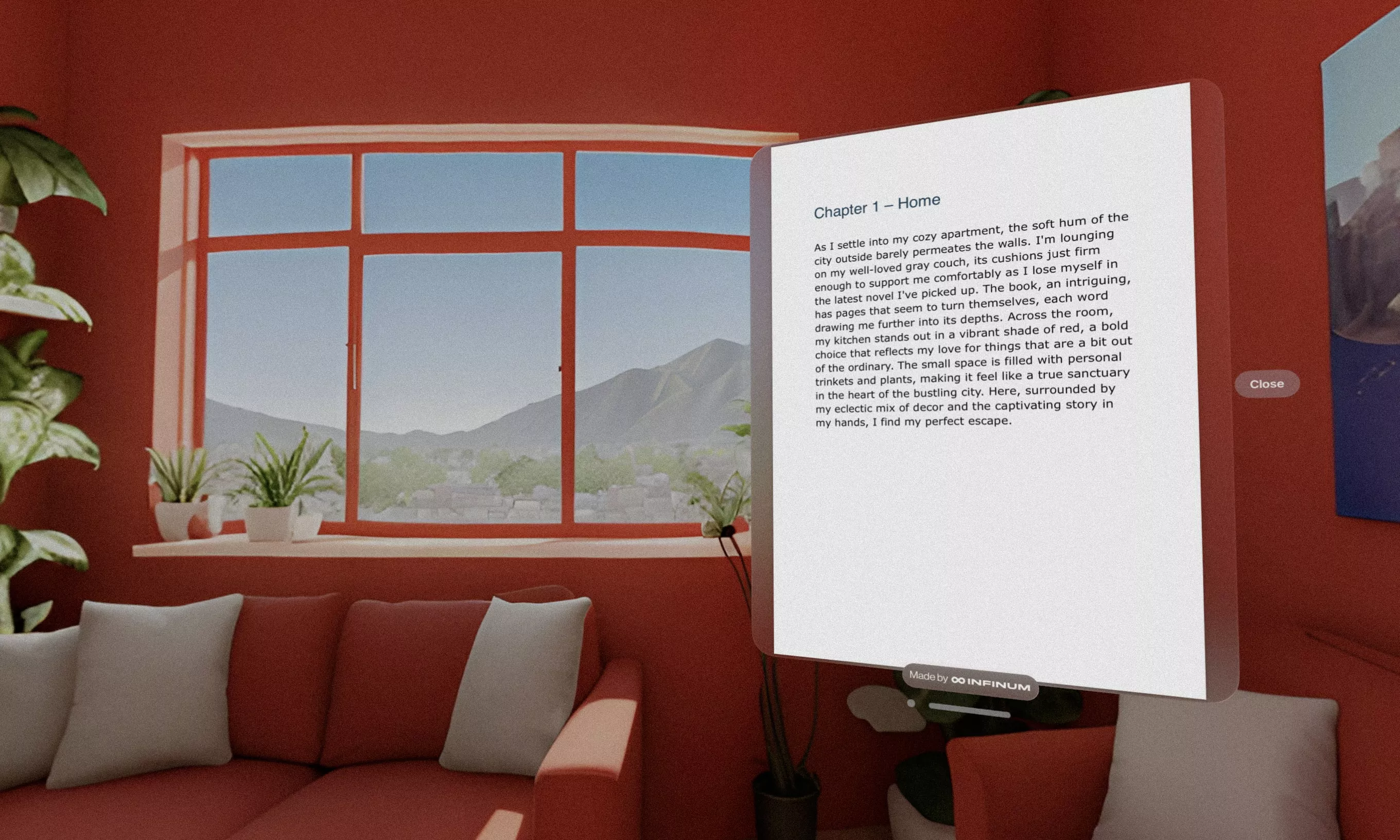

Tempted to test out spatial computing development, we built a proof of concept for the Apple Vision Pro – a reading app where the reader’s environment changes as the pages turn.

When Apple Vision Pro first landed at our office, everyone wanted to try it. You can watch all the reviews you want, but experiencing it firsthand is something completely different.

And as soon as we tried the headset, ideas started pouring into our minds. Hmm, what could we do with this?

Tempted to test out the promise of a spatial computing future, we decided to build an Apple Vision Pro proof of concept – a fully immersive book-reading experience where the reader’s surroundings change as the pages turn.

The extended reality playground opens up possibilities for applications that were unimaginable before. This article shares what we learned during the development process and presents some ideas for how you too can leverage spatial experiences on Apple Vision Pro to boost your digital product portfolio.

Our Apple Vision Pro proof of concept lets the reader enter the story

Anyone who has tried the Vision Pro knows how immersive it feels for the user. Setting out to build our PoC, this is the direction we wanted to explore further.

We also wanted to create something interesting that would provide us with learning opportunities and decided a reading app would be a perfect fit. Apple’s Books app is only available on the Vision Pro as a compatible app, which means that it can run on visionOS, but wasn’t originally built for it.

Just showing any immersive environment would be too easy. To unlock the full potential of the Vision Pro, we decided to up the ante and add AI to the mix.

Our Apple Vision Pro proof of concept utilizes generated images to change the user’s environment in line with the content they are reading. This makes for a truly immersive experience, one in which the reader feels they are a part of the story.

Components used in Vision Pro app development

Coming from day-to-day work on iOS platforms and tools, we found visionOS to be an upgraded and refined experience. The tools in the development environment are familiar, but the possibilities and the ways of thinking about them are very different.

With mobile apps, we only need to think about one window and the content placed there. 3D elements you can interact with are very rare. On the other hand, the spatial OS supports multiple windows, calls for different design principles, and provides more interesting interaction possibilities.

Windows, Volumes, and Spaces

The main components of visionOS are windows (not Windows). Practically every spatial app starts with a window and can include multiple instances of them.

Similar to the windows you use on a Mac or other desktop computer, they can be resized and you can place them wherever you want in your space. The main difference, of course, is the ability to place them at any depth too.

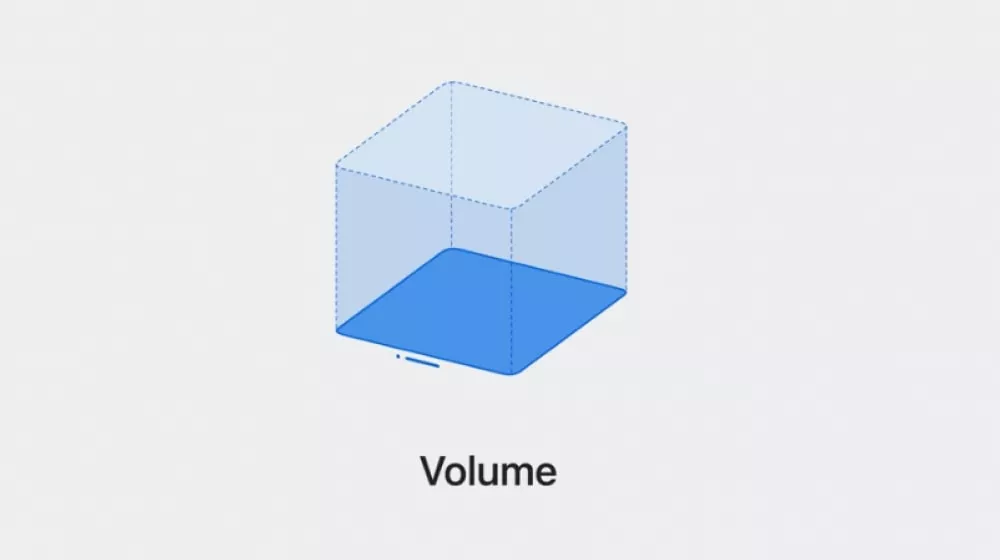

Moving up a level, we get to volumes – containers that allow apps to present 3D content. Similar to windows, they can be repositioned in space and resized as the user wishes.

Volumes are the first step toward elevating your app’s experience into spatial possibilities. With the power of RealityKit, we can populate them with 3D content and manage user interactions and gestures.

For example, an e-commerce store could have items displayed in a list that is contained in a window. The window can include 3D models of these items so that the users get a feeling of depth. But if we want to engage those customers fully, we can present a volume when they tap on a certain item. That would open a separate volume containing that 3D model, allowing the user to place it anywhere in the space.
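The e-commerce example above could be declared roughly as follows. This is a minimal sketch rather than code from our PoC: the app name, the scene identifiers, the view names, and the "Sneaker" asset are all placeholders, and the volume size is an arbitrary choice.

```swift
import SwiftUI
import RealityKit

// Hypothetical store app: all names and identifiers are placeholders.
@main
struct StoreApp: App {
    var body: some Scene {
        // A regular 2D window that hosts the product list.
        WindowGroup(id: "catalog") {
            CatalogView()
        }

        // A volume: a bounded 3D container the user can reposition in space.
        WindowGroup(id: "product-volume") {
            ProductVolumeView()
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.6, height: 0.6, depth: 0.6, in: .meters)
    }
}

struct CatalogView: View {
    @Environment(\.openWindow) private var openWindow

    var body: some View {
        List {
            Button("Sneaker") {
                // Tapping an item opens the volume as a separate scene.
                openWindow(id: "product-volume")
            }
        }
    }
}

struct ProductVolumeView: View {
    var body: some View {
        // Loads a 3D asset named "Sneaker" from the app bundle (assumed to exist).
        Model3D(named: "Sneaker") { model in
            model
                .resizable()
                .aspectRatio(contentMode: .fit)
        } placeholder: {
            ProgressView()
        }
    }
}
```

The window and the volume are both `WindowGroup` scenes; the `.volumetric` window style is what turns the second one into a volume.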

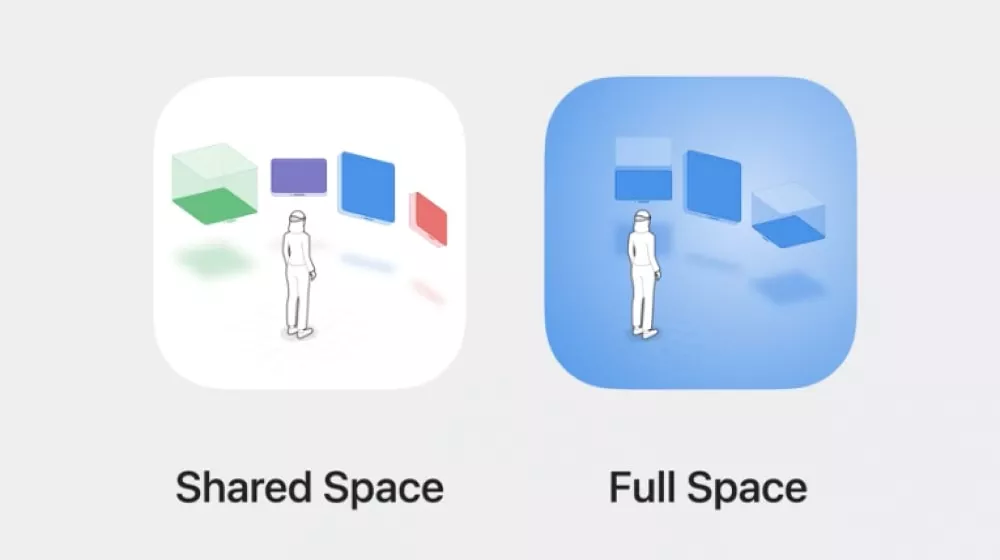

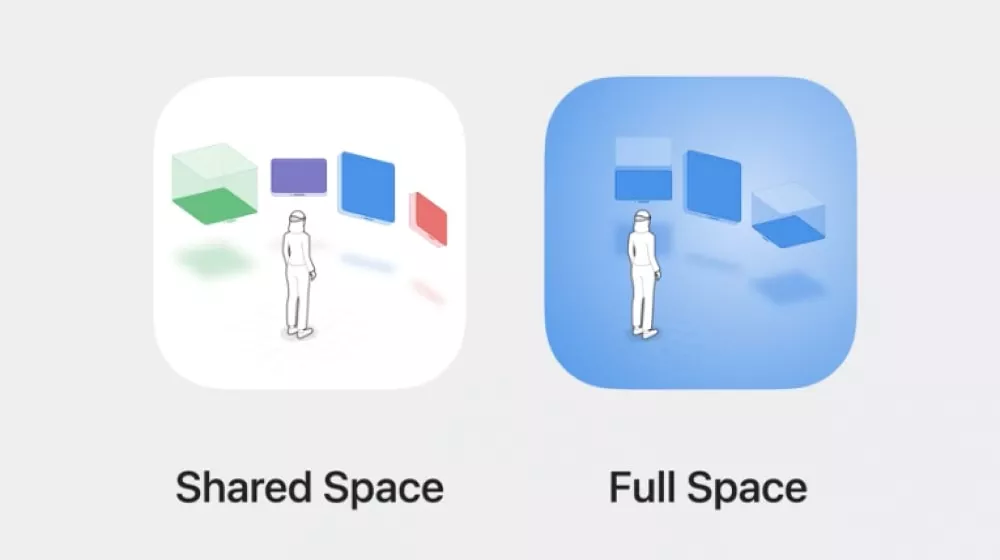

Finally, we have spaces. By default, every Vision Pro app opens in a shared space. Think of it as a space that is shared between apps, just like with a desktop experience.

If we want to fully immerse a user in a separate space where they won’t be able to interact with other apps, we can use a full space. We can still adjust the level of immersion, give the user control over it, or fully immerse them in a scene. Using a full space also allows us developers to use RealityKit and ARKit in conjunction, which unlocks the full potential of spatial computing.
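As a rough illustration of how an app moves from the shared space into a full space, the sketch below declares an `ImmersiveSpace` next to a regular window and lets the user toggle it. The app name, the "environment" identifier, and the `ReaderControls` view are assumptions made for this example.

```swift
import SwiftUI
import RealityKit

// Hypothetical reader app: names and identifiers are placeholders.
@main
struct ReaderApp: App {
    // The currently selected level of immersion.
    @State private var immersionStyle: ImmersionStyle = .full

    var body: some Scene {
        // Opens in the shared space by default.
        WindowGroup {
            ReaderControls()
        }

        // Opening this scene moves the app into a full space.
        ImmersiveSpace(id: "environment") {
            RealityView { content in
                // 3D content for the surrounding scene would be added here.
            }
        }
        // Declares which immersion levels the space supports.
        .immersionStyle(selection: $immersionStyle, in: .mixed, .progressive, .full)
    }
}

struct ReaderControls: View {
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace
    @Environment(\.dismissImmersiveSpace) private var dismissImmersiveSpace
    @State private var isImmersed = false

    var body: some View {
        Toggle("Immersive reading", isOn: $isImmersed)
            .onChange(of: isImmersed) { _, newValue in
                Task {
                    if newValue {
                        await openImmersiveSpace(id: "environment")
                    } else {
                        await dismissImmersiveSpace()
                    }
                }
            }
    }
}
```

With the `.progressive` style selected, the user can adjust how much of their surroundings is replaced by turning the Digital Crown.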

Full spaces for the full spatial experience

A full space is not just a place to put apps; it offers a wide range of opportunities for enhancing the spatial experience. This is enabled by the new RealityView but also ARKit, which has been available since iOS 11 but now has some visionOS-exclusive APIs for building new experiences.

This is not the first time that Apple has built some of the key components earlier and then blended them together in a new product. It’s a smart tactic because when the new product is launched, the technologies are already mature enough to use and have wide capabilities ready to be applied.

ARKit in visionOS offers a set of sensing capabilities. These include:

Plane detection

We can detect surfaces in a person’s surroundings and use them to anchor content.

World tracking

Allows us to determine the position and orientation of the Vision Pro in relation to its surroundings and use real-world anchors to place digital content.

Hand tracking

Using a person’s hand and finger positions as input for custom gestures and interactivity.

Scene reconstruction

We can build a mesh of the person’s physical surroundings and incorporate it into immersive spaces to support interactions.

Image tracking

Looking for known images in the person’s surroundings and using them as anchor points for custom content.

As we can see, when an app utilizes the full space, it opens many possibilities. Of course, all the APIs account for privacy, and user permission is needed to get some of this data.
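To give a feel for how these capabilities are consumed, here is a minimal sketch that uses plane detection as the example. The `PlaneTracker` class is a hypothetical wrapper written for this article; it assumes the app is running on a device inside a full space and that the Info.plist contains an `NSWorldSensingUsageDescription` entry.

```swift
import ARKit

/// Hypothetical helper that logs horizontal planes as ARKit detects them.
final class PlaneTracker {
    private let session = ARKitSession()
    private let planes = PlaneDetectionProvider(alignments: [.horizontal])

    func start() async {
        // Data providers are not available everywhere (for example, in the simulator).
        guard PlaneDetectionProvider.isSupported else {
            print("Plane detection is not supported in this environment.")
            return
        }

        // World sensing data requires explicit user permission.
        let status = await session.requestAuthorization(for: [.worldSensing])
        guard status[.worldSensing] == .allowed else {
            print("World sensing was not authorized.")
            return
        }

        do {
            try await session.run([planes])
        } catch {
            print("Failed to start the ARKit session: \(error)")
            return
        }

        // Anchor updates arrive as an asynchronous sequence.
        for await update in planes.anchorUpdates {
            switch update.event {
            case .added, .updated:
                print("Plane \(update.anchor.id): \(update.anchor.classification)")
            case .removed:
                print("Plane \(update.anchor.id) removed")
            }
        }
    }
}
```

The other capabilities follow the same pattern: create the matching data provider, run it on an `ARKitSession`, and iterate over its updates.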

Immersive audio

For an immersive experience, we also need immersive audio. Apple put a lot of thought into this, and again we can notice how they utilized some of the “old” technologies. For example, Spatial Audio was originally built for AirPods.

There are three types of audio to be used in the immersive space. By default, RealityKit sounds are spatial, ensuring that audio sources authentically exist within the user’s surroundings.

Spatial Audio

Spatial Audio enhances realism by allowing customization. We can emit sounds in all directions or project them in specific ways, adding an artistic touch to the auditory experience. This is particularly useful for seamlessly integrating audio with visual elements to enhance the overall immersion. The concept will be familiar to anyone who has used AirPods with spatial audio enabled.

Ambient Audio

Ambient Audio is tailored for multichannel files that capture the essence of an environment. Each channel is played from a fixed direction, preserving the integrity of the recorded environment. No additional reverb is added, which maintains a sound’s authenticity.

This type of audio is well-suited for scenarios where we want to achieve a complex auditory experience, such as recreating natural surroundings or intricate soundscapes.

Channel Audio

Channel audio takes a different approach by excluding spatial effects and sending audio files directly to the speakers. This makes it an ideal choice for background music not connected to any visual elements. Whether added through Reality Composer Pro or code, Channel Audio gives us flexibility in integrating and controlling audio within the immersive experience.
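In code, the three audio types map to three RealityKit components. The sketch below is an illustration under assumptions: `AudioKind` and `attachLoopingSound` are names invented for this example, the beam focus value is arbitrary, and the audio file is assumed to be bundled with the app.

```swift
import RealityKit

/// Hypothetical enum naming the three audio types described above.
enum AudioKind {
    case spatial, ambient, channel
}

/// Configures `entity` for the given audio type and plays a looping file on it.
/// `fileName` must refer to an audio file in the app bundle (an assumption of this sketch).
@MainActor
func attachLoopingSound(named fileName: String, as kind: AudioKind, to entity: Entity) throws {
    switch kind {
    case .spatial:
        // Projects sound from the entity's position in a fairly tight beam.
        entity.spatialAudio = SpatialAudioComponent(directivity: .beam(focus: 0.75))
    case .ambient:
        // Plays each channel from a fixed direction, with no added reverb.
        entity.ambientAudio = AmbientAudioComponent()
    case .channel:
        // Sends the file's channels straight to the speakers, with no spatial effects.
        entity.channelAudio = ChannelAudioComponent()
    }

    let resource = try AudioFileResource.load(
        named: fileName,
        configuration: .init(shouldLoop: true)
    )
    entity.playAudio(resource)
}
```

If no audio component is set, RealityKit treats the sound as spatial, which matches the default behavior mentioned earlier.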

Our Apple Vision Pro proof of concept

Components for great immersion

To make our PoC app immersive, we needed to use the full space rather than the default shared space. It is the only way to create an environment around the user and fully immerse them in the experience.

Further, we used a window to show the book. The user can resize it however they want and position it anywhere in space. The only thing they cannot do in full space is use other apps in the environment, which is perfect for our use case – distraction-free reading.

Finally, to create that virtual environment around the user, we used a big sphere where we could add the material we wanted. In this case, it is a 360-degree image that would place a user into a scene. However, we wanted these images to match the content our user was reading, which required us to employ some generative AI power.
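The sphere technique can be sketched in a few lines of RealityKit. This is not our production code: the function name is made up for illustration, and the image is assumed to be bundled with the app, whereas generated images would more likely be downloaded at runtime.

```swift
import RealityKit

/// Builds a large inward-facing sphere textured with a 360-degree image.
/// `name` is assumed to refer to an image in the app bundle.
@MainActor
func makeEnvironmentSphere(imageNamed name: String) async throws -> Entity {
    let texture = try await TextureResource(named: name)

    // An unlit material shows the image as-is, unaffected by scene lighting.
    var material = UnlitMaterial()
    material.color = .init(texture: .init(texture))

    let sphere = Entity()
    sphere.components.set(
        ModelComponent(mesh: .generateSphere(radius: 1_000), materials: [material])
    )

    // Mirror the sphere along the x-axis so the texture is visible from the inside.
    sphere.scale *= SIMD3<Float>(-1, 1, 1)
    return sphere
}
```

The returned entity can be added to the content of a `RealityView` inside the immersive space. Swapping the material's texture is then enough to change the scene around the reader.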

Powered by AI

The final piece of the puzzle for creating the engaging experience we envisioned was today’s trendiest technology – artificial intelligence.

To get 360-degree images that are always aligned with the content on the page, our backend team utilized an image generator. In the first step, OpenAI’s ChatGPT processes the book’s PDF, analyzing the text to detect where scene changes occur. Once this is determined, ChatGPT generates prompts tailored to each scene transition. These prompts are then used to generate images.
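On the app side, the result of this pipeline can be treated as a list of scenes, each with the page where it starts. The sketch below shows one possible way to pick the environment for the current page. The `BookScene` type, the function name, and the sample prompts are hypothetical and do not describe our backend's actual data model.

```swift
import Foundation

/// One detected scene: the page where it starts and the prompt generated for it.
struct BookScene {
    let startPage: Int
    let imagePrompt: String
}

/// Returns the scene that is active on `page`: the last scene that starts
/// at or before that page. Assumes `scenes` is sorted by `startPage`, ascending.
func activeScene(forPage page: Int, in scenes: [BookScene]) -> BookScene? {
    scenes.last(where: { $0.startPage <= page })
}

// Sample data for illustration only.
let scenes = [
    BookScene(startPage: 1, imagePrompt: "360-degree panorama of a foggy harbor at dawn"),
    BookScene(startPage: 14, imagePrompt: "360-degree panorama of a candlelit library"),
]
```

Each time the reader turns a page, the app can look up the active scene and change the environment only when the scene differs from the previous one.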

Further enhancements

With this proof of concept, we’ve explored the possibilities provided by spatial computing and successfully integrated AI into our creation. However, there’s always room for improvement, and in future iterations, we could elevate our reading experience even more.

For example, we could add ambient sound, which would also be generated with the help of AI. Just like the images, we could base those sounds on the book’s content. We could also support more book formats by building a full-scale epub reader, which would give us even more customization opportunities.

The key to building great apps for visionOS is to use the specifics of the platform to your advantage, and an AI-driven experience on the Vision Pro might be the future we all eagerly await.

A spatial sea of opportunities in Vision Pro app development

As our PoC proves, visionOS opens up new directions for application development and supports the creation of all-new experiences.

One of the upsides of Apple’s ecosystem is the seamless compatibility across platforms. Therefore, if you want to venture into spatial computing, you can build a fully immersive experience from scratch or adapt and expand an existing iOS app.

Elevate current apps

If you have an existing product, you can still benefit from jumping onto the visionOS platform. Current apps can be added to visionOS in compatibility mode and run just like they do on an iPhone or iPad. Some Apple apps like Maps, Books, and others do just that.

You can also build full-scale visionOS apps that embrace the platform’s look and feel in a window experience like on a Mac. However, to fully unlock visionOS’s possibilities, you need to be thinking in spatial terms.

With the power of RealityView and ARKit, you can bring interactable 3D objects into play. Scene reconstruction allows you to anchor and place these objects anywhere in the physical space, and hand-tracking abilities allow you to create even more immersive and interesting interactions.
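As a small taste of an interactable 3D object, the sketch below places a box in a `RealityView` and lets the user drag it with the standard look-and-pinch gesture. The view name, box size, and color are arbitrary choices for this example. It uses the system drag gesture rather than custom ARKit hand tracking.

```swift
import SwiftUI
import RealityKit

/// Hypothetical view showing a single box that can be dragged around.
struct DraggableBoxView: View {
    var body: some View {
        RealityView { content in
            let box = ModelEntity(
                mesh: .generateBox(size: 0.2),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            // An entity needs collision shapes and an input target to receive gestures.
            box.generateCollisionShapes(recursive: false)
            box.components.set(InputTargetComponent())
            content.add(box)
        }
        .gesture(
            DragGesture()
                .targetedToAnyEntity()
                .onChanged { value in
                    // Convert the gesture location into the coordinate space of the entity's parent.
                    guard let parent = value.entity.parent else { return }
                    value.entity.position = value.convert(
                        value.location3D,
                        from: .local,
                        to: parent
                    )
                }
        )
    }
}
```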

Make the most of spatial computing

Once we stop thinking within the constraints of our existing apps, the components available in visionOS allow us to build experiences that were hard to even imagine before. The Vision Pro is still in its early days, but when we consider its potential, we can come up with a number of possible innovative applications.

A responsive training environment

Training simulations for medical professionals or assembly technicians, where individuals can practice real-world scenarios in a close-to-real environment.

360-view immersions

Imagine virtual tours of exotic destinations, historical landmarks, or breathtaking landscapes. Users could get an immersive preview to plan their next adventure.

CAD prototyping & analysis

Engineers and designers could use Vision Pro to create, visualize, and analyze complex prototypes in a 3D environment, accelerating the product development cycle.

Interactive fitness

Previewing outdoor activities like hiking or running in augmented reality, allowing users to familiarize themselves with routes and terrain before starting their journey.

Virtual home/workspace design

Users could visualize and customize their living or working spaces in real time and experiment with furniture placement, decor options, and architectural modifications.

Concert experience enhancement

Bringing the electrifying atmosphere of live concerts to users’ homes with immersive visuals and spatial audio, similar to the experiences found in venues like the Sphere in Las Vegas.

Visual search & shopping

Visual search capabilities powered by extended reality can revolutionize how users discover and purchase products. They could explore them as 3D objects and see how they integrate into their physical environment.

Start a new chapter with Vision Pro app development

Developing our Apple Vision Pro proof of concept allowed us to explore the many opportunities offered by Apple’s new device. While this is only the product’s first iteration, it shows a path to future experiences.

Disruptive technologies with this type of power don’t come along very often and can be a great opportunity for early adopters. visionOS allowed us to create something we could have only dreamed of a couple of years back – an experience elevated by new, spatial ways of interaction.

The Vision Pro holds a promise of amazing future experiences just waiting to be built. If you already have a vision for one of them, we can help you shape it up and make it a reality. And if you are interested in using AI in any type of digital product, check out our dedicated page for agentic AI development.