Internet of Things (IoT) solutions have been springing up like mushrooms after the rain as companies around the world race to bring more products to market as soon as possible. We’ve been working on one of those solutions too: the Philips MasterConnect system, built in collaboration with Signify.

Supporting Signify’s smart lighting system from the software side, we’ve developed mobile applications for Android and iOS and performed quality assurance for both.

Testing IoT apps can bring a specific set of challenges. Additionally, since Signify’s apps use Bluetooth as a core functionality, there are many devices the applications need to interact with and a number of different use cases.

This large-scale IoT project brought us face-to-face with many challenges. Here’s an account of how we approached and overcame them.

Getting to know the system

When working on any IoT project, especially a complex one like the Philips MasterConnect system, the first challenge is getting to know it. To test something thoroughly, we first need to have a deep understanding of how it’s supposed to work.

In our case, the system consists of various types of lights (usually packed into in-luminaire nodes so that the smart sensor doesn’t show), wireless Zigbee Green Power (ZGP) switches, and ZGP sensors. To set up a network, the MasterConnect app:

opens a Bluetooth Low Energy (BLE) connection to the first light

creates and exchanges BLE security credentials

sends a complex set of messages to configure the light and adds (commissions) it to a Zigbee group

The process is then repeated for each additional light added to the system. Once complete, the user can configure light behavior according to their specific needs.
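To make the sequence above easier to reason about as a tester, here is a minimal sketch of the flow in Python. It does not use a real BLE or Zigbee library: `FakeLight`, `FakeBleSession`, and `commission` are hypothetical names invented for this illustration, and the session only records the order of steps so that a test can assert on it.

```python
from dataclasses import dataclass, field


@dataclass
class FakeLight:
    """Hypothetical stand-in for a light; records the steps applied to it."""
    name: str
    steps: list = field(default_factory=list)


class FakeBleSession:
    """Hypothetical BLE session that only logs what the app would do."""

    def __init__(self, light: FakeLight):
        self.light = light

    def __enter__(self):
        self.light.steps.append("connect")
        return self

    def __exit__(self, exc_type, exc, tb):
        self.light.steps.append("disconnect")
        return False  # do not swallow errors raised inside the session

    def exchange_credentials(self):
        self.light.steps.append("credentials")

    def configure(self):
        self.light.steps.append("configure")

    def join_group(self, group_id: int):
        self.light.steps.append(f"join:{group_id}")


def commission(lights, group_id):
    """Run the same sequence for every light, one after another."""
    commissioned = []
    for light in lights:
        with FakeBleSession(light) as session:
            session.exchange_credentials()
            session.configure()
            session.join_group(group_id)
        commissioned.append(light.name)
    return commissioned
```

Writing the flow down like this, even against fakes, gives testers a concrete list of steps whose order and failure modes can be checked one by one.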

Users can also expand the network’s complexity by adding ZGP switches and daylight or occupancy sensors. To do that, the MasterConnect app:

opens a ZGP network

instructs the user how to manually interact with the switches and sensors to add them to the network

detects new ZGP devices and sends a complex set of messages to bind them to the rest of the network

closes the ZGP network and informs the user about the result

By doing this, the user can have additional manual control of the lights or add another layer of smart automatic control.
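One detail of the switch and sensor flow worth testing is that the ZGP network gets closed again whatever happens in between. The sketch below illustrates that idea with a hypothetical `FakeZgpNetwork`; the class, its methods, and the "faulty" device naming are assumptions made for this example, not the app's real API.

```python
class FakeZgpNetwork:
    """Hypothetical ZGP network; all names here are illustrative."""

    def __init__(self, discoverable):
        self.discoverable = list(discoverable)  # devices the user will trigger
        self.is_open = False
        self.bound = []

    def open(self):
        self.is_open = True

    def close(self):
        self.is_open = False

    def detect_new_devices(self):
        if not self.is_open:
            raise RuntimeError("network is closed")
        return list(self.discoverable)

    def bind(self, device):
        # Simulate a binding failure for any device named "faulty...".
        if device.startswith("faulty"):
            raise ValueError(f"binding failed for {device}")
        self.bound.append(device)


def add_zgp_devices(network):
    """Open the network, bind what is detected, and always close it again."""
    network.open()
    try:
        for device in network.detect_new_devices():
            network.bind(device)
        return {"ok": True, "bound": list(network.bound)}
    except ValueError as error:
        return {"ok": False, "bound": list(network.bound), "error": str(error)}
    finally:
        network.close()  # runs on success and on failure
```

The returned dictionary stands in for the result the user is informed about, and the `finally` clause is what a negative test case would target.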

As you may have noticed, the system has several complex layers that we testers need to know and understand. We are expected to grasp how Zigbee works and navigate the multitude of different supported products and their respective firmware capabilities.

Knowing how a mobile phone commissions one type of light is not enough – we need to know how it commissions every possible type of light.

Meanwhile, the user is completely unaware of the complexity of the process as it all happens under the hood.

If we want to handle this successfully, the best approach is to break it down to basics and focus on understanding each part of the system. For this case, we prepared an in-depth 100-page-long Project Handbook with all the nitty-gritty details we had to know. It added quite some time to the onboarding process but produced a better outcome in the end. We were able to detect possible problems early on, as early as the architecture phase.

It’s precisely for these reasons that we have testers involved in each architecture-defining phase, both for mobile app implementation and on a system level. So, the lesson here is to get down to the very basics of system components and get involved in the development process as early as possible.

Diversifying the testing setup

Testing mobile apps that use BLE as a core functionality means you have to take into account two types of hardware:

The user’s mobile phone the app will be working on

The devices the app will connect to

For mobile phones, this includes both models and OS versions. You should also understand that some devices may look the same on paper, but their reliability might differ substantially in practice.

In our case, this was especially true for Android devices. We discovered early on that most Huawei mid-range or low-budget models can be very unreliable when establishing and maintaining a BLE connection. Compared to similar Samsung or Xiaomi models, Huawei devices consistently required us to either restart their Bluetooth module (by disabling and re-enabling it in the phone’s settings) or reboot the entire phone. That’s also the reason why those devices are no longer supported.

However, you have to be careful to avoid the trap of testing only on top-of-the-line high-end models like the Samsung Ultra edition.

The key is to diversify your testing setup as much as possible and keep easily accessible notes about the devices’ performance with recommendations for adjusting the app behavior accordingly.
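Such notes do not need special tooling. As one possible shape, the sketch below keeps a record per device and flags the ones whose BLE failure rate crosses a threshold. The `DeviceNote` fields, the 20% threshold, and the function name are assumptions made for illustration, and any values you feed it would come from your own test runs.

```python
from dataclasses import dataclass


@dataclass
class DeviceNote:
    """One entry in a hypothetical device-performance log."""
    model: str
    os_version: str
    ble_attempts: int
    ble_failures: int
    recommendation: str = ""

    @property
    def failure_rate(self) -> float:
        # Avoid dividing by zero for devices that have not been tested yet.
        if self.ble_attempts == 0:
            return 0.0
        return self.ble_failures / self.ble_attempts


def flag_unreliable(notes, threshold=0.2):
    """Return models whose BLE failure rate exceeds the threshold, worst first."""
    flagged = [note for note in notes if note.failure_rate > threshold]
    flagged.sort(key=lambda note: note.failure_rate, reverse=True)
    return [note.model for note in flagged]
```

A list like this makes it easier to argue for adjusting app behavior, or for dropping support, based on recorded results rather than impressions.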

Another notable example is the BLE over-the-air (OTA) upgrade. We conducted iOS testing on an iPhone Pro, where the upgrade seemed fast and reliable. However, when we re-tested on an older model like the iPhone SE, the upgrade process broke down. To address this, we adjusted the implementation to work seamlessly and without interruption on any supported iPhone, whether it’s the latest Pro Max edition or your trusted old iPhone X.

Hardware management issues

As mentioned before, knowing the system means you have to understand the expected use cases, which devices the mobile app is expected to connect to, and what those devices’ capabilities are.

The MasterConnect solution brought to market several different types of lights, ZGP switches, and sensors, all of which rely on the Zigbee mesh network for communicating with each other. However, different devices have different capabilities, and they all need to work together to bring the expected system performance to the user. There were several subsets of challenges to overcome here:

Mocking the hardware for testing during early development.

The same mock units can also be used for automated product-level test cases later on. This way, you can start automating repetitive tests as soon as possible, and you’ll also need less real hardware to start testing.

Connecting and taking care of real hardware samples.

Since the MasterConnect app supports a network of up to 120 devices, it’s quite challenging to provide enough relevant hardware samples both for developers and testers.

This was especially true during hardcore COVID lockdowns when everyone was working remotely and had to explain to the rest of their household why the apartment was bright as the sun. But jokes aside, we tackled this challenge together with Signify by preparing small-scale setups for remote work and dedicating enough office space for a larger-scale setup.

Defining where product-level testing stops and system-level testing begins.

As these two depend heavily on each other, the border between system- and product-level testing is often blurry. It means you have to be very strict when defining who tests what. Working together with Signify, we agreed to test only mobile app behavior but also to help them with system-level testing questions they might have ad hoc.

Debugging a complex issue reproducible only on large projects.

This requires flexibility and quick alignment with other teams. Sometimes, a field trip to Eindhoven and testing on large-scale environments proved the best solution and a great opportunity to get to know the system in more detail. As a bonus, we got to meet the people we were working with face to face.

Shift left and usability testing

Another challenge I would like to address is the so-called shift-left approach. In a nutshell, it’s about starting testing as early as possible, and in our case, we started in the architectural phase, as mentioned before.

At Infinum, we strive to start testing as early as possible on every project, and we’ve carefully compiled our knowledge and experience in the Quality Assurance Handbook.

We need a high-level understanding of feature requirements since we often work closely with the design team. Sometimes, we accompany them for usability testing or review the data obtained there in collaboration. In many cases, this allows us to be the link between the architects designing the feature and the UI/UX designers who are working on preparing the UI proposal.

Usability testing data allows us to put ourselves in users’ shoes, while testing Figma prototypes helps the entire team address potential issues or misunderstandings before they even arise. What this means in practice is that we don’t simply implement features on request but rather challenge certain aspects and provide constructive arguments about why something needs to change.

Defining the strategy for IoT app testing

Treading the fine line between maximum quality and delivering a feature to market quickly is no easy task. However, by getting involved in a project early on, we gain the knowledge and experience that helps us tread that line more easily.

That being said, proposing a coherent and workable strategy for a project involving multiple software testers and test automation engineers can be challenging.

Since the entire team works in an Agile setup using the SAFe methodology, we usually prepare a high-level testing strategy every three months. First, we define the features we will be working on and the number of releases we’ll have. The testing strategy is then broken down into smaller chunks using Xray, a test management tool for Jira, where we prepare our test plan and multiple test executions.

Working with Bluetooth – automation testing

The sheer complexity of a large-scale IoT project often results in a large number of complex test cases. This, in turn, results in regression testing (and the whole release process) taking longer and longer with each new functionality added.

Since the modern market is very dynamic, you always want to reduce the time needed to release a new functionality while ensuring that the product quality remains consistent or increases.

A possible route for achieving this is test automation, and one of the biggest challenges of working on a project involving Bluetooth is figuring out how and which test cases to automate.

Utilizing a mock environment

Let’s start with how. Since we are doing product-level testing, we have to make sure the mobile app is working according to the system and product architects’ requirements.

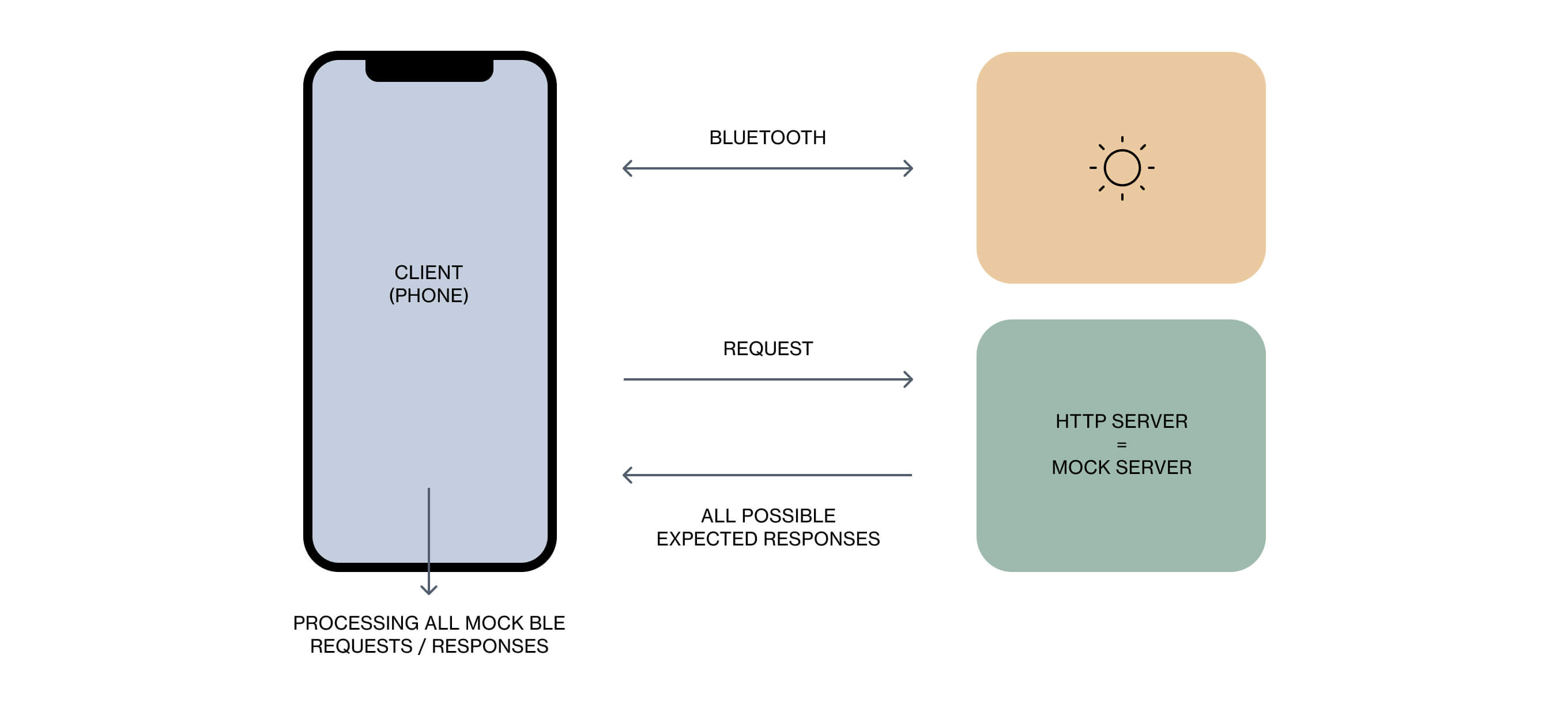

After careful consideration, we concluded that the best way to go about this was to create a mock environment (server). There, we would intercept the Bluetooth traffic before it reached the actual Bluetooth layer on the mobile phone and return the smart devices’ expected responses. This way, the developers could write integration tests while we wrote end-to-end (E2E) tests that mimicked the actual hardware, which also protected the real devices from possible unexpected behavior (negative scenarios were tested separately).

To achieve this, we had to work closely with the developers to make sure all the features were supported on the mock server. The test cases were first executed manually on real hardware to ensure the implementation was correct. Once confirmed, the test case was automated, so there was no need to execute it manually during the next regression test cycle. This way, we reduced the time needed to complete regression testing and shortened the release cycle.
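The interception idea can be illustrated with a small sketch. It assumes the app talks to Bluetooth through a narrow transport interface, so that a mock can be swapped in for the real radio. Everything named here (`BleTransport`, `MockBleTransport`, `set_light_level`, and the one-byte opcodes) is hypothetical and invented for the example; the real mock server and message format are not described in this article.

```python
from typing import Protocol


class BleTransport(Protocol):
    """The only thing the app-side code knows about Bluetooth."""

    def send(self, payload: bytes) -> bytes: ...


class MockBleTransport:
    """Answers with canned responses instead of touching the radio."""

    def __init__(self, responses: dict[bytes, bytes]):
        self.responses = responses
        self.sent: list[bytes] = []  # everything the app tried to send

    def send(self, payload: bytes) -> bytes:
        self.sent.append(payload)
        if payload not in self.responses:
            raise KeyError(f"no canned response for {payload!r}")
        return self.responses[payload]


# Hypothetical opcodes, invented for this sketch.
SET_LEVEL = b"\x01"
ACK = b"\x00"


def set_light_level(transport: BleTransport, level: int) -> bool:
    """App-side code under test; it depends only on the transport interface."""
    if not 0 <= level <= 100:
        raise ValueError("level must be between 0 and 100")
    return transport.send(SET_LEVEL + bytes([level])) == ACK
```

Because the mock records every payload, a test can check both what the app sent and how it reacted to the response. A message the mock does not know yet fails loudly, which is a useful signal that the mock needs to catch up with a new feature.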

Prioritizing test cases

When choosing which test cases to automate, we had to rely on the mock server’s current maturity. We started with UI-related test cases as they proved to be easier to automate, which allowed us to tackle more complex BLE-related scenarios and research in the manual part of testing.

As the mock server matured, we started automating increasingly complex BLE-related test cases so we could start testing new features instead of spending too much time on regression testing.

However, those tests rely solely on the mock server’s capabilities, so we had to find a delicate balance between enabling support for existing features and developing new ones. To help us determine which test cases to focus on, we took the data from previous regression test runs and identified the most critical ones. Those critical test cases were prioritized and automated first while we dealt with the rest as time allowed.
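How "critical" is defined will differ from project to project. As one possible interpretation, the sketch below ranks test cases by how often they failed in past regression runs and breaks ties by how long they take to execute manually. The scoring rule, the data layout, and the function name are assumptions for illustration only.

```python
from collections import Counter


def rank_for_automation(runs, minutes_per_case):
    """Rank test cases by past failures, then by manual execution time.

    runs: list of dicts mapping test case ID -> True (passed) / False (failed)
    minutes_per_case: dict mapping test case ID -> manual execution minutes
    """
    failures = Counter()
    for run in runs:
        for case_id, passed in run.items():
            if not passed:
                failures[case_id] += 1

    # Most failures first, then the longest manual runs, then ID for stability.
    return sorted(
        minutes_per_case,
        key=lambda case_id: (-failures[case_id], -minutes_per_case[case_id], case_id),
    )
```

The top of the resulting list is a candidate automation backlog, to be checked against what the mock server can already support.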

When most of our critical test case backlog was automated, we shifted our focus to automating test cases that covered new features. This way, we ensured the end product was sufficiently covered with both manual and automated test cases.

Lighting the way for future testers

Working on an IoT project brings a special set of challenges and complexities. It is important to take the time to familiarize yourself with the intricacies of such a project and the needs of the end user. This requires close collaboration with development and design team members.

When testing on different mobile devices, you should not rely solely on the advertised hardware and software capabilities but instead test on multiple devices while noting their performance. Use that knowledge to devise a proper testing strategy. Revisit it often and adapt it when needed. Try to automate what you can so you can free up time to focus on more complex challenges.

Or, in the words of experts:

A project is like a road trip. Some projects are simple and routine, like driving to the store in broad daylight. But most projects worth doing are more like driving a truck off-road, in the mountains, at night. Those projects need headlights. As the tester, you light the way.

CEM KANER, JAMES BACH, AND BRET PETTICHORD

To find out what else we can do in the Internet of Things realm, check out our IoT solutions page.