Real-time technologies are becoming a critical aspect of modern web development. Many of today’s web applications need real-time features such as notification systems, file sharing systems, live dashboards, or chats. Clients expect constant feedback from any device at any time. If you have ever dreamed of creating the next Snapchat and landing on some Forbes-like list, this is a good place to start.

Intro to SignalR

SignalR is a free, open-source Microsoft library for ASP.NET that simplifies adding real-time communication to an application. It is well suited to apps that require high-frequency updates. SignalR supports WebSockets, Server-Sent Events, and long polling, and it automatically chooses the best transport that both the server and the client support.
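The automatic transport choice can be pictured as a fallback order. The sketch below is a simplified model, not SignalR’s actual code: it picks the first transport in a preference list that both sides support. The ChooseTransport helper and the client capabilities are hypothetical; the real SignalR clients also move on to the next transport if starting the chosen one fails.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Preference order: WebSockets first, long polling as the last resort.
string[] preferred = { "WebSockets", "ServerSentEvents", "LongPolling" };

// Pick the best transport that both sides support (simplified model of negotiation).
string? ChooseTransport(IEnumerable<string> offeredByServer, ISet<string> supportedByClient) =>
    preferred.FirstOrDefault(t => offeredByServer.Contains(t) && supportedByClient.Contains(t));

var serverTransports = new[] { "WebSockets", "ServerSentEvents", "LongPolling" };

// Hypothetical client behind a proxy that blocks WebSockets.
var restrictedClient = new HashSet<string> { "ServerSentEvents", "LongPolling" };

Console.WriteLine(ChooseTransport(serverTransports, restrictedClient)); // ServerSentEvents
```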

SignalR works as an abstraction over a connection and offers two programming models: Hubs and Persistent Connections. Both models can instantly push content and information to connected clients. However, only the Hubs model lets the server call client-side functions on connected clients, using remote procedure calls (RPC) while automatically taking care of connection management. Note that Persistent Connections exist only in the original ASP.NET SignalR; ASP.NET Core SignalR, which the configuration examples in this article use, supports Hubs only.
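Under the hood, a Hubs RPC call is a message naming the client method to run, roughly what a server-side call such as Clients.All.SendAsync("ReceiveMessage", user, message) puts on the wire. The sketch below imitates SignalR’s JSON hub protocol in simplified form: the server encodes an Invocation message (type 1) with a target method name and arguments, terminated by the 0x1E record separator, and the client looks up a handler by that name. BuildInvocation, Dispatch, and the handler table are hypothetical helpers; the real protocol also has a handshake, invocation IDs, and other message types that are left out here.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Text.Json.Nodes;

// The JSON hub protocol ends every message with the ASCII record separator (0x1E).
const char RecordSeparator = '\u001e';

// Server side (simplified): encode a call to a client method as an Invocation message (type 1).
string BuildInvocation(string target, params object[] arguments)
{
    var message = new JsonObject
    {
        ["type"] = 1,
        ["target"] = target,
        ["arguments"] = JsonSerializer.SerializeToNode(arguments),
    };
    return message.ToJsonString() + RecordSeparator;
}

// Client side (hypothetical): handlers registered by method name, similar in spirit to connection.On(...).
var received = new List<string>();
var handlers = new Dictionary<string, Action<JsonArray>>
{
    ["ReceiveMessage"] = callArgs => received.Add($"{(string)callArgs[0]!}: {(string)callArgs[1]!}"),
};

// Split a frame into messages and run the matching handler for each Invocation.
void Dispatch(string frame)
{
    foreach (var raw in frame.Split(RecordSeparator, StringSplitOptions.RemoveEmptyEntries))
    {
        JsonNode message = JsonNode.Parse(raw)!;
        if ((int)message["type"]! != 1) continue; // ignore pings and other message types
        if (handlers.TryGetValue((string)message["target"]!, out var handler))
            handler(message["arguments"]!.AsArray());
    }
}

string wire = BuildInvocation("ReceiveMessage", "alice", "hello");
Dispatch(wire);
Console.WriteLine(received[0]); // alice: hello
```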

Problems with scaling real-time applications

Let’s say you have started working on your awesome idea. While you’re developing your revolutionary chat, sooner or later you’ll probably think about scaling it. All those dreams of a new Snapchat will soon vanish when you realize that you have no idea how to scale your app horizontally. Before you decide to ask your friend Dave, who mentioned something about applying for a DevOps position, panic googling will hopefully have brought you to this life-saving article.

Scaling out, or scaling horizontally, is essential when designing your application, and scaling SignalR applications horizontally is a bit trickier than scaling typical REST APIs.

WebSocket, unlike HTTP, is a stateful communication protocol that runs over TCP. After a successful HTTP handshake, both parties agree (or, if you prefer, shake hands) to upgrade the connection to the WebSocket protocol. The connection then becomes a persistent, full-duplex channel that lets the server push data to subscribed clients, avoiding polling and long polling.
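To make the upgrade step concrete: the client sends an HTTP request with Upgrade: websocket and a random Sec-WebSocket-Key, and the server answers 101 Switching Protocols with a Sec-WebSocket-Accept value derived from that key, as defined in RFC 6455. ASP.NET Core performs this for you; the minimal sketch below only illustrates the derivation, using the sample key from the RFC. ComputeAccept is an illustrative helper, not a framework API.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Fixed GUID defined by RFC 6455 for the opening handshake.
const string HandshakeGuid = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11";

// Client request (abridged):   GET /chat HTTP/1.1
//                              Upgrade: websocket
//                              Connection: Upgrade
//                              Sec-WebSocket-Key: dGhlIHNhbXBsZSBub25jZQ==
// Server response (abridged):  HTTP/1.1 101 Switching Protocols
//                              Sec-WebSocket-Accept: <value computed below>

// Sec-WebSocket-Accept = Base64(SHA-1(Sec-WebSocket-Key + GUID))
string ComputeAccept(string secWebSocketKey) =>
    Convert.ToBase64String(SHA1.HashData(Encoding.ASCII.GetBytes(secWebSocketKey + HandshakeGuid)));

string accept = ComputeAccept("dGhlIHNhbXBsZSBub25jZQ=="); // sample key from RFC 6455
Console.WriteLine(accept); // s3pPLMBiTxaQ9kK5Zz85kpJnt8A=
```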

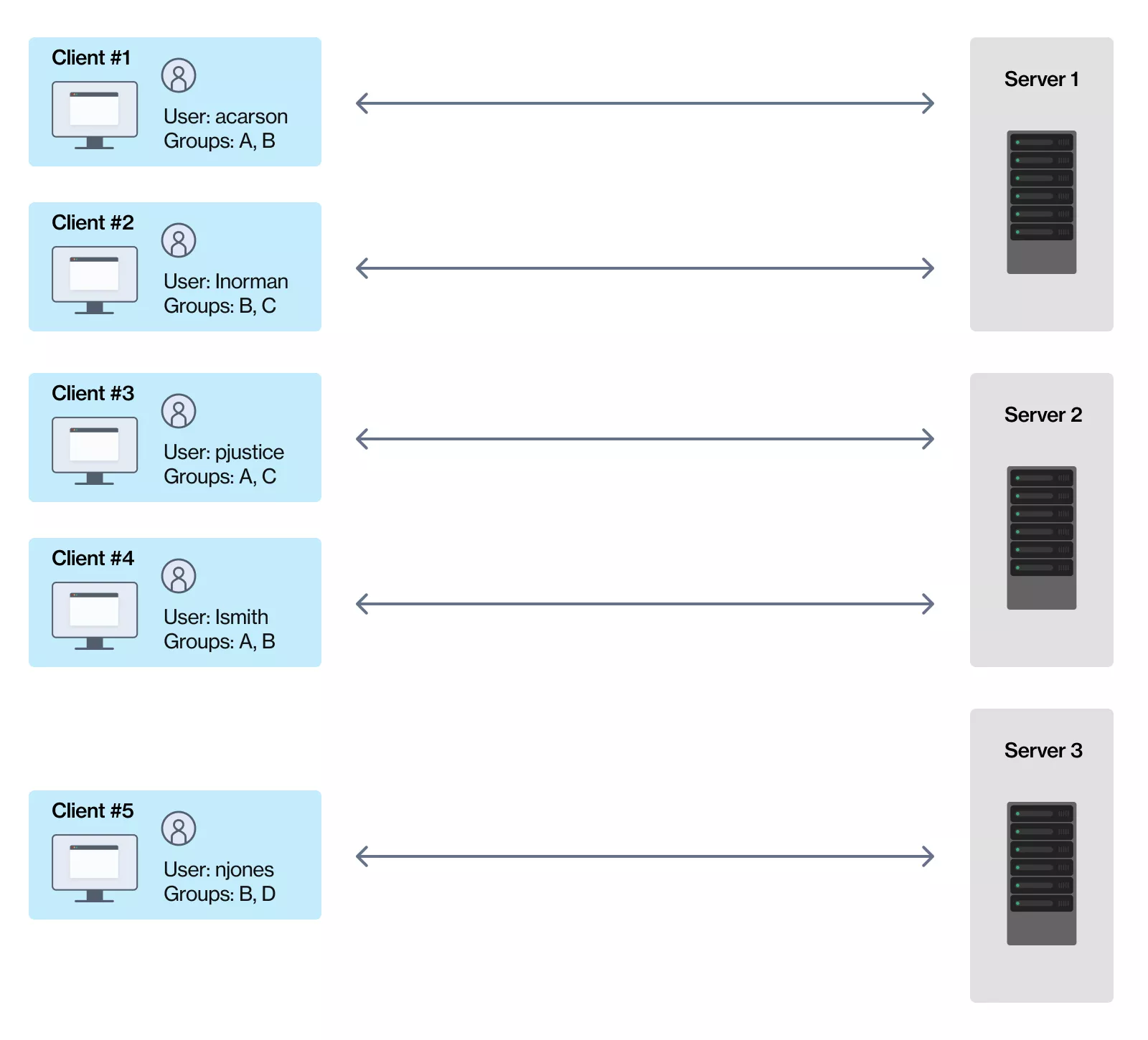

Because WebSocket connections are stateful, all traffic for a given client connection must be handled by the same server process. This is perfectly fine if you are using only one server. However, if the app runs on multiple servers, as it usually does, those connections must be managed across servers. One common solution is session persistence, often called “sticky sessions” or, by some load balancers, “session affinity”.

Sticky sessions, as the name suggests, route all of a client’s requests to the same server, which holds that client’s connection and session information. Still, this raises a question: what if that server has issues, restarts, or reaches its maximum capacity? The user would lose their session data, which is not acceptable in practice.
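Load balancers implement affinity in different ways, for example with cookies or source-IP hashing. The sketch below assumes simple hashing of a hypothetical client ID to show both sides of the trade-off: the same client keeps landing on the same server, but once that server leaves the pool the client is routed elsewhere and its state on the old server is lost. The server names, StableHash, and PickServer are illustrative only.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;
using System.Text;

// Hypothetical pool of app servers behind the load balancer.
var servers = new List<string> { "app-1", "app-2", "app-3" };

// string.GetHashCode() is randomized per process in .NET, so use SHA-256
// to get a hash that is identical on every run and every machine.
uint StableHash(string key) =>
    BitConverter.ToUInt32(SHA256.HashData(Encoding.UTF8.GetBytes(key)), 0);

// Affinity rule: the same client ID always maps to the same server,
// as long as the pool itself does not change.
string PickServer(string clientId, IReadOnlyList<string> pool) =>
    pool[(int)(StableHash(clientId) % (uint)pool.Count)];

string first = PickServer("client-42", servers);
string again = PickServer("client-42", servers);
Console.WriteLine($"client-42 -> {first}, then {again}"); // both calls pick the same server

// If that server crashes or is taken out of rotation, the client is routed
// elsewhere and whatever state it held on the old server is gone.
var remaining = servers.Where(s => s != first).ToList();
string after = PickServer("client-42", remaining);
Console.WriteLine($"{first} removed -> client-42 now goes to {after}");
```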

Let’s imagine a perfect world where those server issues do not exist and the question above never comes up. Even then, another issue arises: those perfect auto-scaling servers are still egocentric and only keep track of their own client connections.

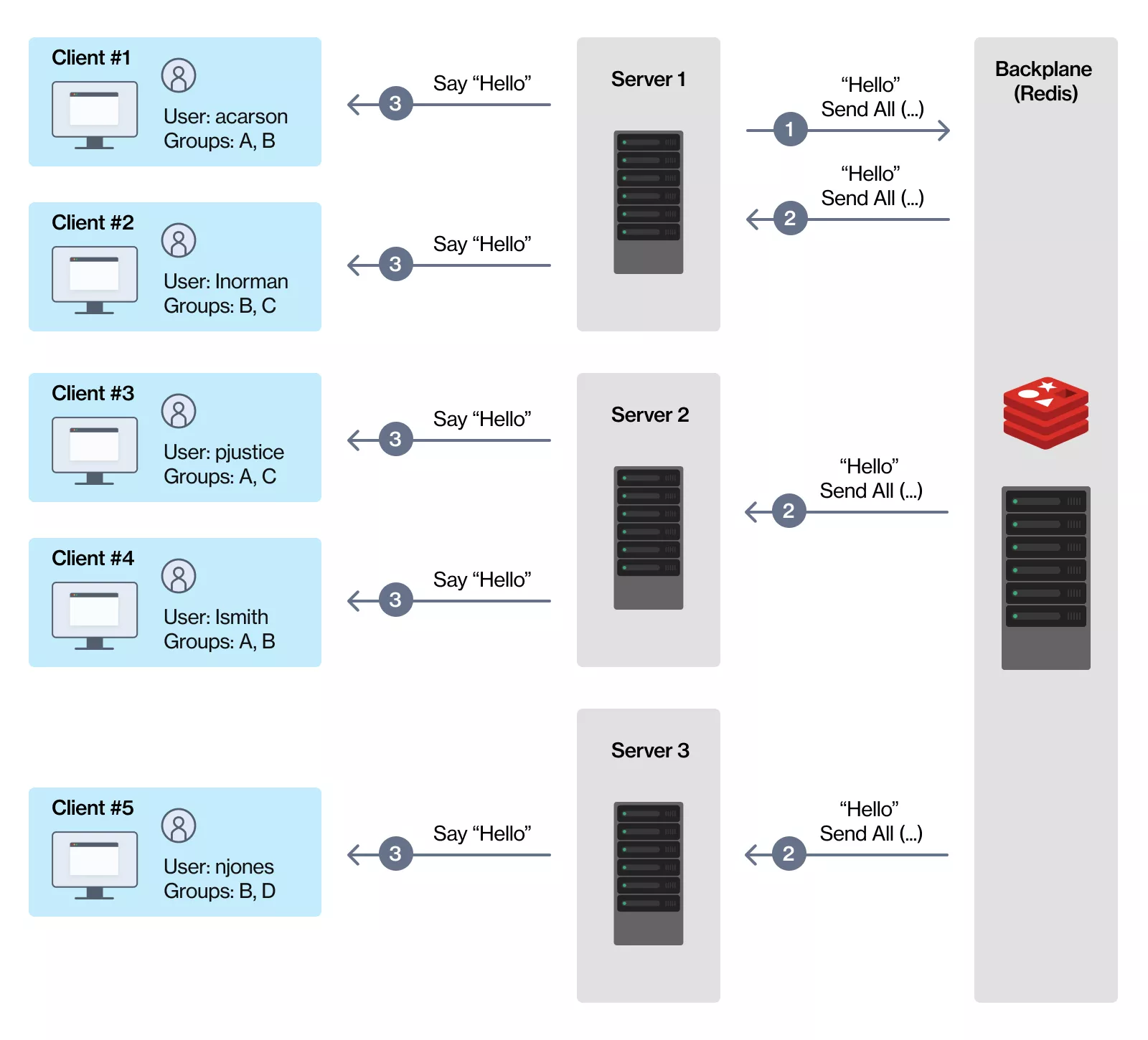

Because servers are unaware of each other’s client connections, the app cannot broadcast a payload to all clients unless the message first reaches every server. To handle these oblivious servers, you can add a shared message store with pub/sub support (such as Redis) that acts as a backplane for every app server.
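The difference a backplane makes can be simulated in memory. In the sketch below, two hypothetical servers each know only their own clients; a local broadcast misses clients on the other server, while publishing through a shared subscriber list (a stand-in for Redis pub/sub, not the Redis API) reaches every server, which then delivers to its own clients. All names are illustrative.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical deployment: each app server knows only its own connected clients.
var localClients = new Dictionary<string, List<string>>
{
    ["app-1"] = new List<string> { "alice", "bob" },
    ["app-2"] = new List<string> { "carol" },
};
var delivered = new List<string>();

// What a server can do on its own: reach only the clients connected to it.
void DeliverLocally(string server, string message)
{
    foreach (var client in localClients[server])
        delivered.Add($"{client}:{message}");
}

// Backplane (in-memory stand-in for pub/sub): every server subscribes,
// and a published message is handed to each server for local delivery.
var subscribers = new List<Action<string>>();
foreach (var serverName in localClients.Keys)
    subscribers.Add(msg => DeliverLocally(serverName, msg));

void Publish(string message)
{
    foreach (var deliver in subscribers)
        deliver(message);
}

DeliverLocally("app-1", "hi");
Console.WriteLine(string.Join(", ", delivered)); // alice:hi, bob:hi  (carol misses it)

delivered.Clear();
Publish("hello");
Console.WriteLine(delivered.Count); // 3: alice, bob, and carol all receive it
```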

Scaling out with Redis backplane

Redis is an open-source, in-memory data structure store, used as a distributed in-memory key-value database, that supports messaging with a publish/subscribe model. Azure offers its own Azure Cache for Redis service, which can serve as the Redis backplane. Aside from Redis, there are a few third-party SignalR backplane providers, such as NCache, Orleans, Rebus, and SQL Server. It is advisable to run the backplane in the same data center as the application, because network latency between them can degrade performance.

The backplane uses its pub/sub feature to distribute incoming client messages between the subscribed app servers. This means that all client connection information is passed to the backplane. The Redis backplane is best used when clients need to communicate with users connected to other servers; otherwise, the backplane can become a bottleneck. Even with a backplane, sticky sessions are still required to keep each SignalR connection on the same server, unless all clients use only WebSockets with negotiation skipped (the SkipNegotiation client option).

To configure the Redis backplane for your SignalR app, install the Microsoft.AspNetCore.SignalR.StackExchangeRedis NuGet package and then configure services in your Program.cs file:

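```csharp
// Program.cs: read the Redis connection string from configuration (e.g. appsettings.json).
var redisConnectionString = builder.Configuration["Redis:Configuration"]
    ?? throw new InvalidOperationException("Missing Redis:Configuration setting.");

builder.Services.AddSignalR().AddStackExchangeRedis(redisConnectionString, options =>
{
    // options.Configuration is StackExchange.Redis' ConfigurationOptions, not a string.
    // Example tweak: keep retrying in the background if Redis is unreachable at startup.
    options.Configuration.AbortOnConnectFail = false;
});
```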

The code above passes the Redis connection string to AddStackExchangeRedis, and the optional callback lets you adjust the Redis connection options, for example with values kept in the appsettings.json file. And that’s it! The backplane will know about the connected clients and their corresponding servers.

However, if the Redis server fails, SignalR throws exceptions indicating that messages will not be delivered. SignalR reconnects automatically when the Redis server comes back up, but it does not buffer messages, so any message sent while Redis is down is lost. Be careful to plan for potential Redis outages.
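Because SignalR will not buffer messages during a Redis outage, any retry logic has to live in your application. One possible approach, sketched below as an assumption rather than a SignalR feature, is a small outbox that keeps messages whose send failed and retries them once the backplane is reachable again. SendViaBackplane, the backplaneUp flag, and the exception type are hypothetical stand-ins for a real hub send failing; a production version would persist the outbox and bound its size.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a hub send that fails while Redis is unreachable.
bool backplaneUp = false;
var sent = new List<string>();
void SendViaBackplane(string message)
{
    if (!backplaneUp) throw new InvalidOperationException("Backplane unavailable");
    sent.Add(message);
}

// Application-level outbox: keep failed messages instead of losing them.
var outbox = new Queue<string>();
void SendOrBuffer(string message)
{
    try { SendViaBackplane(message); }
    catch (InvalidOperationException) { outbox.Enqueue(message); }
}

// Retry buffered messages in their original order once the backplane is back.
void FlushOutbox()
{
    while (outbox.Count > 0)
    {
        SendViaBackplane(outbox.Peek()); // throws again if Redis is still down
        outbox.Dequeue();                // remove only after a successful send
    }
}

SendOrBuffer("order #1 shipped"); // Redis down: buffered instead of lost
backplaneUp = true;               // Redis comes back
FlushOutbox();
SendOrBuffer("order #2 shipped");
Console.WriteLine(string.Join(" | ", sent)); // order #1 shipped | order #2 shipped
```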

Scaling out with Azure SignalR service

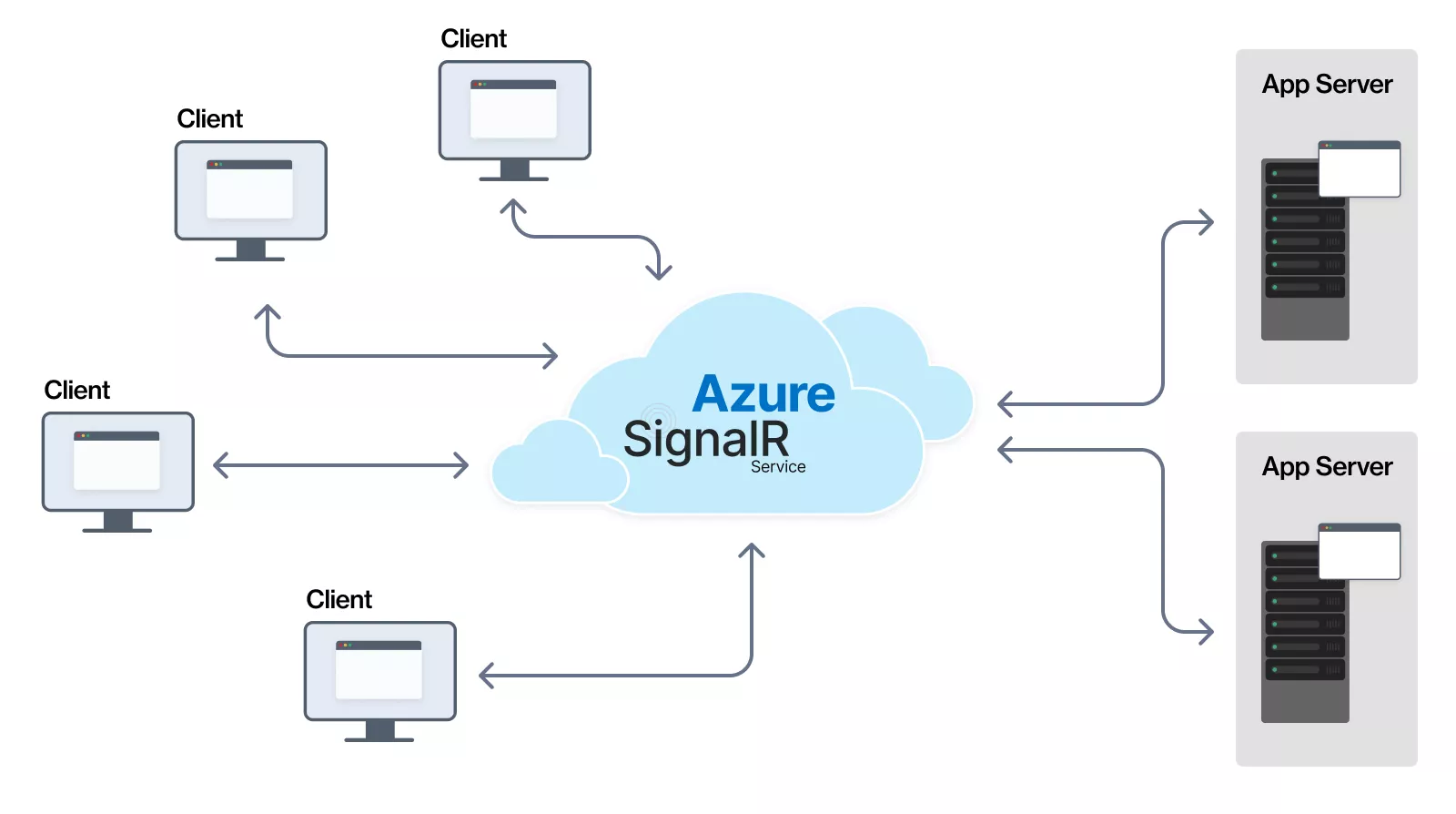

The Azure SignalR Service is a fully managed service that simplifies adding and handling real-time functionality in applications. Your application connects to the SignalR Service instance via the service protocol and can then send messages to the connected clients. The service protocol is a protocol between Azure SignalR Service and a server-side application that provides an abstract transport between a client and a server. It uses WebSockets and MessagePack to proxy messages between the service and the server.

SignalR Service acts as a proxy for real-time traffic: when a client initiates a connection to the server, the app redirects the client to connect to the service instead. The service then manages all client connections and routes messages automatically, while each app server keeps only a small number of connections to the service. Clients therefore open their WebSocket connections to the service rather than directly to the app.
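On the wire, this redirect happens during negotiation: instead of describing its own transports, the app’s negotiate endpoint answers with a url pointing at the service and an accessToken for it. A minimal sketch of how a client might interpret such a response; the ResolveTarget helper, the host names, and the token are made-up placeholders, and the real SignalR client libraries perform this step automatically.

```csharp
using System;
using System.Text.Json;

// Decide where the client should open its connection after calling the app's
// negotiate endpoint: a redirect response carries "url" (and usually "accessToken").
(string Url, string? AccessToken) ResolveTarget(string appHubUrl, string negotiateJson)
{
    using JsonDocument doc = JsonDocument.Parse(negotiateJson);
    JsonElement root = doc.RootElement;
    if (root.TryGetProperty("url", out JsonElement redirectUrl))
    {
        string? token = root.TryGetProperty("accessToken", out JsonElement tokenElement)
            ? tokenElement.GetString()
            : null;
        return (redirectUrl.GetString()!, token);
    }
    // No redirect: connect to the app server directly.
    return (appHubUrl, null);
}

// Placeholder values: "contoso" and "<jwt>" are made up for illustration.
string redirectJson =
    "{\"url\":\"https://contoso.service.signalr.net/client/?hub=chat\",\"accessToken\":\"<jwt>\"}";
var target = ResolveTarget("https://myapp.example/chat", redirectJson);
Console.WriteLine(target.Url); // https://contoso.service.signalr.net/client/?hub=chat
```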

After creating an Azure SignalR Service resource in Azure, all your code needs is the Microsoft.Azure.SignalR NuGet package. Then configure services to use the service with your connection string:

```csharp
// connectionString: the connection string of your Azure SignalR Service resource.
builder.Services.AddSignalR().AddAzureSignalR(connectionString);
```

The setup is very similar to the Redis backplane, which makes it easy to configure. There is also no need to worry about sticky sessions with Azure SignalR Service, because clients are redirected to the service as soon as they connect, and the service handles the rest. By delegating connection and message management to the service, you can also scale without having to manage how SignalR is hosted.

Scaling, outsourced

Hopefully, it will now be easier for you to choose the best solution for your next real-time app. The Azure SignalR service is simple to configure and does most of the job of scaling and configuration for you. It can also be easily integrated with other Azure services. However, it comes with a price.

Azure offers three tiers: Free, Standard, and Premium, the last of which was still in public preview at the time of writing. The Free tier is intended mainly for testing and development and allows up to 20 concurrent connections per unit and 20,000 messages per day. The Standard tier supports up to 1,000 concurrent connections per unit and a maximum of 100 units, at around 50 euro per unit per month, which is reasonable.
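A quick way to read these limits is to turn them into a unit count. The helper below uses only the Standard-tier figures quoted above (1,000 concurrent connections per unit, at most 100 units) and is an illustration, not an Azure API; message quotas and the unit sizes Azure actually lets you select may add further constraints, so check the current Azure documentation before sizing.

```csharp
using System;

// Figures from the text: Standard tier, 1,000 concurrent connections per unit, max 100 units.
const int ConnectionsPerUnit = 1_000;
const int MaxUnits = 100;

// Units needed to hold a given number of concurrent connections (rounded up, at least 1).
int UnitsNeeded(int concurrentConnections)
{
    int units = (concurrentConnections + ConnectionsPerUnit - 1) / ConnectionsPerUnit;
    if (units > MaxUnits)
        throw new ArgumentOutOfRangeException(nameof(concurrentConnections), "Exceeds the Standard tier limit.");
    return Math.Max(units, 1);
}

Console.WriteLine(UnitsNeeded(2_500));   // 3
Console.WriteLine(UnitsNeeded(100_000)); // 100
```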

There have also been requests for a consumption tier with load-based auto-scaling, which would be a welcome addition. However, if you decide to host your app in your own environment, you will need a backplane, together with sticky sessions, to handle scaling and client connections.

When you’ve mastered SignalR, you can integrate it with Azure Functions. We have just the right article to get you started: Creating Azure Functions with .NET 6