Your RAG system works great until it doesn’t. As AI workloads scale, cloud-native tools begin to show cracks in governance, versioning, and observability. We explore how Databricks fills these gaps without replacing your existing AWS or Azure infrastructure.

Most teams already run reliable AI workloads on AWS or Azure. These platforms come with mature services that power modern production systems. On Azure, that means Azure OpenAI, Azure AI Search (formerly Cognitive Search), and Blob Storage; on AWS, Amazon Bedrock, Amazon OpenSearch Service, and S3. Together, these services support high-quality RAG architectures and handle identity, networking, scaling, and operational reliability with ease.

But as AI systems grow, so do the demands on them: data volumes expand, new document sources emerge, multiple teams work with the same information, and models evolve more frequently. That’s when cracks start to show. Cloud-native tools, built primarily for storage, compute, and serving, struggle to keep up. They lack the unified governance, lineage tracking, and transformation pipelines needed to keep growing AI workloads consistent.

The challenge then shifts from building a functional RAG system to orchestrating a governed data foundation that can evolve without breaking.

At Infinum, we use Databricks to future-proof our clients’ AI architecture. We’ll walk you through its core capabilities, brick by brick, to show you how they work together to help you scale your cloud AI with confidence.

Unity Catalog: one layer to rule them all (your data, models, and vectors)

Unity Catalog is the central governance and metadata layer of the Databricks platform. It brings data, models, vector indexes, and functions under a single, consistent structure, so everything is defined, tracked, and secured in one place. Permissions are managed centrally instead of being fragmented across services that each maintain their own access rules.
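
To make the idea of one permission model concrete, here is a toy Python sketch, assuming a simplified world where every asset lives under a three-level name (catalog.schema.object) and grants on a catalog or schema flow down to the objects inside it. It is not the Unity Catalog API: `ToyCatalog`, the object names, and the principals are all hypothetical, and it leaves out details such as the USE CATALOG and USE SCHEMA privileges the real system also checks. The schema-level grant plays roughly the role of a SQL statement like `GRANT SELECT ON SCHEMA main.rag TO analysts`.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCatalog:
    """Toy governance layer; NOT the Unity Catalog API.

    Tables, models, and vector indexes share one three-level namespace
    (catalog.schema.object) and one grant table. A grant on a catalog or
    schema applies to everything beneath it.
    """

    objects: dict[str, str] = field(default_factory=dict)  # full name -> object type
    grants: dict[tuple[str, str], set[str]] = field(default_factory=dict)  # (securable, principal) -> privileges

    def register(self, full_name: str, obj_type: str) -> None:
        if full_name.count(".") != 2:
            raise ValueError("expected catalog.schema.object")
        self.objects[full_name] = obj_type

    def grant(self, securable: str, principal: str, privilege: str) -> None:
        self.grants.setdefault((securable, principal), set()).add(privilege)

    def is_allowed(self, principal: str, full_name: str, privilege: str) -> bool:
        if full_name not in self.objects:
            return False
        catalog, schema, _ = full_name.split(".")
        # The same check runs for every object type: object, then schema, then catalog.
        return any(
            privilege in self.grants.get((securable, principal), set())
            for securable in (full_name, f"{catalog}.{schema}", catalog)
        )


# Hypothetical objects and principals, for illustration only.
uc = ToyCatalog()
uc.register("main.rag.documents", "table")
uc.register("main.rag.doc_index", "vector_index")
uc.register("main.rag.answer_model", "model")
uc.grant("main.rag", "analysts", "SELECT")               # schema-level grant
uc.grant("main.rag.answer_model", "rag_app", "EXECUTE")  # object-level grant
```

Because a table, a vector index, and a model all go through the same `is_allowed` check, a single schema-level grant covers both the documents table and the vector index built from it.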

Unity Catalog also captures lineage automatically for workloads that run on Databricks, making it easy to trace how data flows through each stage of your AI pipeline, from ingestion through preprocessing and embedding to retrieval and inference.
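
Lineage is essentially a directed graph from source assets to the assets derived from them. The following sketch, assuming hypothetical asset names for each RAG stage, walks that graph breadth-first to list everything upstream of a given asset. It is a plain-Python illustration of the idea, not how Unity Catalog stores or exposes lineage.

```python
from collections import deque

# Toy lineage graph (hypothetical names). Each edge means
# "the second asset was produced from the first".
LINEAGE_EDGES = [
    ("main.raw.pdf_files", "main.rag.parsed_docs"),
    ("main.rag.parsed_docs", "main.rag.chunks"),
    ("main.rag.chunks", "main.rag.chunk_embeddings"),
    ("main.rag.chunk_embeddings", "main.rag.chunk_index"),
    ("main.rag.chunk_index", "main.rag.answer_endpoint"),
]


def upstream(asset: str, edges=LINEAGE_EDGES) -> list[str]:
    """Return every asset that `asset` depends on, nearest first (breadth-first)."""
    parents: dict[str, list[str]] = {}
    for src, dst in edges:
        parents.setdefault(dst, []).append(src)
    seen, order, queue = set(), [], deque([asset])
    while queue:
        node = queue.popleft()
        for parent in parents.get(node, []):
            if parent not in seen:
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order
```

Asking for the upstream of `main.rag.chunk_index` returns the embeddings, chunks, parsed documents, and raw files in that order, which is exactly the trail you need when a retrieval result looks wrong.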

The result is a unified and predictable governance model that reduces complexity and supports reliable AI development across teams and cloud environments.

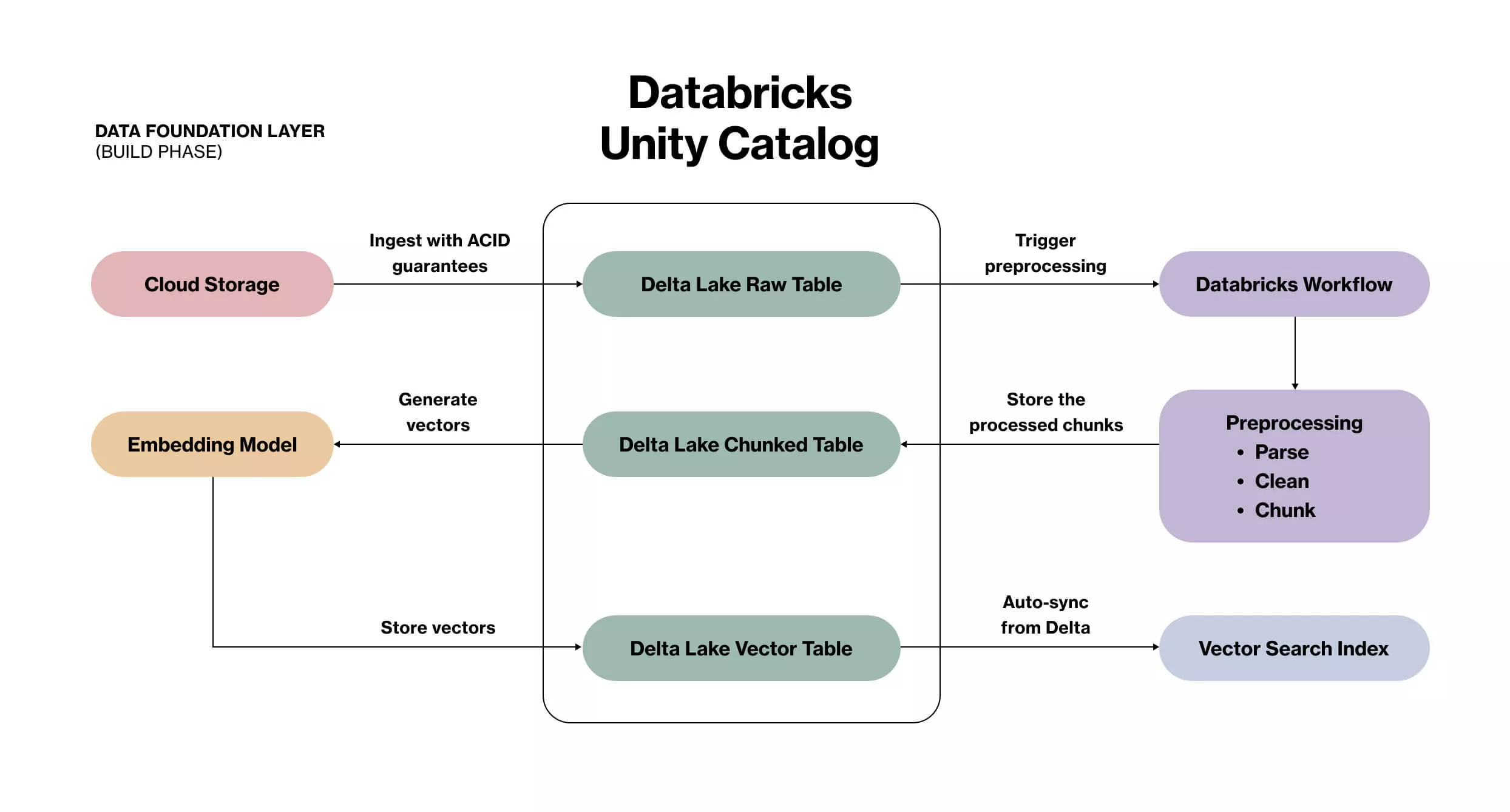

From unversioned storage to reproducible data with Delta Lake

With governance handled by Unity Catalog, the next layer to stabilize is storage itself. RAG systems thrive on structure and stability. But in practice, documents change frequently, models are retrained, and embeddings are regenerated. Without versioning and transactional integrity, it’s hard to explain model behavior or validate changes.

Delta Lake solves this challenge by layering ACID transactions, schema enforcement, and time travel on top of cloud storage. Each Delta table becomes a versioned source of truth, whether it holds structured records from databases or text extracted from unstructured documents like PDFs and HTML. Ingestion becomes predictable instead of brittle, and teams can replay experiments without guessing which files existed at a given point in time. The raw files themselves can live in Unity Catalog managed volumes, so unstructured content is governed just like structured tables.
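
The three guarantees named above can be illustrated with a toy in-memory table. This is a sketch under simplifying assumptions, not the Delta Lake API: `ToyVersionedTable` is hypothetical, and it copies whole snapshots where real Delta Lake records changes in a transaction log. In Databricks itself, you would read an older version with SQL such as `SELECT * FROM main.rag.docs VERSION AS OF 1` (the table name is hypothetical) and inspect commits with `DESCRIBE HISTORY`.

```python
import copy
from typing import Optional


class ToyVersionedTable:
    """Toy sketch of Delta-style guarantees; NOT the Delta Lake API.

    - Atomic commits: a whole batch is validated before anything is published.
    - Schema enforcement: rows must match the declared columns and types.
    - Time travel: every committed version stays readable.
    """

    def __init__(self, schema: dict[str, type]):
        self.schema = schema
        self.versions: list[list[dict]] = [[]]  # version 0 is the empty table

    def _check(self, row: dict) -> None:
        if set(row) != set(self.schema):
            raise ValueError(f"schema mismatch: got columns {sorted(row)}")
        for col, typ in self.schema.items():
            if not isinstance(row[col], typ):
                raise TypeError(f"column {col!r} must be {typ.__name__}")

    def append(self, rows: list[dict]) -> int:
        for row in rows:  # validate the entire batch first...
            self._check(row)
        snapshot = copy.deepcopy(self.versions[-1]) + copy.deepcopy(rows)
        self.versions.append(snapshot)  # ...then publish exactly one new version
        return len(self.versions) - 1

    def read(self, version: Optional[int] = None) -> list[dict]:
        """Read the latest version, or an older one ("time travel")."""
        v = len(self.versions) - 1 if version is None else version
        return copy.deepcopy(self.versions[v])


docs = ToyVersionedTable({"doc_id": str, "text": str})
v1 = docs.append([{"doc_id": "a", "text": "refund policy"}])
v2 = docs.append([{"doc_id": "b", "text": "shipping policy"}])
try:
    # The second row is missing a column, so the whole batch is rejected.
    docs.append([{"doc_id": "c", "text": "ok"}, {"doc_id": "d"}])
except ValueError:
    pass
```

The rejected batch leaves no trace: the table still has two committed versions, and version 1 can still be read exactly as it was.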

For teams prioritizing reproducibility and transparency, Delta Lake adds the versioning and transactional guarantees that object storage alone cannot provide.

Why retrieval belongs next to your data

With stable, versioned data in place, the next challenge is fast, reliable retrieval. Some engineering teams choose to complement their existing retrieval stack with Databricks Vector Search, especially when co-locating retrieval with the underlying data provides a performance or governance advantage. Integrating retrieval into the lakehouse platform offers several benefits:

- Synchronized indexes: Vector indexes stay in sync with the Delta tables that feed them.

- Automatic embedding updates: Embeddings can be configured to refresh automatically when source documents change.

- Lower latency: Retrieval runs on the same platform as the data, which can reduce network hops between services and shorten response times.

- Consistent governance: Indexes inherit permissions, lineage, and catalog rules, keeping access control and tracking consistent.

- Easier evaluation workflows: Co-located retrieval is ideal for comparing embedding models or running offline simulations to detect drift.
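
The sync behavior described in these bullets can be modeled in a few lines. In Databricks, this role is played by a Delta Sync index, which picks up changes from its source Delta table; the sketch here only mimics that idea in plain Python. `toy_embed` is a hypothetical stand-in for a real embedding model (a hashed bag of words), and `ToySyncedIndex` is illustrative, not part of any Databricks SDK. The key point is that only new or changed rows are re-embedded, and deleted rows disappear from the index.

```python
import hashlib
import math

DIM = 64  # size of the toy embedding vectors


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: a hashed, L2-normalized bag of words."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


class ToySyncedIndex:
    """Toy vector index kept in sync with a source table keyed by primary key."""

    def __init__(self) -> None:
        self.rows: dict[str, tuple[str, list[float]]] = {}  # key -> (text, embedding)
        self.embed_calls = 0

    def sync(self, source: dict[str, str]) -> None:
        # Remove rows whose primary key no longer exists in the source.
        for key in list(self.rows):
            if key not in source:
                del self.rows[key]
        # Embed only rows that are new or whose text changed.
        for key, text in source.items():
            if key not in self.rows or self.rows[key][0] != text:
                self.rows[key] = (text, toy_embed(text))
                self.embed_calls += 1

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k keys with the highest dot product (cosine, since vectors are normalized)."""
        q = toy_embed(query)
        scored = [
            (sum(a * b for a, b in zip(q, vec)), key)
            for key, (_, vec) in self.rows.items()
        ]
        scored.sort(reverse=True)
        return [key for _, key in scored[:k]]


source = {"d1": "how to request a refund", "d2": "shipping times for europe"}
index = ToySyncedIndex()
index.sync(source)  # embeds d1 and d2

source["d2"] = "shipping times for europe and asia"  # update
source["d3"] = "warranty terms for laptops"          # insert
del source["d1"]                                      # delete
index.sync(source)  # re-embeds d2, embeds d3, drops d1
```

After the second sync, the index holds exactly the documents in the source, and only four embedding calls were made in total rather than re-embedding everything on each change.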

Together, Unity Catalog handles governance, Delta Lake versions your data from parsed documents to embeddings, and Databricks Vector Search keeps its indexes in sync as that data changes.

For teams focused on performance, governance, and evaluation, this level of integration adds speed and structure to otherwise complex retrieval pipelines.

Keep your models where your data is

Getting data and retrieval right is only part of the equation. Now’s the time to plug in the models.

Databricks Model Serving lets you deploy open-source foundation models, serve your own fine-tuned variants, or run embedding models alongside the data they process, without bolting on separate infrastructure. Whether you’re working with large language models for generative AI or specialized embedding models for your RAG application, models registered in Unity Catalog stay governed and traceable like the rest of your assets.

With MLflow and Unity Catalog as the model registry, you can track a model’s entire lifecycle, from initial training to production deployment. This supports a multi-model strategy: you can pick the best model for each use case without adding operational complexity.
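
A multi-model strategy is easier to manage when callers refer to models by a stable alias rather than a specific version. MLflow’s registry, which can use Unity Catalog as its backend, supports this with aliases and `models:/<name>@<alias>` URIs. The toy registry here imitates only that aliasing idea; `ToyModelRegistry`, the model names, and the artifact strings are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyModelRegistry:
    """Toy registry with numbered versions and movable aliases; NOT the MLflow API."""

    versions: dict[str, list[str]] = field(default_factory=dict)  # name -> artifacts, v1 first
    aliases: dict[tuple[str, str], int] = field(default_factory=dict)  # (name, alias) -> version

    def register(self, name: str, artifact: str) -> int:
        self.versions.setdefault(name, []).append(artifact)
        return len(self.versions[name])  # versions are numbered from 1

    def set_alias(self, name: str, alias: str, version: int) -> None:
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"{name} has no version {version}")
        self.aliases[(name, alias)] = version

    def resolve(self, uri: str) -> str:
        """Resolve 'name@alias' to the artifact the alias currently points at."""
        name, alias = uri.split("@")
        return self.versions[name][self.aliases[(name, alias)] - 1]


registry = ToyModelRegistry()
registry.register("main.rag.embedder", "embedder-small")
v2 = registry.register("main.rag.embedder", "embedder-large")
registry.register("main.rag.answerer", "answer-llm-ft")
registry.set_alias("main.rag.embedder", "champion", 1)
registry.set_alias("main.rag.answerer", "champion", 1)

# Each use case points at an alias, not a version number.
ROUTES = {"embed": "main.rag.embedder@champion", "answer": "main.rag.answerer@champion"}

# Promote the larger embedder once it has been evaluated.
registry.set_alias("main.rag.embedder", "champion", v2)
```

Promoting the larger embedder is a single alias change; the `ROUTES` table that application code reads from never has to be edited.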

No more duct-taping your AI pipelines together

Modern retrieval-augmented generation workflows require more than just storage and compute. They need orchestration, monitoring, and continuous improvement loops. Databricks provides integrated tooling for the entire RAG architecture:

- AI Playground: Quickly prototype and test different foundation models and prompts in an interactive environment. See how generative AI models respond when given context from your data.

- Mosaic AI Agent Framework: Build intelligent agents that go beyond simple Q&A. These agents can perform complex, multi-step tasks by querying structured data, retrieving documents from vector stores, and synthesizing answers.

- Databricks Workflows: Orchestrated pipelines that ingest documents, clean them, split them into chunks, embed them, index them, and validate the results, all within the lakehouse. Keeping these data-intensive steps in one place reduces cross-service coordination overhead.
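
The Workflows bullet lists the stages a document passes through. A minimal sketch of those stages as plain Python functions follows, assuming word-based chunking with overlap as the segmentation strategy and a hypothetical `embed` placeholder in place of a real embedding endpoint. In a Databricks job, each stage might become its own task; here they simply run in sequence.

```python
import re


def clean(text: str) -> str:
    """Strip simple HTML tags and collapse whitespace (deliberately naive)."""
    text = re.sub(r"<[^>]+>", " ", text)
    return " ".join(text.split())


def segment(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def embed(chunk: str) -> list[float]:
    """Hypothetical placeholder for a call to an embedding model endpoint."""
    return [float(len(chunk)), float(len(chunk.split()))]


def run_pipeline(raw_docs: dict[str, str], size: int = 50, overlap: int = 10) -> dict[str, list[float]]:
    index: dict[str, list[float]] = {}
    for doc_id, raw in raw_docs.items():  # ingest
        for i, chunk in enumerate(segment(clean(raw), size, overlap)):  # clean + segment
            index[f"{doc_id}#{i}"] = embed(chunk)  # embed + index
    # Validate: every ingested document must have produced at least one chunk.
    missing = [d for d in raw_docs if not any(k.startswith(f"{d}#") for k in index)]
    if missing:
        raise RuntimeError(f"no chunks produced for {missing}")
    return index
```

Overlap keeps text that straddles a chunk boundary retrievable from either side; the 50-word window and 10-word overlap defaults are arbitrary starting points, not tuned values.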

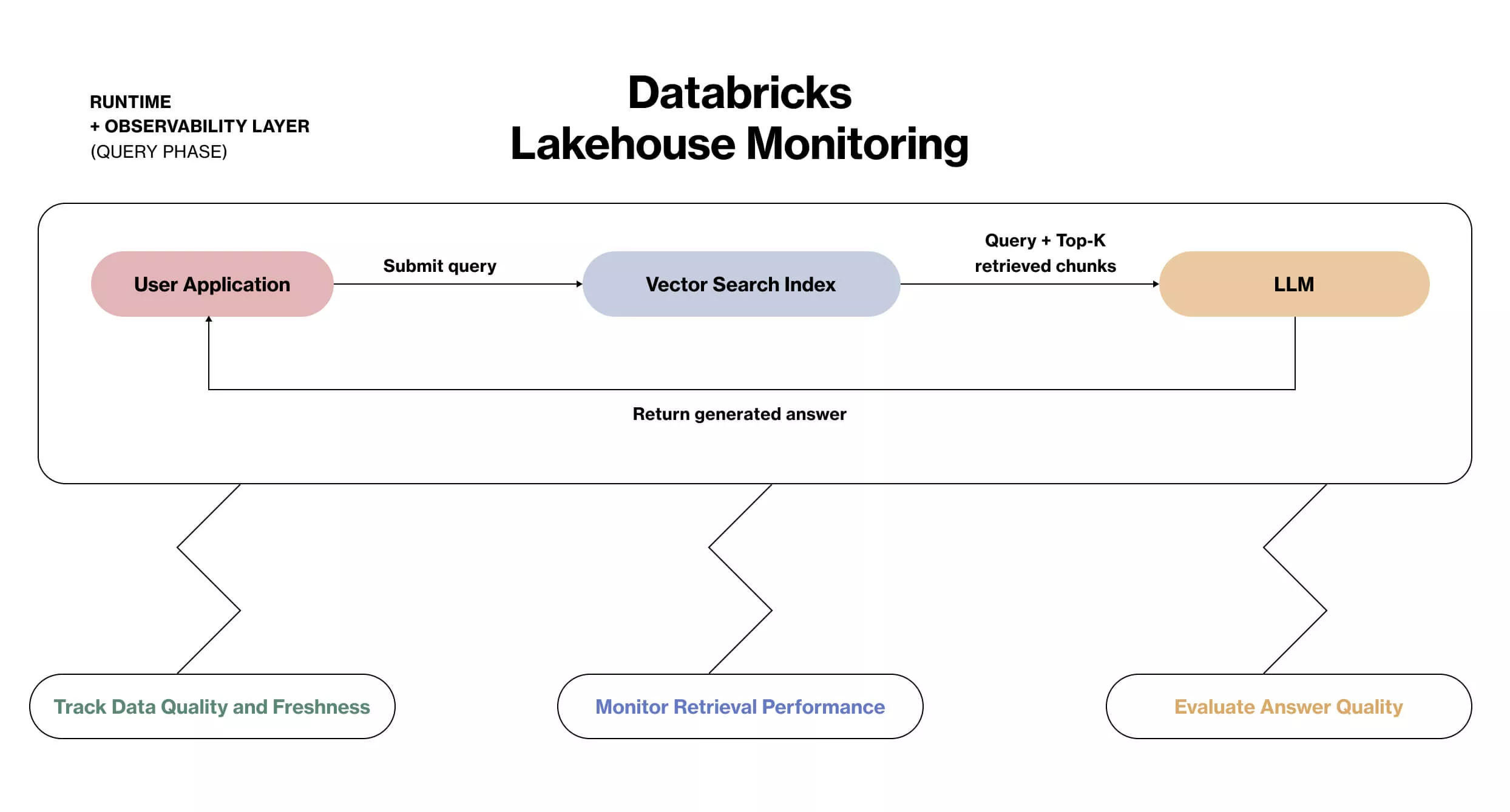

You can’t improve your system if you can’t observe it

As RAG systems mature, observability becomes just as critical as modeling itself. Retrieval performance shifts gradually. Embeddings drift as data evolves. Large language model answers change with new versions.

Lakehouse Monitoring tracks data quality and drift on your tables and, through inference tables that log model requests and responses, model behavior as well. Instead of piecing together logs across disconnected services, you get a single, consolidated view of AI behavior in production.
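
Lakehouse Monitoring computes its own drift statistics; the sketch here swaps in a much simpler technique, comparing the centroids of two batches of embeddings by cosine similarity, to show what “embeddings drift” can mean numerically. The function names, the sample vectors, and the 0.9 threshold are all assumptions, not recommended values.

```python
import math


def centroid(vectors: list[list[float]]) -> list[float]:
    """Element-wise mean of a non-empty batch of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def embedding_drift(
    baseline: list[list[float]], current: list[list[float]], threshold: float = 0.9
) -> tuple[float, bool]:
    """Return (centroid cosine similarity, drifted?) for two batches of embeddings."""
    similarity = cosine(centroid(baseline), centroid(current))
    return similarity, similarity < threshold


# Hypothetical 2-D embeddings, small enough to reason about by hand.
baseline = [[1.0, 0.0], [1.0, 0.1]]
stable = [[1.0, 0.05], [1.0, 0.05]]
shifted = [[0.0, 1.0], [0.1, 1.0]]
```

A centroid comparison is cheap but coarse: it misses changes that leave the average vector unchanged, which is one reason production monitors rely on richer distribution statistics.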

In practice, a user query is enriched with relevant context from Vector Search, answered by a large language model, and continuously evaluated through Lakehouse Monitoring, which tracks data quality, retrieval relevance, and response reliability over time.
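
One way to put a number on retrieval relevance is to compare what Vector Search returned with a set of documents labeled as relevant for each query. The sketch computes two standard metrics, hit rate at k and mean reciprocal rank, in plain Python; the record layout (retrieved IDs in rank order, plus a set of relevant IDs) and the sample data are assumptions, and feeding these numbers into Lakehouse Monitoring is not shown.

```python
# Each record: (retrieved document IDs in rank order, set of relevant IDs).
Record = tuple[list[str], set[str]]


def hit_rate_at_k(results: list[Record], k: int) -> float:
    """Fraction of queries with at least one relevant document in the top k."""
    hits = sum(1 for retrieved, relevant in results if relevant & set(retrieved[:k]))
    return hits / len(results)


def mean_reciprocal_rank(results: list[Record]) -> float:
    """Average of 1/rank of the first relevant document (0 if none was retrieved)."""
    total = 0.0
    for retrieved, relevant in results:
        for rank, doc_id in enumerate(retrieved, start=1):
            if doc_id in relevant:
                total += 1.0 / rank
                break
    return total / len(results)


# Hypothetical evaluation records.
eval_results: list[Record] = [
    (["d3", "d1", "d7"], {"d1"}),  # relevant doc at rank 2
    (["d2", "d5", "d9"], {"d2"}),  # relevant doc at rank 1
    (["d4", "d6", "d8"], {"d1"}),  # relevant doc not retrieved
]
```

Tracking these numbers on a fixed evaluation set after every re-embedding or model change makes gradual retrieval regressions visible before users notice them.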

A question every AI team should ask

If your AI workload doubled in size tomorrow, would your current data and governance structures scale with the same confidence as your application layer?

If the answer isn’t a clear yes, it might be time to lay a stronger foundation with Databricks.

Introducing Databricks into an existing environment is not a platform replacement. It is an architectural enhancement that consolidates governance, data reliability, model lifecycle management, and observability.

The underlying cloud continues to operate application and networking layers, while Databricks provides the durable, governed data foundation needed for long-term AI operations. With Databricks capturing ~17% of the data warehouse market as of November 2025, its role in enterprise AI infrastructure continues to grow.

If you’re ready to accelerate your RAG architecture or take the next leap in your AI platform, our team can help you build a modern, scalable foundation designed for long-term success.