Last year, I worked on a project in which I needed to parse and handle large .xlsx files. Some of those files had more than 200K rows. I was looking for a gem that could efficiently do the job.

I wanted a simple solution that would just iterate over each row, parse the contents of the row and save data in database tables.

Popular gems in that time were Roo and Spreadsheet. I also found a simple gem called Simple xlsx reader that was easy to use and had everything I needed. Except one thing.

When I called SimpleXlsxReader.open(file_path), memory consumption would go to 2GB and it would stay that way until the garbage collector cleaned it.

What I needed was a library that would read Excel rows in streams. At that time, none of the mentioned gems had that ability. I found a gem called Creek that does only that – stream parsing large excel files. It is simple and works well. Memory consumption while parsing these files was now under 200 MB, which is acceptable. In the meantime, Roo was updated and now it has the same ability.

Also, to reduce the number of SQL queries, I implemented saving data in batches – 1000 rows in 1 query. So, my method reads a thousand rows, makes an array of ActiveRecord objects and then saves them at once. I used the gem called ActiveRecord Import for this purpose.

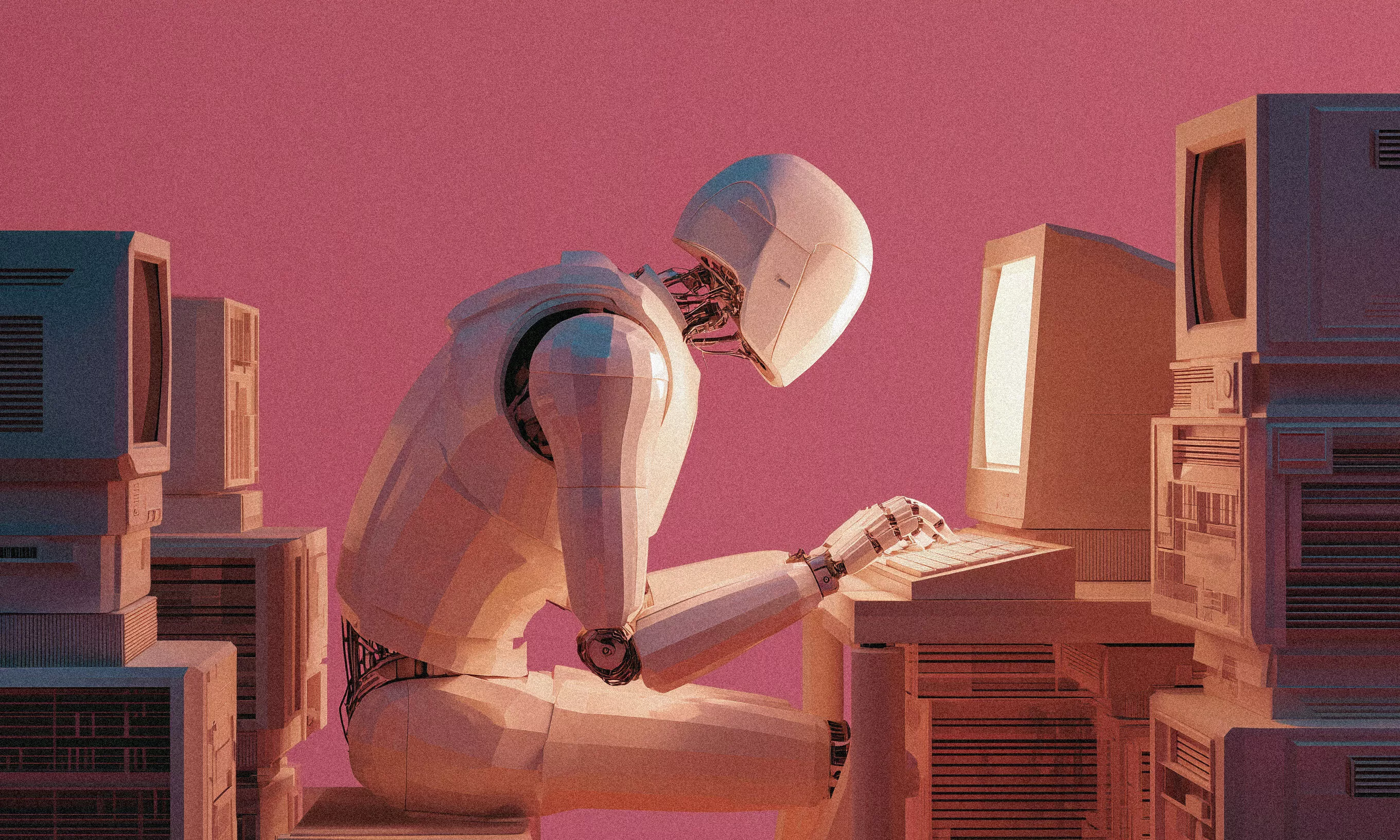

class ExcelDataParser

def initialize(file_path)

@file_path = file_path

@records = []

@counter = 1

end

BATCH_IMPORT_SIZE = 1000

def call

rows.each do |row|

increment_counter

records << build_new_record(row)

import_records if reached_batch_import_size? || reached_end_of_file?

end

end

private

attr_reader :file_path, :records

attr_accessor :counter

def book

@book ||= Creek::Book.new(file_path)

end

# in this example, we assume that the

# content is in the first Excel sheet

def rows

@rows ||= book.sheets.first.rows

end

def increment_counter

self.counter += 1

end

def row_count

@row_count ||= rows.count

end

def build_new_record(row)

# only build a new record without saving it

RecordModel.new(...)

end

def import_records

# save multiple records using activerecord-import gem

RecordModel.import(records)

# clear records array

records.clear

end

def reached_batch_import_size?

(counter % BATCH_IMPORT_SIZE).zero?

end

def reached_end_of_file?

counter == row_count

end

end

Finally, this task is being executed asynchronously in a background job since it’s too heavy to handle in the web process.