Knowing whether users keep coming back is crucial to the long-term success of any digital product. Help your clients make data-driven decisions by crafting an effective customer retention dashboard.

We often hear about companies collecting our data while we use their products. However, we rarely hear about what can be done with that data.

First of all, user data can inform us about the number of users our product has. But more importantly, it will tell us how well we retain those users on our site or using our service.

Knowing whether users keep coming back is crucial to the long-term success of any digital product. This article will show you one way to retrieve and visualize your customer retention data with the help of a customer retention dashboard.

What is a customer retention dashboard?

A customer retention dashboard is a dashboard that shows how successfully new users are retained on a site or service. Customer retention is an important product success metric that shows the staying power of a product.

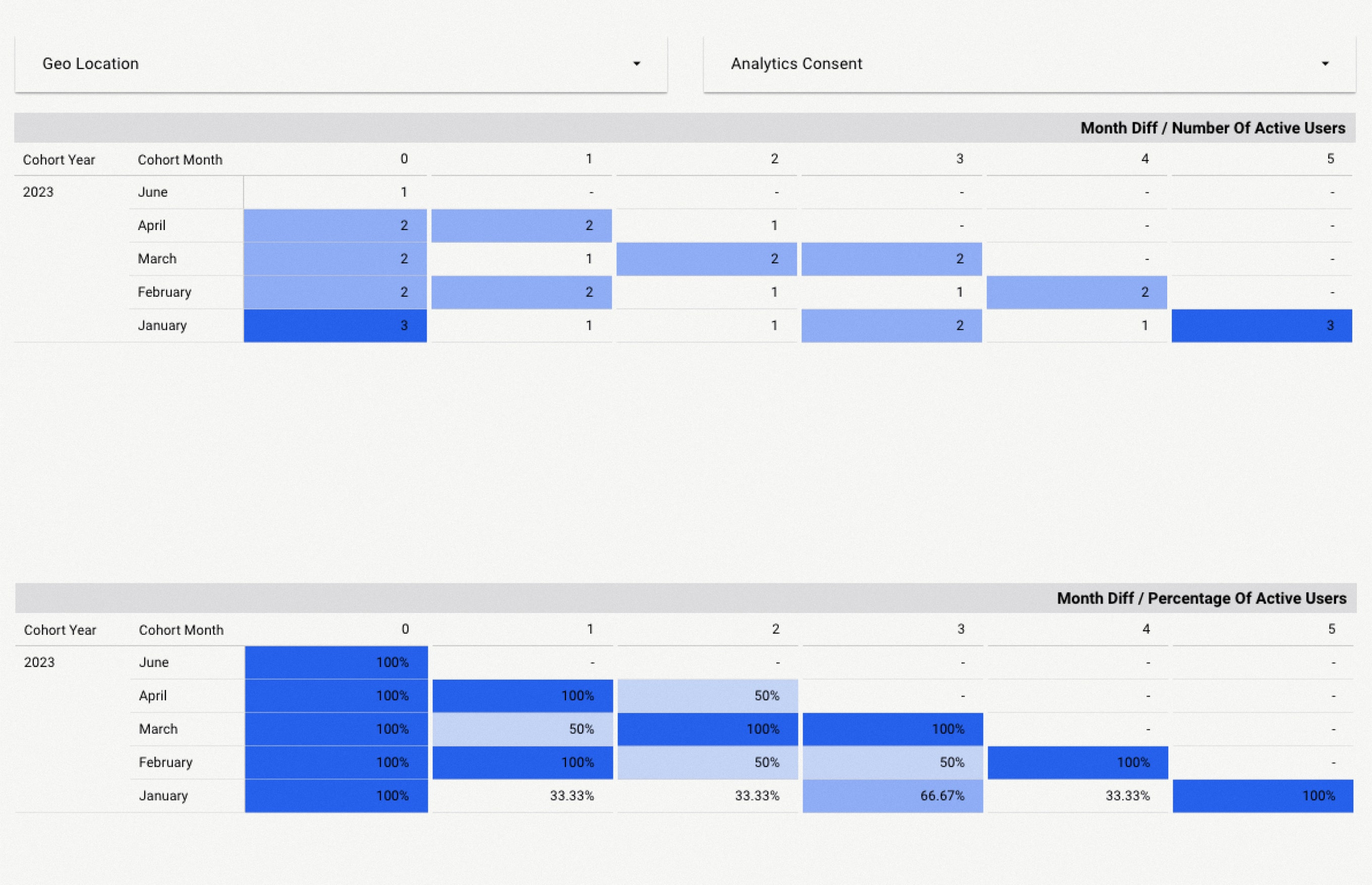

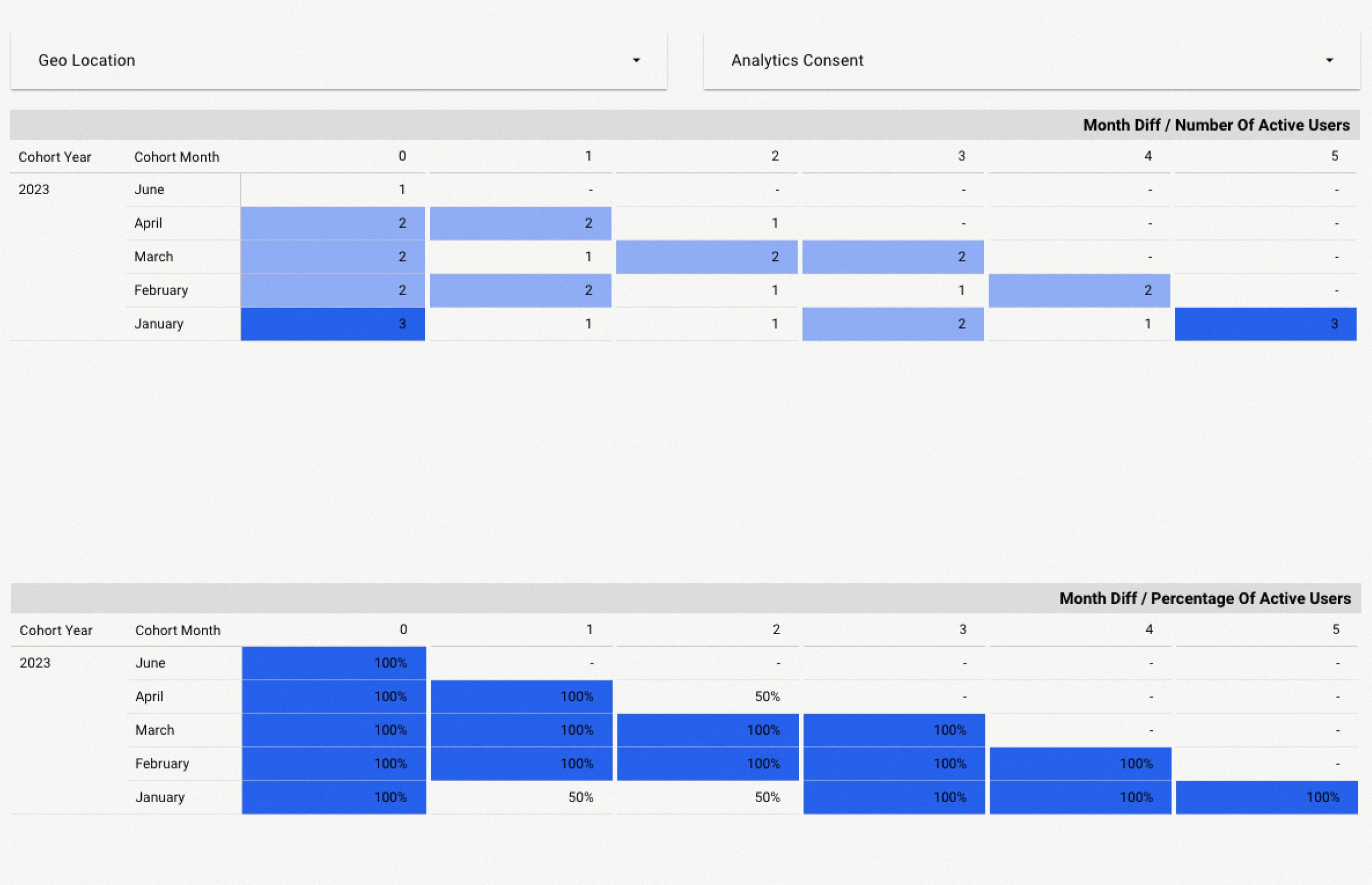

Let’s say in January we gained 100 new users. A customer retention dashboard will tell us how many users out of those 100 are still active in February, March, and the following months (see the image below). This can be very useful when we want to know if we are actually meeting users’ needs and how active they are.

If we manage to attract new users but lose many of them within a month, then the question is: are we actually meeting their needs, and are they really satisfied with our product?

It’s important to note that the customer retention dashboard doesn’t show us where issues affecting customer retention might lie, but it does show us how engaged users are. If we have a low retention rate, then we need to conduct various analyses to find out why users are not engaged with our product.

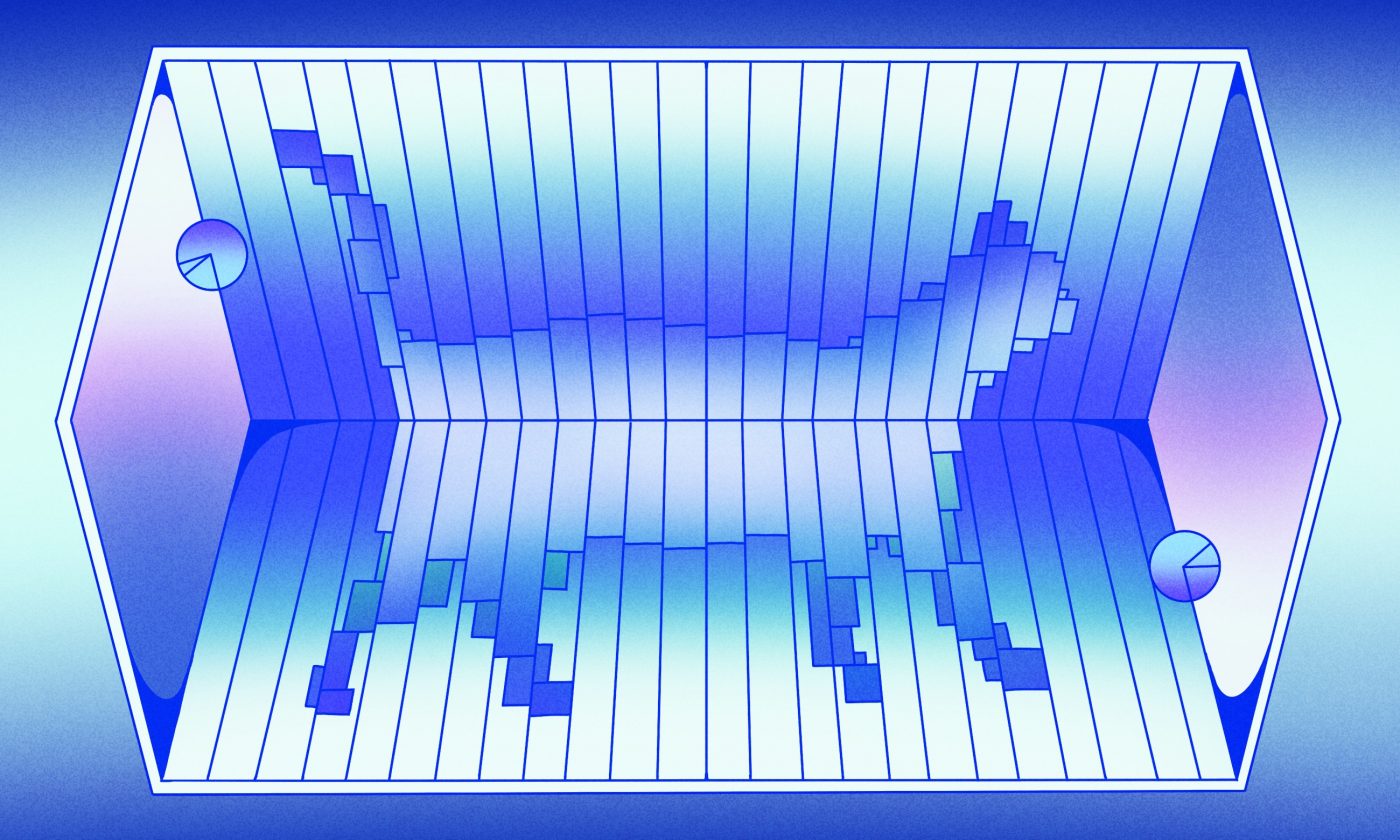

In the image below, you can see an example of the dashboard we are going to build in this blog.

Getting the definitions out of the way

Before we dive into the code, let’s define a few things so that we are all on the same page.

These are some of the terms we’ll be using:

- Cohort – in this context, a group of users that share the same characteristics. In our case, the date of first usage is the characteristic with which we will group users.

- CTE or Common Table Expression – a temporary table that is created by SELECT clause.

- Window function – an aggregate and ranking function over a particular window (set of rows).

- Upsert – a SQL clause that uses a combination of insert and update.

For this blog post, we will use mock data that represents the usage of 10 users using a non-existent application. If you want to code along, you can download the data from our repository at GitHub.

The source table we’ll use is based on the standard Firebase table schema, more on which you can learn in the official Firebase docs. The main differences between a real table and the table we are going to use are that:

- Our source table will only have the column that we actually need

- This data is something of a “perfect” case. It doesn’t have various edge cases that you would have with real data. The purpose here is to create an illustrative starting point for creating the customer retention dashboard

- In this non-existent application, we will only have default events. We won’t add more complexity with custom events

Viewing user data

Let’s imagine a situation where a stakeholder asks us to create a customer retention dashboard. However, the stakeholder has three requirements:

- They want to be able to filter data by user location and analytics consent

- They would like to see a graph with actual numbers and another one with percentages

- They want to be able to observe the trend on a monthly basis

Before every data-field project, we should ask ourselves whether we have the data that can support the client’s requirements. Based on these requirements, we need to collect the user activity data (scrolling, clicks, likes, screen view, etc.) and the source for the attributes by which stakeholders will filter.

Every project is different and can be set up in a different way, so you need to look at your infrastructure, see where your data is stored, and know how you can access it. We’ve based this tutorial on the Firebase Analytics service since it is well-known and widely used.

If you want to use Firebase you first need to do two important things. First, you have to integrate Firebase SDK with your application so that it can collect all the relevant events (the user data). Then, you will need to integrate Firebase with your warehouse so that the data can be stored in permanent storage. Firebase offers very seamless integration with Google Big Query, and that is the warehouse that we are going to use in this blog.

Using Firebase

When we integrate Firebase with BigQuery, we will see a table that has a lot of columns. Let’s go through the columns that we need in order to successfully create a customer retention dashboard.

- Event date column – a date in the format yyyymmdd

- Event timestamp column – number of microseconds (UNIX time)

- User pseudo ID column – application instance ID (this is not user ID, which is stored in a separate column that should be empty if the user didn’t give analytics consent)

- Geo column – contains all geo data (continent, country, etc). This is extracted from the IP address. From here, we will extract the user’s location

- User properties column – an array that contains all user attributes. From here, we will extract analytics consent attributes

You can learn more about Firebase with their official documentation.

Lastly, let’s define two more things. We will define our cohort by the first event, and we will define a user as active if they have at least one user engagement event. User_engenemant event is the default event that Firebase collects regardless of analytics consent.

Getting down to the code

First, we will do a simple version and then an optimized version of our customer retention dashboard. Let’s split the process into two steps: processing user activity, and calculating customer retention. The main reasons for this are separation of concerns and keeping the query simple so that when other people read the code, or if you return to it after a couple of months, it is much easier to understand.

Step #1: Processing user activities

Firebase collects a lot of events per user, and the more complex the app is, the more events there will be.

Remember, a user is active when there is at least one user engagement event. Since the stakeholders want to see monthly trends, in this step, we will look for all active users for each month. In other words, for each month, we need to create one row per user that has at least one event. It isn’t really important what time or date in the month an event happened; all that matters is whether or not the user performed an activity.

Once we have found all active users, we do a cross-join with all possible months and exclude invalid combinations. A combination is invalid if the year and month that we paired don’t match the event date year and month, e.g., if the event happened on 2023-06-23, we want to pair it with the date 2023-06-01, so that events are grouped according to month.

Furthermore, we need to find the cohort date for each user. Previously, we defined cohort as the date of the first event ever recorded for a user. Along with cohort, we also extract user attributes. Finally, we connect each user’s activity with cohort and attribute data. So, to summarize:

- Get one row for every active month

- Generate all months since the application release

- Cross-join generated dates with users’ activities, and exclude rows where year and month are different for generated date and user activity

- For each user, we find the cohort date, and we extract user attributes

- Aggregate with attributes

WITH events AS (

SELECT

user_pseudo_id,

PARSE_DATE('%Y%m%d', event_date) AS event_date,

event_timestamp,

e.geo.country AS geo_location,

(SELECT value.string_value FROM UNNEST(e.user_properties) WHERE key ="analytic_type")

AS analytics_consent

FROM `analytics.events_*` AS e

WHERE event_name = 'user_engagement'

GROUP BY 1, 2, 3, 4, 5

),

In the events CTE, we are extracting all relevant data that we will need in the later CTEs. The main reason for creating this CTE is to have a cleaner query overall so that you can change the source table much more easily.

The main action points here are:

- Unnesting the

user_propertiesfield in order to get theanalytics_consentattribute - Parsing the date value from the string (in

yyyymmddformat) toDATEobject, so that we can work with dates much more easily - Selecting all the columns that we need

generated_dates AS (

SELECT generated_date

FROM UNNEST(GENERATE_DATE_ARRAY(

'2023-01-01',

CURRENT_DATE(),

INTERVAL 1 MONTH)

) AS generated_date

),

In the generated_dates column, we generate all the months since the app was released. Look at the following CTE to see why we need this.

user_activities AS (

SELECT

user_pseudo_id,

generated_date AS first_date_of_active_month,

MAX(event_date) AS date_of_last_activity,

FROM events, generated_dates

WHERE EXTRACT(YEAR FROM event_date) = EXTRACT(YEAR FROM generated_date)

AND EXTRACT(MONTH FROM event_date) = EXTRACT(MONTH FROM generated_date)

GROUP BY 1, 2

),

Here, we are cross-joining data from events and generated_dates CTEs. Each row in the events CTE will have all the months since the app’s release.

After we cross-join this data, we filter out all invalid rows. A row is valid only when the year and month are the same for event_date and generated_date rows.

Lastly, we aggregate event_date by grouping by user_pseudo_id and generated_date (which we rename to first_date_of_active_month).

The final result for this CTE is that for each month when a user activity was recorded, there will be a corresponding row, and we will see the latest activity date in that active month (we don’t need the latest activity date column, but it can help when you are checking if a query works properly).

new_users_cohort AS (

SELECT

user_pseudo_id,

MIN(event_date) AS cohort_date, -- date of first event

MIN(geo_location) AS geo_location,

MIN(analytics_consent) AS analytics_consent

FROM events

GROUP BY 1

),

In the new_users_cohort CTE, we find a cohort for each user. If you remember, in the previous section, we defined cohort as a user’s first event. So here, we find the date of the first event for each user, along with all the attributes we need.

new_users_cohort_with_activities AS (

SELECT cohort.user_pseudo_id,

cohort.cohort_date,

activities.first_date_of_active_month,

activities.date_of_last_activity,

cohort.geo_location,

cohort.analytics_consent

FROM new_users_cohort AS cohort

LEFT JOIN user_activities AS activities

ON cohort.user_pseudo_id = activities.user_pseudo_id

), In the last CTE, we combined all the data together. Here, we connect user cohorts with user activities.

SELECT EXTRACT(YEAR FROM cohort_date) AS cohort_year,

EXTRACT(MONTH FROM cohort_date) AS cohort_month,

user_pseudo_id,

first_date_of_active_month,

date_of_last_activity,

analytics_consent,

geo_location

FROM new_users_cohort_with_activities

In the final query, we select all the data together. In this step, we also extract all the necessary data (in this case, cohort_year and cohort_month).

Step #2: Calculating customer retention

We can calculate customer retention now that we have collected user activity data. This step is much easier and simpler. We follow this logic:

- calculate the number of users for each

cohort_year,cohort_month,first_date_of_active_month,analytics_consent, andgeo_location - calculate the difference in months between

first_date_of_active_monthand cohort date - find

max_number_of_users

WITH calculated_number_of_users AS (

SELECT

cohort_year,

cohort_month,

first_date_of_active_month,

COUNT(DISTINCT user_pseudo_id) AS number_of_users,

analytics_consent,

geo_location

FROM `dashboards.UserRetention_UsersActivities`

GROUP BY 1, 2, 3, 5, 6

),

In the calculated_number_of_users CTE, we calculate the number of users for cohort and attributes. The source in this CTE is the result of a query that processes user activity.

calculated_month_diff AS (

SELECT cohort_year,

cohort_month,

DATE_DIFF(first_date_of_active_month, DATE(cohort_year, cohort_month, 1), MONTH) AS month_diff,

number_of_users,

analytics_consent,

geo_location

FROM calculated_number_of_users AS d

),

In the calculated_month_diff CTE, we calculate the difference in months between first_date_of_active_month and cohort date DATE(cohort_year, cohort_month, 1).

max_number_of_users_for_cohort AS (

SELECT *

FROM calculated_month_diff

WHERE month_diff = 0

)

In this CTE, we find the maximum number of users. This is the number of users from the first cohort, or where month_diff is zero.

SELECT

d.cohort_year AS cohort_year,

d.cohort_month AS cohort_month,

d.month_diff AS month_diff,

d.number_of_users AS number_of_users,

m.number_of_users AS max_number_of_users,

d.analytics_consent AS analytics_consent,

d.geo_location AS geo_location

FROM calculated_month_diff AS d

LEFT JOIN max_number_of_users_for_cohort m

ON d.cohort_year = m.cohort_year

AND d.cohort_month = m.cohort_month

AND d.analytics_consent = m.analytics_consent

AND d.geo_location = m.geo_location

Lastly, we combine the calculated_month_diff and max_number_of_users_for_cohort CTEs in order to get the final data.

We finally come to the point where we have transformed the data into a form that we can use in the customer retention dashboard. Looker Studio offers a pivot table with a heatmap, which is the graph we will use to visualize our data. Once we set up a pivot table, we will see a dashboard that looks something like this:

If you look closely you will see one big issue – the percentage table does not show the correct values. However, when you dig deeper and look at the data you will see that our query does work properly; it just doesn’t cover one case that may occur.

The formula that we are using for calculating percentage in Looker Studio looks like this: SUM(number_of_users) / SUM(max_number_of_users). So, if we want a correct percentage, we need the sum of all users for each month_diff and each cohort and the sum of all users for each cohort.

This potential case is very specific. The query for calculating retention will calculate the number of active users and find the maximum number of users for each cohort_year, cohort_month, month_diff, and all the attributes, and then create a row for each combination that exists. The problem occurs when there are no active users for a specific cohort_year, cohort_month, month_diff, or attributes in the source data (data collected by Firebase).

If we don’t have user data in the data collected (in this case, because no users were active), no row will be created. Therefore, for sum(max_number_of_users), we are only taking combinations of cohort_year, cohort_month, month_diff, and all the attributes that recorded at least one active user. What we need to do is generate rows for combinations that did not have any active users for that month.

Here is an additional query to make sure that we have all the data:

MERGE `dashboards.UserRetention` AS existing_data

USING ( This query will append the missing data to the table. We achieve this by using an upsert query:

WITH month_zero_data AS (

SELECT *

FROM `dashboards.UserRetention`

WHERE month_diff = 0

),

Firstly, we must select all the data that has month_diff zero, because those are the cohorts for all combinations of existing attributes.

generated_month_diff_options AS (

SELECT generated_month_diff

FROM UNNEST(

GENERATE_ARRAY

(

0,

(SELECT DATE_DIFF(

CURRENT_DATE(),

(

SELECT MIN(cohort_date)

FROM (

SELECT DATE(cohort_year, cohort_month, 1) AS cohort_date

FROM `dashboards.UserRetention`

)

),

MONTH)

)

)

) AS generated_month_diff

)

We also need to generate all possible instances of month_diff. So, in the generated_month_diff_options CTE, we generate integer values from zero to the maximum month_diff value that exists. We calculate the maximum month_diff possible by calculating the difference between the first cohort date DATE(cohort_year, cohort_month, 1) and today’s date.

SELECT cohort_year,

cohort_month,

generated_month_diff AS month_diff,

0 AS number_of_users,

max_number_of_users,

analytics_consent,

geo_location

FROM month_zero_data, generated_month_diff_options

WHERE generated_month_diff != 0 AND generated_month_diff <= DATE_DIFF(CURRENT_DATE(), DATE(cohort_year, cohort_month, 1), MONTH)

) AS new_data

In the main query, we cross-join month_zero_data and generated_month_diff_options, and we exclude months that haven’t happened yet.

ON existing_data.cohort_year = new_data.cohort_year

AND existing_data.cohort_month = new_data.cohort_month

AND existing_data.month_diff = new_data.month_diff

AND existing_data.max_number_of_users = new_data.max_number_of_users

AND existing_data.analytics_consent = new_data.analytics_consent

AND existing_data.geo_location = new_data.geo_location

WHEN NOT MATCHED THEN

INSERT VALUES(cohort_year, cohort_month, month_diff, number_of_users, max_number_of_users, analytics_consent, geo_location); Lastly, we do an upsert condition. So, when a row does not exist, we simply insert that row. If a row exists, it means that for that cohort, with those attributes, there is at least one user that was active.

Query efficiency

This solution is perfectly fine for an app that has a small number of users (from thousands to tens of thousands). However, as applications scale up (hundred of thousands to millions of users) so does data. This query can very easily get to the point of processing hundreds of gigabytes of data, which can be very expensive when run on a daily basis.

In order to avoid that happening in the future, we need to optimize our queries. Those that we’ve created so far are non-iterative, meaning that every time they are run, we are using all the data from the source, which in reality, we don’t need. Recent source data (such as from the last week or month) is more than enough for refreshing destination tables that are used in the dashboard. How much older data we actually need depends on how often we are refreshing the data.

Investing our time in converting queries for processing user activity from non-iterative to iterative will bring the biggest benefit here. So, in the following part, we will focus on this conversion. If you want to look at the code for converting a query from non-iterative to iterative, you’ll find it in the repository.

Converting query from non-iterative to iterative

All the queries are available in the repository, so there’s no need to get into them here. However, we will go through the logic used. It is very similar to the previous logic.

- Get one row for every active month (but using only recent data)

- We generate all dates that have happened between the last query run and today

- Cross-join generated dates with user activities, and exclude rows where year and month are different for generated date and user activity

- Do a full join with the destination table

- If a user exists, take cohort data along with attributes from the destination table

- If they do not exist, this means this is a new user, find a cohort for this user and extract attributes

- Aggregate new user activities with attributes

- Combine new users with existing users

Data quality is a prerequisite

So far, we have talked about how to handle data so that it tells us how engaged our users are, but there is one very crucial component in this whole story – data quality. It can take a lot of time and resources to set up infrastructure, integrate our application with Firebase, cover all edge cases, etc. However, if we don’t ensure the quality of our data, all that time and resources will go to waste. Low-quality data is not accurate nor reliable, and accuracy and reliability are crucial in a data-driven project.

Make your customer retention dashboard yours

A customer retention dashboard is a very useful tool to know how engaged our users are. As we have seen, the process itself is not very complex. However, bear in mind that the queries shown are not production-ready. They are written for “perfect” data, which does not exist in the real world. Accurate data brings forth many edge cases that we did not cover here for the sake of brevity.

Ultimately, each organization has different data and requirements. This article should provide a good starting point for establishing a customer retention dashboard, which is crucial for diagnosing and acting upon your customer retention data.