Since the release of ARKit in 2017, and especially the 2.0 announcement during the WWDC 2018 conference, I’ve been interested in what it can provide.

At first, I didn’t know what I was getting myself into. Coming from a computer science background with some minor experience in computer graphics and object rendering, I was expecting a lot of mathematical black magic. Turns out I was wrong.

Apple, always on the lookout to simplify things, built a framework that’s intuitive and easy to use but packed with powerful features at the same time. If you know your way around Xcode and the basics of iOS development, you could be up and running in no time, even if you haven’t touched AR or 3D modeling before.

Demystifying Augmented Reality

So what is augmented reality? A short definition I found on whatis explains it quite nicely:

Augmented reality is the integration of digital information with the user’s physical environment in real-time.

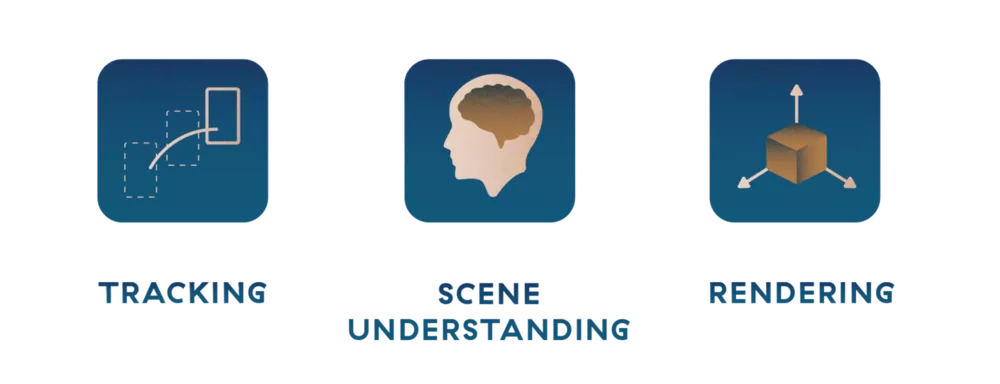

How is it actually achieved? Before jumping into the code, there are three core pillars we need to understand:

1. Tracking provides the ability to track the device’s relative position in the physical environment using camera images, as well as motion data from its sensors. This data is then processed and used to get a precise view of where the device is located and its orientation.

2. Scene understanding builds upon tracking. It’s the ability to determine and extract attributes or properties from the environment around the device, for example, plane detection (e.g., walls, tables, chairs), collision detection, etc.

3. Rendering, the last pillar of AR, is used to present digital information in the real world. For the purposes of this tutorial, I’ll be using SceneKit, but virtually any well-known engine (SpriteKit, Unity, Unreal, etc.) or a custom rendering engine can be used.
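To make the tracking pillar a bit more tangible, here is a minimal sketch of reading the device pose that ARKit computes for every frame. The `PoseLogger` class name is made up for this illustration; `ARSessionDelegate`, `ARFrame.camera`, `transform`, and `eulerAngles` are ARKit API.

```swift
import ARKit

// Hypothetical helper class (not part of the tutorial project).
final class PoseLogger: NSObject, ARSessionDelegate {
    // ARKit calls this each time it has processed a new camera frame.
    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // 4x4 matrix with the camera's position and orientation in world space.
        let transform = frame.camera.transform
        // The last column holds the translation, expressed in meters.
        let position = transform.columns.3
        // The same orientation expressed as Euler angles, in radians.
        let angles = frame.camera.eulerAngles
        print("position:", position.x, position.y, position.z, "angles:", angles)
    }
}
```

To try it, keep a strong reference to a `PoseLogger` instance and assign it to `sceneView.session.delegate`.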

ARKit in action

Now that we’ve covered the basics, let’s put ARKit into the picture. The framework does the heavy lifting regarding tracking and scene understanding. Its job is to combine motion tracking, camera scene capture, advanced scene processing, and display conveniences to simplify the task of building an AR experience.

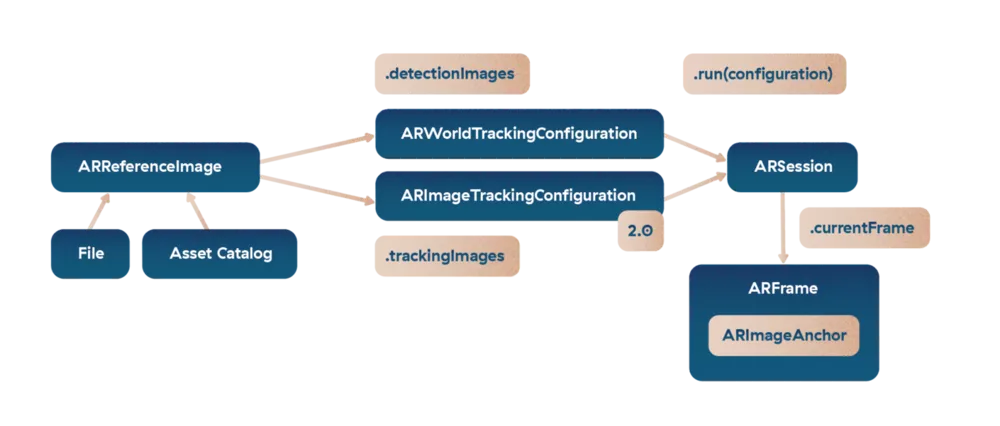

There are two ways to implement image detection through its configurations – you could use either ARWorldTrackingConfiguration or ARImageTrackingConfiguration.

World tracking works best in a stable, non-moving environment, but it struggles if the tracked image starts to move around. That’s why ARKit 2.0 introduced the image tracking configuration. It uses known images to add virtual content to the 3D world and continues to track the position of that content even when the image position changes, simultaneously providing a steady 60 frames per second.
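For comparison, this is roughly what the world tracking variant could look like. It is only a sketch: the function name is an assumption, and the rest of this tutorial sticks to ARImageTrackingConfiguration.

```swift
import ARKit

// Hypothetical helper: image detection on top of world tracking.
func makeWorldTrackingConfiguration(
    detecting images: Set<ARReferenceImage>
) -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    // Images that ARKit should look for while it tracks the world.
    configuration.detectionImages = images
    return configuration
}
```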

I think we’ve covered enough theory to get started, so let’s dive into some code. We’re going to be building an AR app for tracking and identifying Pokémon in the wild. Just like in the cartoons!

Getting our hands dirty

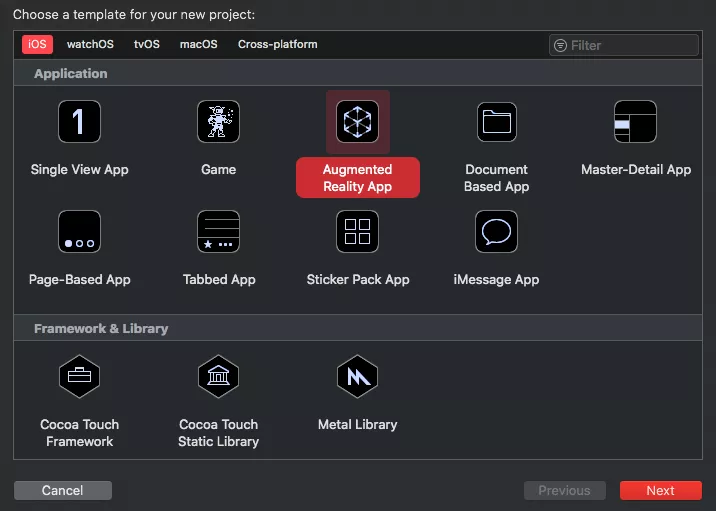

To start things off, I suggest you use the Augmented Reality app template. Xcode will create a view controller with an ARSCNView and a starting ARWorldTrackingConfiguration.

From the visual standpoint, this should be enough for this tutorial so I’ll leave the storyboard file as it is.

We’ll be focusing on image tracking, so we can pretty much delete all the boilerplate code from the view controller and start only with the basic viewDidLoad() and viewWillAppear() methods.

```swift
override func viewDidLoad() {
    super.viewDidLoad()
    sceneView.delegate = self
}

override func viewWillAppear(_ animated: Bool) {
    super.viewWillAppear(animated)
    sceneView.session.run(configuration)
}
```

In viewDidLoad() we set the sceneView’s delegate. ARSCNViewDelegate has a set of methods that we can implement to find out whenever an anchor/node pair has been added, updated, or removed from the scene. We’ll get to this in a minute.

The viewWillAppear() implementation is also rather short. It simply tells the sceneView to start AR processing for the session with the specified configuration (the optional run options are omitted, since we don’t need them here).
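Two things that the snippet above leaves out are pausing the session when the view goes away and the run options themselves. The sketch below shows both in a stand-alone view controller; the class name, the programmatic `sceneView`, and the `restartTracking()` method are assumptions for this example (the template project wires `sceneView` up through the storyboard instead).

```swift
import ARKit
import UIKit

// Hypothetical view controller used only for this illustration.
final class TrackingViewController: UIViewController {
    let sceneView = ARSCNView()
    let configuration = ARImageTrackingConfiguration()

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Stop camera capture and AR processing while the view is off screen.
        sceneView.session.pause()
    }

    // Restarts the session from scratch, discarding previous tracking state and anchors.
    func restartTracking() {
        sceneView.session.run(
            configuration,
            options: [.resetTracking, .removeExistingAnchors]
        )
    }
}
```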

Our configuration is defined separately, as a constant property initialized with a closure, for better readability. This is what it looks like:

```swift
private let configuration: ARImageTrackingConfiguration = {
    guard let trackingImages = ARReferenceImage.referenceImages(
        inGroupNamed: "Pokemons",
        bundle: nil
    )
    else { fatalError("Couldn’t load tracking images.") }

    let configuration = ARImageTrackingConfiguration()
    configuration.trackingImages = trackingImages
    configuration.maximumNumberOfTrackedImages = 2
    return configuration
}()
```

The first thing we need to do regarding the configuration is to specify which images we want to track. These images can either be loaded from a file or added to the Asset Catalog and then loaded as a group. I’ve decided to do the latter.
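If you would rather go the other route and build a reference image from a file, a sketch could look like the one below. The function name and its parameters are assumptions; note that ARKit needs the real-world width of the printed image (in meters), which the asset catalog would otherwise store for you.

```swift
import ARKit
import UIKit

// Hypothetical helper that builds a reference image from an image in the app bundle.
func makeReferenceImage(named fileName: String, physicalWidth: CGFloat) -> ARReferenceImage? {
    guard let cgImage = UIImage(named: fileName)?.cgImage else { return nil }

    let referenceImage = ARReferenceImage(
        cgImage,
        orientation: .up,
        physicalWidth: physicalWidth // width of the printed image, in meters
    )
    // The name is what we later read back from the detected image anchor.
    referenceImage.name = fileName
    return referenceImage
}
```

The resulting images can be collected into a `Set<ARReferenceImage>` and assigned to `trackingImages` in the same way as the asset catalog group.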

After the images are loaded successfully, the image tracking configuration is created and its trackingImages property is set to those loaded images. We also need to set the maximumNumberOfTrackedImages property, which specifies how many images we wish to track simultaneously. If more than this number of images is visible, only the images already being tracked will continue to be tracked until either tracking is lost or the image is removed from trackingImages. This number ranges from 1 (default) to 4. My guess is that, as hardware and software evolve, there’ll be an option to track even more images at the same time.
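Since tracking of an image can be lost at any time, it can be handy to hide the content attached to an image while it is out of sight. One possible way to do that, sketched here with a made-up delegate class, is to check the anchor’s `isTracked` flag whenever ARKit reports an update:

```swift
import ARKit

// Hypothetical delegate class; in the tutorial project these methods would live
// in the view controller that is set as the sceneView's delegate.
final class TrackingVisibilityDelegate: NSObject, ARSCNViewDelegate {
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let imageAnchor = anchor as? ARImageAnchor else { return }
        // Show the node only while ARKit is actively tracking the image.
        node.isHidden = !imageAnchor.isTracked
    }
}
```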

Let the tracking begin

As I’ve mentioned previously, ARSCNViewDelegate provides a lot of methods for mediating the automatic synchronization of SceneKit content with an AR session. We’ll be focusing on the renderer:nodeForAnchor: method implementation, so let’s look at how we can handle adding nodes to the existing AR scene.

Here is the overview of our node creation:

```swift
func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
    guard let imageAnchor = anchor as? ARImageAnchor,
          let pokemonName = imageAnchor.referenceImage.name,
          let pokemonURL = URL(
              string: "https://pokemondb.net/pokedex/" + pokemonName.lowercased()
          )
    else { return nil }

    let pokemon = Pokemon(title: pokemonName, URL: pokemonURL)
    let node = SCNNode()
    let overlayPlane = SCNPlane(
        width: imageAnchor.referenceImage.physicalSize.width,
        height: imageAnchor.referenceImage.physicalSize.height
    )

    // Add the blue-tinted overlay node.
    let overlayPlaneNode = createOverlayPlaneNode(
        for: overlayPlane,
        pokemon: pokemon,
        imageAnchor: imageAnchor
    )

    // Add the text node displaying what image is currently tracked.
    let textNode = createTextNode(for: pokemon.title.capitalized)
    textNode.pivotOnTopCenter() // Move the point from which calculations are done.
    textNode.position.y -= Float(overlayPlane.height / 2) + 0.005
    overlayPlaneNode.addChildNode(textNode)

    // Add the webView node displaying the Pokemon details.
    let webViewNode = createWebViewNode(overlayPlane: overlayPlane)
    overlayPlaneNode.addChildNode(webViewNode)

    node.addChildNode(overlayPlaneNode)
    return node
}
```

Let’s unpack what happens here, section by section. Before we get to the interesting stuff, we need to check three things:

1. the received anchor object can actually be cast to an ARImageAnchor object;

2. the image anchor’s reference image has a name (specified in the asset catalog), since that’s how we differentiate detected images;

3. a URL for finding the Pokémon on the Pokédex web page (I’m using PokémonDB as the base URL here) can be successfully created.

After passing all these checks, we can now start with the fun stuff. Firstly, we create our Pokémon object and then an empty SCNNode object which represents all the AR data we will show for that tracked Pokémon. This node will be a container node, which means its sole purpose is to hold a hierarchy of other nodes.
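The Pokemon type itself isn’t shown in this article, so here is one possible shape for it, inferred from the `Pokemon(title:URL:)` call and the `pokemon.title` access in the code above. The stored property name `url` and the convenience initializer are assumptions made for this sketch.

```swift
import Foundation

// A guess at the model type; only the `title` property and the
// `init(title:URL:)` labels are taken from the code above.
struct Pokemon {
    let title: String
    let url: URL

    init(title: String, URL url: URL) {
        self.title = title
        self.url = url
    }

    // Hypothetical convenience initializer that builds the PokémonDB URL
    // from a reference image name, mirroring the guard statement above.
    init?(referenceImageName name: String) {
        guard let url = URL(
            string: "https://pokemondb.net/pokedex/" + name.lowercased()
        ) else { return nil }
        self.init(title: name, URL: url)
    }
}
```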

The next step is adding the overlay node:

```swift
func createOverlayPlaneNode(for plane: SCNPlane, pokemon: Pokemon, imageAnchor: ARImageAnchor) -> SCNNode {
    plane.firstMaterial?.diffuse.contents = UIColor.blue.withAlphaComponent(0.5)

    let planeNode = SCNNode(geometry: plane)
    // Rotates the plane by -90 degrees
    planeNode.eulerAngles.x = -.pi / 2
    return planeNode
}
```

As its name suggests, this overlay node is laid over the tracked image. We specify its geometry to be a plane, with its width and height being the same as the image’s. After specifying the size, we can set its firstMaterial?.diffuse.contents property to any of a wide array of objects – the current SceneKit implementation allows us to use a color, an image, a layer, a scene, a path, a texture, a video player, etc.

In this implementation, we will add a blue-tinted overlay showing where the tracked image is located. Another interesting option would be to set an AVPlayer as the plane material, which would then play a video connected to the specific Pokémon. Who wouldn’t like it if their Charizard started spitting fire when detected? Since we’re covering the basics here, I’ll leave that out for now.
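For the curious, a rough sketch of the video idea could look like this. The function name and the `videoURL` parameter are assumptions, and you would still need to supply a video file for each Pokémon yourself.

```swift
import ARKit
import AVFoundation

// Hypothetical variant of createOverlayPlaneNode that plays a video on the plane.
func createVideoOverlayNode(for plane: SCNPlane, videoURL: URL) -> SCNNode {
    let player = AVPlayer(url: videoURL)
    // SceneKit accepts an AVPlayer as the contents of a material property.
    plane.firstMaterial?.diffuse.contents = player

    let planeNode = SCNNode(geometry: plane)
    // Same rotation as the color overlay, so the video lies flat on the image.
    planeNode.eulerAngles.x = -.pi / 2

    player.play()
    return planeNode
}
```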

Another useful thing to add is the text node that would tell us which Pokémon is being tracked at the time since there could be more of them.

```swift
// Named createTextNode(for:) to match the call in renderer(_:nodeFor:).
func createTextNode(for text: String) -> SCNNode {
    let sceneText = SCNText(string: text, extrusionDepth: 0.0)
    sceneText.font = UIFont.boldSystemFont(ofSize: 10)
    sceneText.flatness = 0.1

    let textNode = SCNNode(geometry: sceneText)
    textNode.scale = SCNVector3(x: 0.002, y: 0.002, z: 0.002)
    return textNode
}
```

The procedure is similar to adding the overlay node. First we need to create a geometry – this time it’s a text geometry using our Pokémon’s name. We set the proper font, flatness (accuracy or smoothness of the text geometry) and the text scaling – since SceneKit deals in meters by default, we need to scale it waaay down.
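The `pivotOnTopCenter()` call used in `renderer(_:nodeFor:)` is not a SceneKit method, and its implementation isn’t shown here. A plausible version, written as an SCNNode extension and based purely on what the name suggests, moves the node’s pivot to the top center of its bounding box:

```swift
import SceneKit

extension SCNNode {
    // Assumed implementation: positions and rotations of this node are afterwards
    // calculated from the top center of its bounding box (iOS, Float components).
    func pivotOnTopCenter() {
        let (minBound, maxBound) = boundingBox
        let centerX = (minBound.x + maxBound.x) / 2
        pivot = SCNMatrix4MakeTranslation(centerX, maxBound.y, 0)
    }
}
```

With the pivot in that spot, subtracting half of the plane’s height (plus 5 mm) from `position.y` places the top edge of the text just below the bottom edge of the overlay.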

If you’ve implemented everything correctly along the way, this is what should happen when you detect your first image:

Who is that Pokémon?

The final piece of our Pokémon puzzle is something that shows us information about the detected Pokémon using PokémonDB, right here inside our AR experience. We can do that by using our newly acquired knowledge about plane and node creation, with some slight modifications:

- instead of using a UIColor as the plane material for this new node, this time we are going to use a UIWebView (at the time of writing this article, WKWebView for some reason still isn’t supported inside ARSessions and we get just a grey, empty plane if we try to use it – Apple, some consistency would be appreciated);

- change the webView node’s position parameters to be either left or right of the detected image, to improve visibility;

- you could also modify the plane’s width and height parameters to provide better readability of the page.
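Put together, the three modifications could be sketched roughly as follows. Treat it as an illustration rather than the exact code behind the screenshots: the `pageURL` parameter, the web view frame size, and the 1 cm gap are assumptions, and UIWebView has been deprecated since iOS 12.

```swift
import ARKit
import UIKit

// Hypothetical signature: the call in renderer(_:nodeFor:) only passes the
// overlay plane, so the page URL is added here as an explicit parameter.
func createWebViewNode(overlayPlane: SCNPlane, pageURL: URL) -> SCNNode {
    // Tweak the width and height here if the page is hard to read.
    let webViewPlane = SCNPlane(width: overlayPlane.width, height: overlayPlane.height)
    let webViewNode = SCNNode(geometry: webViewPlane)
    // Shift the node one plane width (plus 1 cm) to the right of the tracked image.
    webViewNode.position.x = Float(overlayPlane.width) + 0.01

    // UIKit views have to be created on the main thread, and the delegate
    // callback may not be running on it.
    DispatchQueue.main.async {
        let webView = UIWebView(frame: CGRect(x: 0, y: 0, width: 400, height: 600))
        webView.loadRequest(URLRequest(url: pageURL))
        webViewPlane.firstMaterial?.diffuse.contents = webView
    }
    return webViewNode
}
```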

After playing around with it a little, your final result should look something like this:

And voilà, you’ve just created your first AR experience. It wasn’t that hard, was it? This sneak peek into AR is just scratching the surface of what’s possible, and as time goes on, more and more options are going to be available to us developers.

The future is augmented

To wrap things up, I’d like to share my own opinion about the future of AR. As such, take it with a grain of salt.

“Is AR actually going to be the future of mobile applications?”

This question has started to pop up lately, and even though I don’t have a crystal ball to tell the future, judging by the number of devices that currently support ARKit, it really doesn’t seem too far-fetched. Everyone with an iPhone running iOS 12 can enjoy these experiences, and younger generations are probably going to be (if they aren’t already) crazy about them.

However, the whole concept of AR on mobile phones has one major issue – the practicality of use. It’s too impractical to walk around with your phone pointed at different things all the time, experiencing the real world while interacting with its augmentations through a screen. In my opinion, the way we interact with our phones is not really going to change that much, but instead, something else will rise up to augmented reality’s call – AR glasses.

Google tried it a few years ago, but it was just ahead of its time for multiple reasons. Nevertheless, with the way mobile AR is advancing, it’s just a matter of time before another major tech player announces their own AR glasses. Judging by the information coming from this year’s CES, Apple seems to be holding its cards close to its chest and waiting for the perfect moment to jump into the AR game.

What will happen next? We don’t know that yet, but we’ll probably look back at today and think of it as a learning experience for things to come.