AI’s early days were filled with lofty promises, fears of domination, and hopes of revolution. Two years later, we’re no longer holding our breath. The hype cycle has moved on, and it’s time to talk about what comes after the spectacle.

In November 2022, the launch of ChatGPT kicked off a generative AI frenzy that had everyone in awe. Would artificial intelligence replace us? Would it kill us? Would it solve everything from the climate crisis to cancer?

Some two years later, we’re still here, and unfortunately, so are cancer and climate change. We’ve seen some impressive AI successes and plenty of flops. Now, when we talk about AI, it’s no longer with that giddy mix of excitement, fear, and uncertainty – it’s more of a ‘business as usual’ approach.

AI tools have become the new normal, which is also… normal. What we’re currently witnessing is part of a broader pattern known as the hype cycle.

It comes in cycles

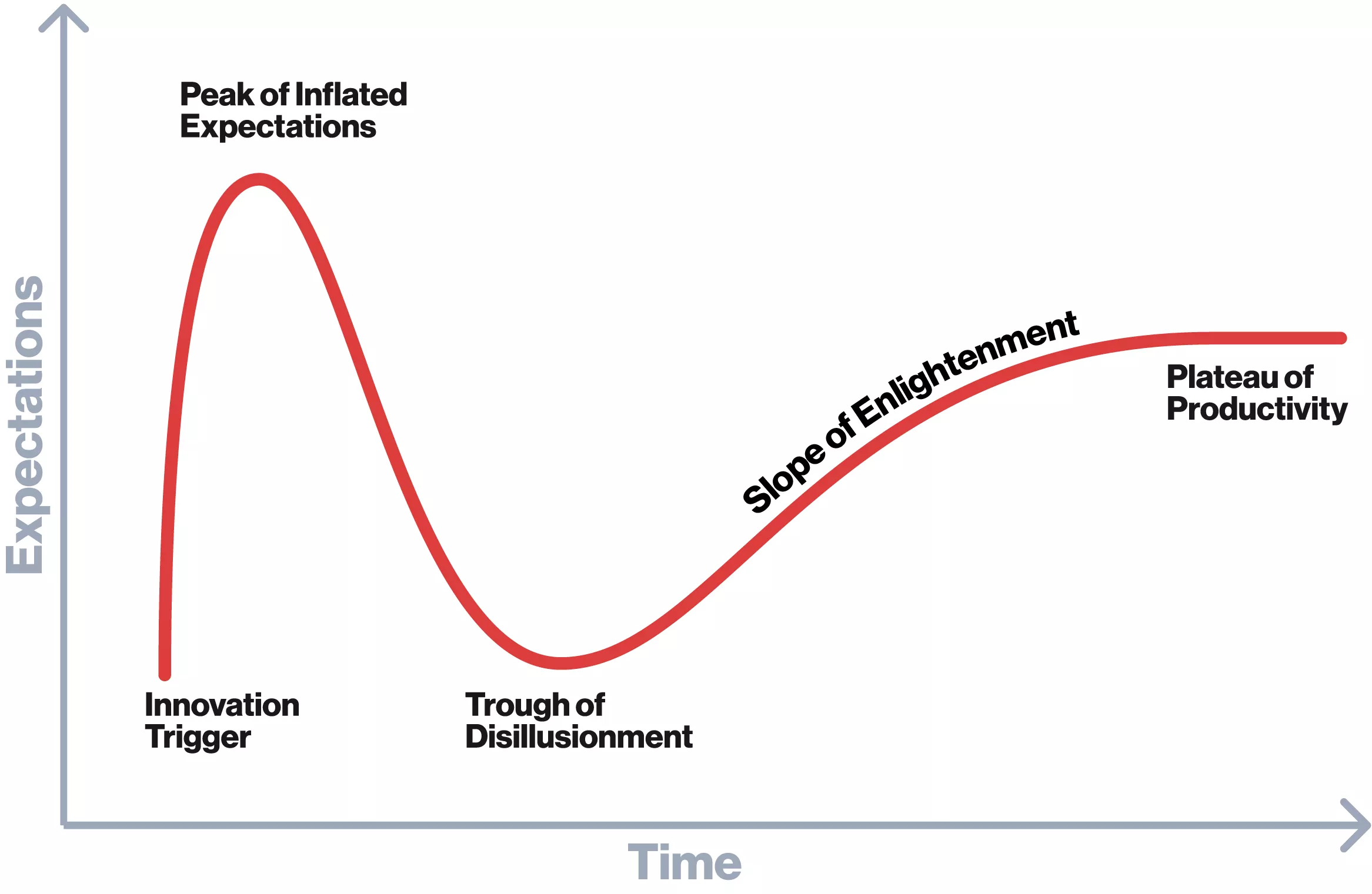

The concept of a hype cycle was first introduced by the American research company Gartner. It describes the typical progression of new technologies, from early excitement and inflated expectations, through eventual disillusionment, and finally to stable, productive use.

We’ve seen this happen with technologies like cloud computing, which was initially hyped as a revolutionary shift in IT infrastructure, faced skepticism about security and reliability, and eventually became a standard in how businesses operate today. The same pattern has played out with virtual reality, the Internet of Things, and even the internet itself.

Past the hype and into reality

So, where is artificial intelligence in the hype cycle? According to the Gartner report published this summer, generative AI has passed the Peak of Inflated Expectations and is entering the Trough of Disillusionment phase, where real challenges and limitations come to the forefront. Meanwhile, other AI technologies, such as quantum machine learning and edge AI, are still climbing toward their peak, with many advancements yet to come.

In other words, the honeymoon is over. AI has shown great potential, but now we’re in the part of the relationship where the real work begins. And the real work, in this case, includes managing risks related to AI systems and ensuring the technology is implemented safely and effectively.

One of the challenges we’re still dealing with is AI’s tendency to hallucinate, i.e., generate information that sounds plausible but is completely wrong. In enterprise settings, this isn’t just a bug or an inconvenience; it can be a lawsuit waiting to happen. Air Canada learned this the hard way when a Canadian tribunal ordered the airline to pay damages after its AI chatbot incorrectly promised a customer a refund that wasn’t in line with company policy.

Then there’s the whole matter of compliance and regulation – adhering to the ever-growing list of AI laws and regulations that countries are rolling out. Unlike the fear of AI taking over the world, which is easy to dismiss, regulatory fines are as real as it gets – and they can make a noticeable dent in your bottom line.

We’ve also come to know shadow AI – unauthorized or ad-hoc use of AI tools within an organization. With GenAI tools just a browser tab away, employees are easily tempted to use them for drafting copy, generating images, or writing code. However, by doing so, they might inadvertently be training the model on proprietary or confidential data and exposing the company to data leaks, security breaches, and other serious risks.

These challenges aren’t just theoretical – they’re obstacles that need tackling if we want to move our relationship with AI past the honeymoon phase and turn it into a long-term, stable partnership. The rose-colored glasses are off, and comfy sweatpants are on. It’s time to make this AI thing work – reliably, safely, and without surprises.