Machine learning is a frequently (ab)used buzzword attached to new software applications, often accompanied by claims like “This product is driven by data and deep learning,” “Our app is intelligent, and it adapts to your needs,” or “Neural networks are the brains behind this application.”

The term gained popularity because both computing power and machine learning methods progressed remarkably quickly, making it possible to run machine learning in mobile applications.

So, how does one get the idea to create applications based on machine learning? Or to add new features to existing applications backed by machine learning? And how does the development of a machine learning model fit into the usual application development process?

We developed a mobile app and built its main feature around machine learning. This is its story, from conception to completion.

Getting started with face recognition

A year and a half ago, the R&D team at Infinum was brainstorming projects and ideas and decided to go for a project with face recognition. The area is already well researched with many examples of successful use, but we were confident we’d find some complex problem to solve and our niche in the field.

We came up with the idea of building a mobile app that would use facial recognition in real-time video, but with some conditions. The model had to work offline, and its performance needed to be stellar.

This is a problem because only the phone’s own processing power is available for an already complex task. Do you unlock your phone with face recognition? If so, do you get frustrated when the phone’s facial recognition system doesn’t recognize you? And that task is considered “simple” because it only requires one binary decision: whether the person in front of the camera is the phone owner or not.

Our system needed to be much more complex because it had to recognize many different people. After a lot of hard work, experiments with different pre-trained face recognition models like Facenet, OpenFace, and VggFace, and several preprocessing steps to improve accuracy and speed, we finally succeeded and created a simple one-screen mobile app that recognizes multiple people in a real-time video stream.
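The difference between the phone-unlock case and ours can be sketched in a few lines. This is a minimal illustration, not the code from our app: it assumes each face has already been turned into an embedding (a plain list of floats), and the function names and the `0.8` threshold are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embeddings of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def verify(probe, owner, threshold=0.8):
    """Phone-unlock style check: one binary decision against one stored face."""
    return euclidean(probe, owner) <= threshold


def identify(probe, gallery, threshold=0.8):
    """Return the name of the closest known person, or None if nobody is close enough.

    `gallery` maps a person's name to their stored embedding.
    """
    best_name, best_dist = None, float("inf")
    for name, embedding in gallery.items():
        dist = euclidean(probe, embedding)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= threshold else None
```

Verification answers a single yes/no question, while identification has to search every known person for each detected face, which is why the per-frame cost grows with the number of people the app should recognize.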

Detecting similarity between different individuals

We were ecstatic after that triumph and wanted to apply what we learned in a fantastic app. We invited Infinum’s marketing team to help us. After a lot of brainstorming, we identified a winner.

Facial recognition models try to identify individual identities. But what if we spiced things up and tried to detect similarities between several individuals? More precisely, the similarity between parents and their children.

How many times have you been involved in a family debate about who resembles whom? Does a newborn baby look like their father or mother? This discussion often ends in disagreement. In my dear family, it takes on absurd proportions.

My whole family says that I am the spitting image of my father, except I have my mother’s blue eyes. And you thought this only happened to you, Harry Potter? Anyway, almost everybody agrees on that. My dear blue-eyed grandpa says I have his eyes.

Wouldn’t it be nice to have an objective judge to decide and solve this problem for us, preferably based on science?

Execution time

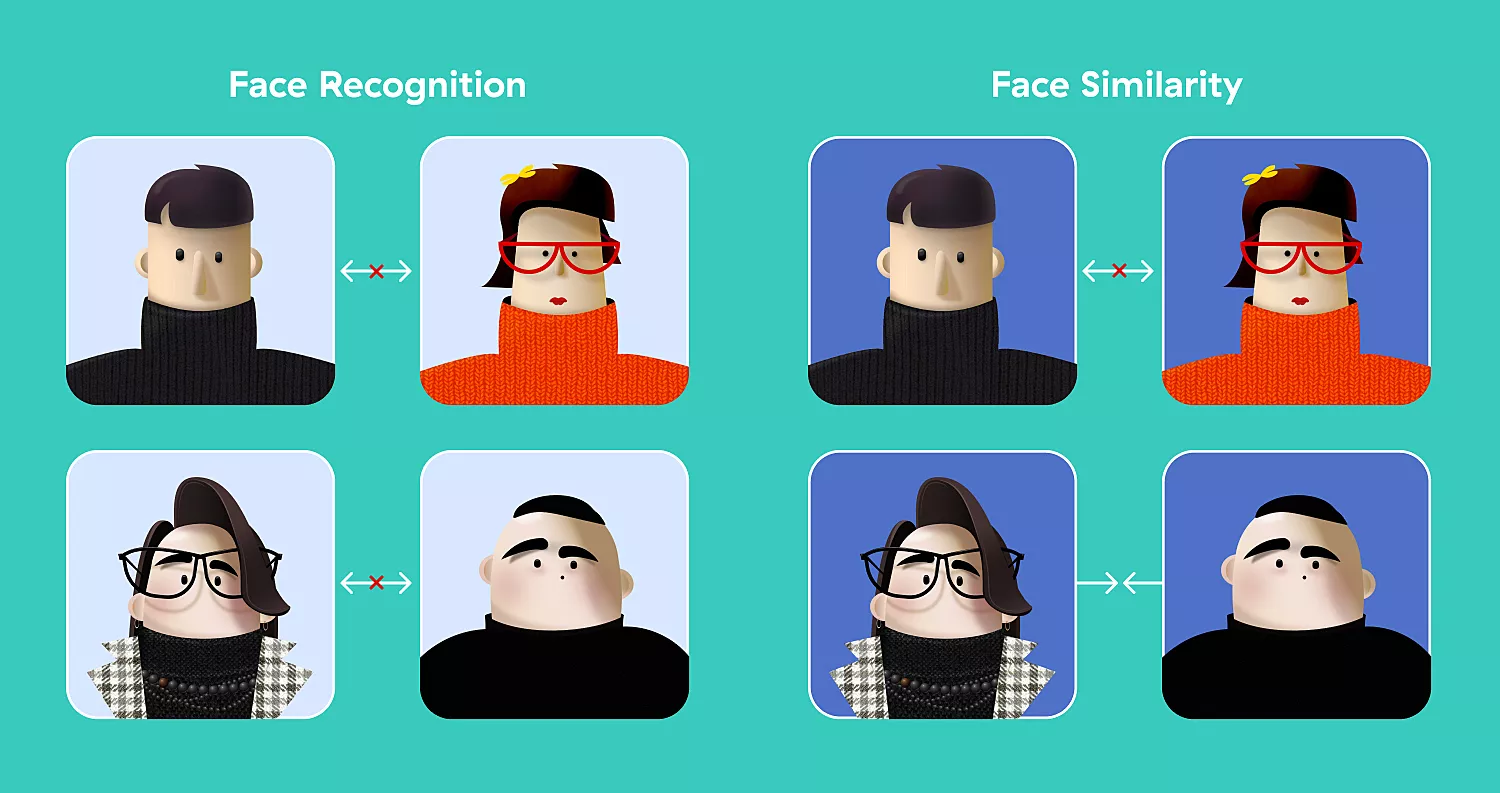

Because we reframed the task and switched from face recognition to face similarity, our R&D team had to develop new models suitable for tracing similarities between parents and their children. How do you solve a problem this complex?

Many ideas were bounced around, but most of them weren’t good enough. Still, we succeeded. I’ll describe the process from the initial solutions to the final one used in the app.

The initial idea

You could say that the face recognition task is highly correlated with the face similarity task. Just compare the model output of a child with the outputs of their parents and calculate which parent’s output is more similar to the child’s. That was our first experiment.
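That first experiment boils down to something like the sketch below. It assumes the recognition model outputs an embedding vector per face and uses cosine similarity as the comparison; the metric and the function names are illustrative assumptions, not necessarily what we used.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embeddings; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def more_similar_parent(child, mother, father):
    """Compare the child's embedding with each parent's and name the closer one."""
    sim_mother = cosine_similarity(child, mother)
    sim_father = cosine_similarity(child, father)
    winner = "mother" if sim_mother >= sim_father else "father"
    return winner, sim_mother, sim_father
```

For example, `more_similar_parent([1.0, 0.2], [1.0, 0.0], [0.0, 1.0])` picks the mother, because the child’s vector points almost in the same direction as hers.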

Although the idea was good, the evaluation results were not very promising. One reason is that pre-trained facial recognition models are unable to recognize children. We learned the other reason through our own experiments and scientific papers dealing with this problem.

Recognition models are trained so that the distance between two face images of the same person is small, and the distance between two images of different people is larger. Once the images of two different people are far enough apart, the recognition model has no incentive to rank faces according to their perceived similarity.
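One common way to train such models is a triplet loss. We are using it here only as an illustration of the effect, not as a claim about how every model we tried was trained, and the margin value is an arbitrary assumption.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Penalize the model only while the negative is not at least `margin`
    farther from the anchor than the positive (same person) is."""
    return max(
        0.0,
        squared_distance(anchor, positive)
        - squared_distance(anchor, negative)
        + margin,
    )


anchor = [0.0, 0.0]       # a photo of person A
same_person = [0.1, 0.0]  # another photo of person A
lookalike = [1.0, 0.0]    # a different person who resembles A
stranger = [3.0, 0.0]     # a different person who looks nothing like A

loss_lookalike = triplet_loss(anchor, same_person, lookalike)
loss_stranger = triplet_loss(anchor, same_person, stranger)
```

Both losses come out as zero: as soon as a different person is beyond the margin, the loss no longer cares whether they are a lookalike or a complete stranger, so nothing pushes the model to order them by resemblance.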

The second attempt

Our next idea was to find a dataset of similar-looking people and retrain our recognition models on it, so that we could model similarities between two different people. Unfortunately, no such dataset exists. We did find the next best thing, however: a dataset of families with information about kinship between two different people.

Our premise was that related people generally resemble each other more than people with no blood relation.

We developed a new model on this dataset and proceeded with the evaluation of results. It was better than the “vanilla” face recognition model, but still not good enough. The dataset had some images of younger people, which did benefit our model, but the majority of images were those of adults. Another drawback was the images’ low resolution.

Third time lucky? Large Age-Gap Face Verification

While we were exploring different datasets and problems that could be useful, we stumbled upon an interesting problem called LAG – Large Age-Gap Face Verification. LAG tries to solve the problem of face verification of specific individuals with large age gaps. In other words, it aims to verify whether an image of a child and one of an adult capture the same person.

This was very similar to our problem. We wanted to know the similarity between a child’s image and the parent’s image, and the LAG problem finds similarities between an adult (a “parent”) and the younger version of themselves (a “child”). Happily, LAG comes with a dataset, so we got started on model development.

The new model also improved on the baseline face recognition model. Still, we were not satisfied and wanted something better. We also tried to combine the kinship model and the LAG model, but the increase in performance was not significant.

The solution in its final form

We discussed how to achieve satisfactory results and agreed that the main problem was the lack of representative data. Both the LAG and kinship datasets had too few images of children. Also, although they are great ideas, we found that both the LAG and kinship problems are slightly different from the problem of finding similarities between children and their parents.

For the problem we were trying to solve, the model needed to be trained directly on that problem. With the help of Infinum parents, we were able to collect a dataset of parents and children, which we believed was big enough for training a new model. The evaluation showed that this new model was not only significantly better than the baseline model but also considerably better than both the kinship and LAG models. Finally, we had a model for our application.

Along with models for face similarity, we created models for eye similarity and nose similarity. My dear mother can now finally prove that I have her eyes.

See the app in action

The final solution was incorporated into the iOS application (with the help of the CoreML framework) with the following workflow:

1. Upload images of a child and both parents. You can also add participant names for a better experience. The images are not saved or used anywhere else.

2. The application uses a face detection model to extract the face, eyes, and nose from the images of all three participants.

3. Our final solution models then calculate the features of each extracted face component for all three participants.

4. The calculated features are compared between participants using mathematical models, with similarity between a child and their parents as the result.

All the while, no images are transmitted to or stored on our servers, and all calculation is performed locally on-device.

The final result shows the similarity between a child and both parents for all three face components, along with the declared winner. The happy parent gets bragging rights forever!
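The comparison and the final verdict can be sketched roughly as follows. This is not the app’s actual code: it assumes the features for each face component are plain vectors, uses cosine similarity as the comparison, and declares the winner by averaging the three component scores. All three choices are assumptions made for the example.

```python
import math

COMPONENTS = ("face", "eyes", "nose")


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def compare_family(child, mother, father):
    """Each argument maps a component name to that person's feature vector.

    Returns a per-component similarity report and the overall winner.
    """
    parents = {"mother": mother, "father": father}
    report = {
        part: {
            name: cosine_similarity(child[part], features[part])
            for name, features in parents.items()
        }
        for part in COMPONENTS
    }
    # Assumed rule: the parent with the higher average score wins.
    averages = {
        name: sum(report[part][name] for part in COMPONENTS) / len(COMPONENTS)
        for name in parents
    }
    winner = max(averages, key=averages.get)
    return report, winner
```

The report keeps a separate score for each component, so a parent can lose the overall verdict and still win the eyes.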

R&D + the mobile team = success

Communication and cooperation between R&D and the mobile team were similar to the usual communication between the backend team and the mobile team. R&D provided a model and defined the expected input and output. The mobile team called our model while following defined rules.

At first, the only difference was that the mobile team requested information from models rather than from a backend API. But an important thing we learned from this project is that R&D needs to complete a research phase first.

The development phase that follows involves all parties, but it should start only if the research yields positive results.

The effectiveness of machine learning projects depends heavily on data quality, the nature of the problem, and whether the data is representative of the problem. We rushed into the development phase without meeting these conditions, so at one point we faced the possibility that we had wasted the design and mobile teams’ resources.

Risk-takers are deal-makers

This uncertainty is one of the biggest problems in landing new machine learning projects. It complicates negotiations with potential clients because you often can’t be sure whether a project or idea is even feasible with the existing data and infrastructure.

In a nutshell, a machine learning project for a client can have three outcomes:

- The solution works as the client wished.

- The client’s problem is impossible to solve with machine learning.

- The solution is good enough to help the client, but not as great as they expected.

This makes it hard to land new jobs for machine learning teams, so they often need to work on internal projects to sharpen their skills and stay in touch with new technologies and methods in the field.

An entire app built around machine learning

Machine learning has a huge impact on the development of mobile and web applications today. Sometimes you need it to optimize some part of the application to improve its performance or increase app retention (see our previous blog posts). But sometimes, you build your whole application around machine learning.

By giving yourself complex challenges and hard tasks, you can stumble upon an idea that can be translated into an application.

And hard as it may be, getting an innovative idea for an application is only the beginning. Next, you need to verify if your idea is feasible with the available data and machine learning methods.

If the answer is yes, you need a setup for testing the performance of the different models and approaches that could become your solution. All this requires a structured approach to machine learning projects that differs from the usual software engineering projects. But don’t be discouraged. You learn by trying.

Oh, and if you want to try the application described in this post and settle some century-long arguments, it goes by the name of Got Your Nose and it is available for iOS devices.

A big thank you to Marko Kršul for creating the visual identity of the Got Your Nose app.