From Eye Tracking and Music Haptics to CarPlay updates, the new accessibility features in iOS 18 promise to transform how users interact with their devices, further strengthening Apple’s position as an accessibility powerhouse.

Apple’s history of pioneering accessibility features for iOS dates all the way back to 2009, when the iPhone 3GS introduced VoiceOver. It is still a flagship feature and typically the first accessibility feature that app developers add support for.

In May 2024, 15 years after the initial VoiceOver release, Apple announced new accessibility features for iOS 18 and iPadOS 18, coming this fall. They are among the most innovative and complex the company has introduced so far.

Since we got the opportunity to try out the features during beta testing, we can confirm from experience that they are indeed quite impressive. Have you ever imagined being able to control your phone using just your eyes? Or feel the music? By leveraging the power of artificial intelligence, Apple’s ecosystem is becoming more accessible to even more people, and this year feels like a major milestone in the Cupertino company’s accessibility journey.

Eye Tracking

Eye Tracking, a truly revolutionary feature originally developed for Apple Vision Pro, has made its way to iOS and iPadOS. Designed for people with physical disabilities, it offers a completely new way of controlling an iPhone or iPad. Users can navigate through app elements and use Dwell Control to activate each one, accessing additional functions such as physical buttons, swipes, and other gestures using only their eyes.

Eye Tracking takes less than a minute to set up and uses the front-facing camera together with on-device machine learning to detect and interpret eye movements. It comes with a set of gestures, like looking at specific areas or blinking, and you can customize its sensitivity and accuracy to your preference.

Further, Eye Tracking can conveniently be triggered from shortcuts or Siri and is integrated with AssistiveTouch, a virtual, on-screen menu that allows users to perform various actions, such as accessing the Home screen, adjusting volume, taking screenshots, and using gestures, without needing to use physical buttons.

Having tested the feature in the very first beta release, we must admit that it still feels a little rough around the edges. However, it will undoubtedly prove very handy in many use cases, which can also be said for Music Haptics.

Music Haptics

Music Haptics introduces a new way for people who are deaf or hard of hearing to experience music on iPhone. When it is turned on, the iPhone’s Taptic Engine plays patterns of taps, textures, and vibrations in sync with the audio.

The feature not only enhances the Apple Music experience but can add another dimension to sound in third-party apps. Developers can take advantage of Apple’s API and add Music Haptics to their own music applications.

We should note that this is an iPhone-only feature, since iPad lacks the Taptic Engine that powers it.

Listen for Atypical Speech & Vocal Shortcuts

Listen for Atypical Speech is new in iOS 18, building on the speech features Apple introduced in iOS 17 for users who are nonspeaking or at risk of losing their ability to speak. It uses on-device machine learning to recognize a user’s speech patterns, improving speech recognition for a wider range of speech.

The feature is primarily intended for people with acquired or progressive conditions that affect speech, such as cerebral palsy, amyotrophic lateral sclerosis (ALS), or stroke.

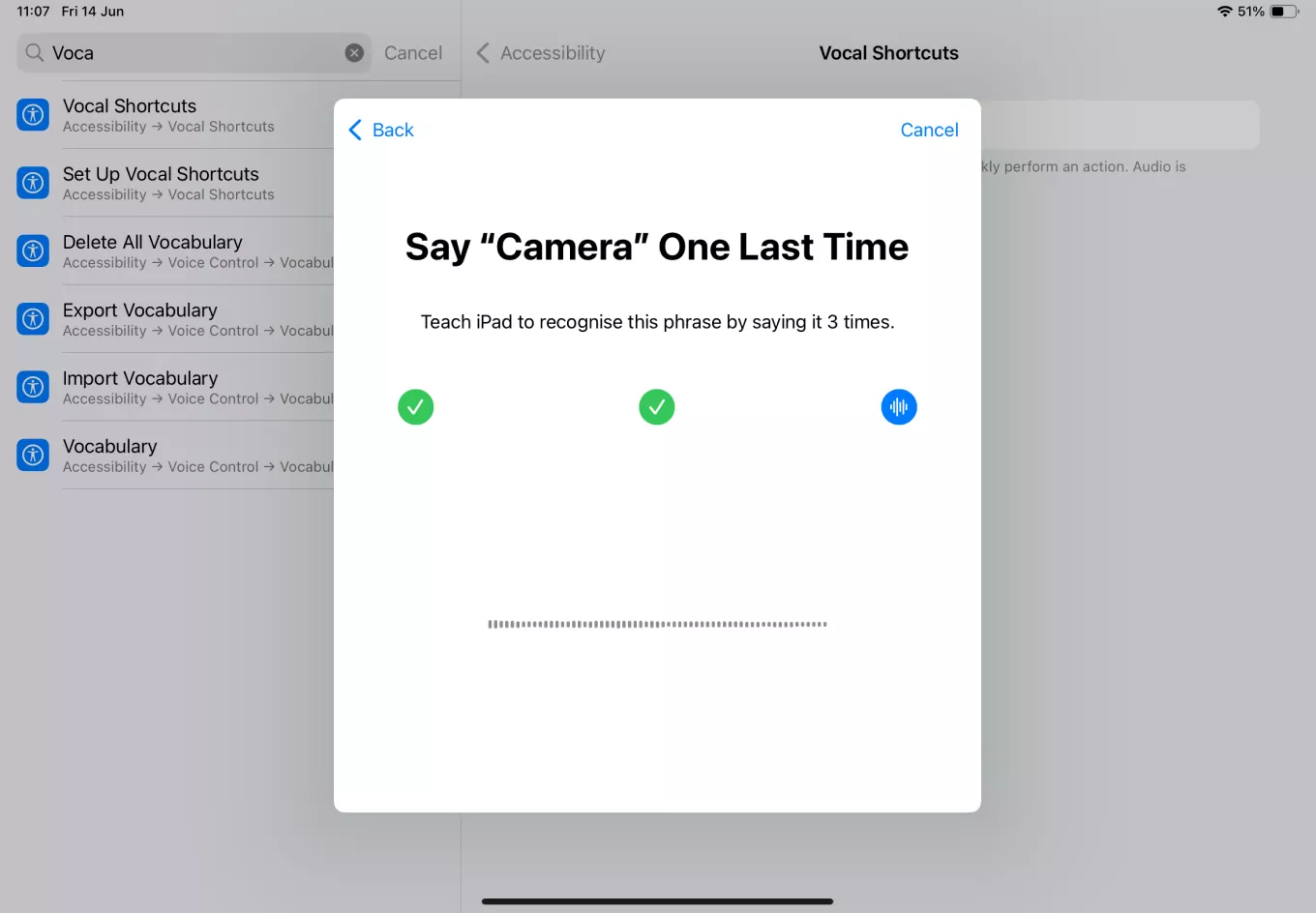

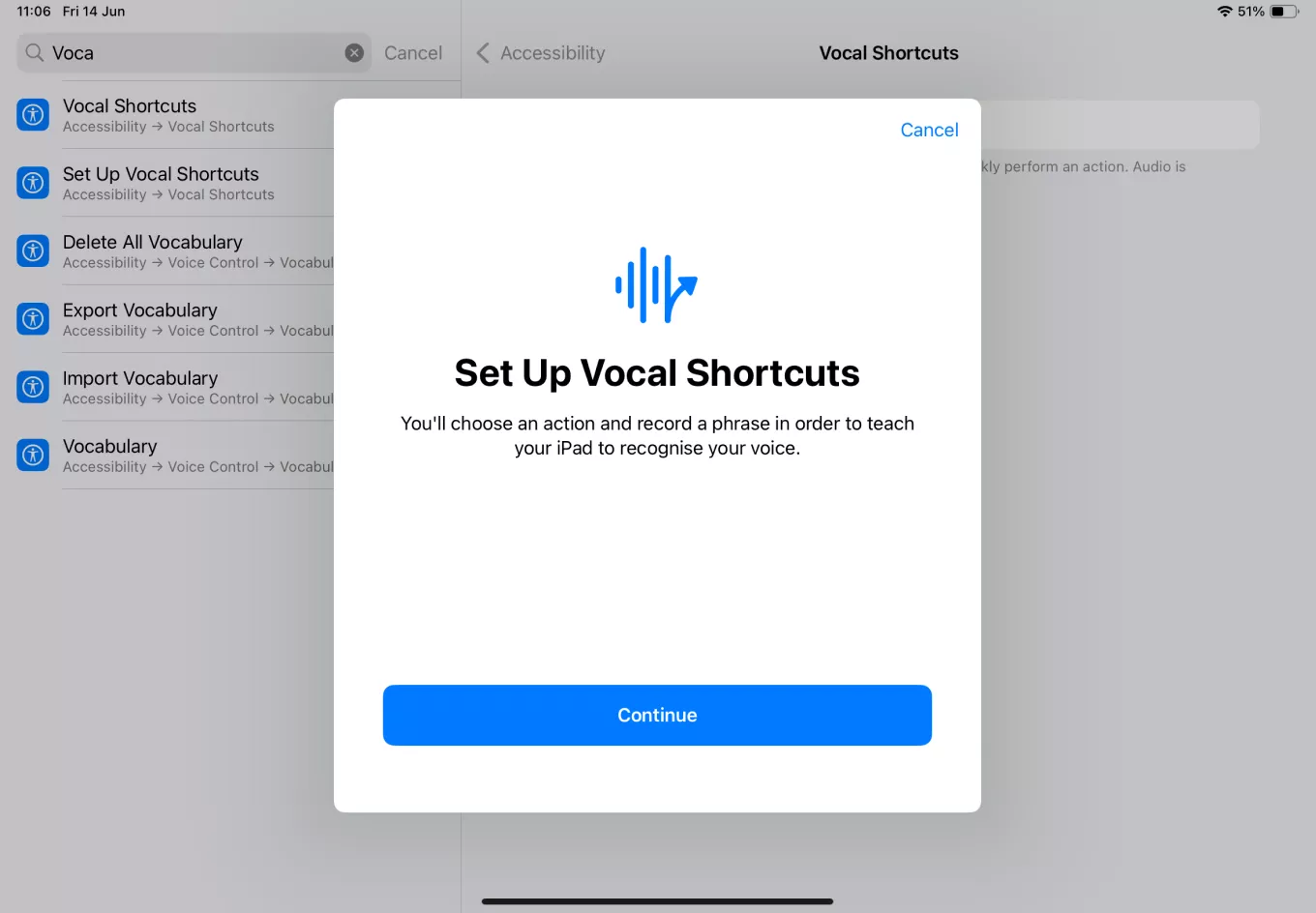

In close connection with this, Vocal Shortcuts lets users assign custom utterances that Siri can understand to launch shortcuts and complete complex tasks hands-free.

This is a perfect example of how an accessibility feature can prove useful not only to people with disabilities but to a far wider group of users. Performing specific actions using voice controls can be very helpful in a number of professional environments, such as medical institutions, car repair shops, or manufacturing facilities, or simply in everyday situations when our hands are occupied, like when we’re cooking or driving.

Vehicle Motion Cues

Motion sickness is usually caused by a conflict between what we see and what we feel, and it can make using a device in a moving vehicle uncomfortable or impossible for some people.

Vehicle Motion Cues can be set to appear automatically or turned on and off in Control Center. When active, animated dots along the edges of the screen move in sync with the vehicle’s motion, reducing the sensory conflict without getting in the way of the main content. Using built-in sensors, the device recognizes when the user is in a moving vehicle and responds to its movement accordingly.

Even though I can’t remember the last time I felt motion sickness (and setting aside the fact that Apple is helping us stay glued to our phones even on road trips), this feature works well and has the potential to help many people.

CarPlay

If you own a vehicle that supports CarPlay, you’ll be able to use Voice Control to navigate the interface and manage apps hands-free. In addition, CarPlay now supports Sound Recognition, which alerts drivers and passengers who are deaf or hard of hearing to important auditory cues such as car horns, sirens, and other noises.

Colorblind users will benefit from Color Filters, which come to CarPlay along with other visual accessibility features such as Bold Text and Large Text, making the interface easier to read and use.

Other accessibility enhancements

Aside from introducing new accessibility features in iOS 18, Apple announced a number of useful updates to some of the existing ones.

VoiceOver

Users who are blind or have low vision will benefit from new voices, a flexible Voice Rotor, customizable volume control, and the ability to customize keyboard shortcuts on a Mac.

Magnifier

Magnifier gains a new Reader Mode and easier access to Detection Mode using the Action button.

Braille

Braille Screen Input gets a faster way to start and stay in the mode, Japanese language support, multi-line braille with Dot Pad, and the option to choose different input and output tables.

Switch Control

Switch Control will let users turn the cameras on their iPhone or iPad into switches by recognizing finger-tap gestures.

Hover Typing

Users with low vision will be able to display larger text in preferred fonts and colors when typing in text fields.

Personal Voice

Originally introduced in iOS 17, Personal Voice will now be available in Mandarin Chinese, and users who have difficulty pronouncing or reading full sentences will be able to create a Personal Voice using shortened phrases.

Discover Apple’s new accessibility features in iOS 18 this fall

Over the past 15 years, Apple has innovated continuously in the accessibility space, designing a variety of features that benefit all users, but especially those who rely on them the most. Now, by leveraging artificial intelligence for new accessibility features in iOS 18, Apple is unlocking new ways to interact with its devices, making them even more intuitive and impactful.

With Eye Tracking and Music Haptics at the forefront and a heap of accessibility features to boot, the iPhone strengthens its position as the industry leader in accessibility. Most of these features will be available with the release of iOS 18 and iPadOS 18 this fall, promising an even more inclusive and user-friendly experience for everyone.