Whether it’s live or remote, synchronous or not, a design review makes sure your carefully envisioned designs make it to implementation safely. We take you through the process and share tips on how to make the most of your reviews.

As a designer, you hand off your work to the development team, and they move on to implementing your designs. Can you close Figma and call it a day? Not if you want to make sure what you’ve created is executed correctly and meets the outlined requirements and specifications.

This is where a design implementation review comes in.

Design implementation reviews, or design reviews for short, help bridge the gap between designers, developers, and software testers by keeping everyone on the same page. As an essential part of the design process, they help ensure your designs are implemented exactly as intended.

What is a design review?

A design implementation review evaluates the current state of a project’s implementation against the design. Reviews should start early in the development process and continue as the work progresses.

The golden rule is: review early and often. Identifying potential bugs and UI issues early in the process reduces the chance of major problems going unnoticed until later stages of development.

Moreover, it is far more cost-effective to make changes early on than once the product has been built or has reached an advanced stage of development. That said, reviews and feedback remain useful and important for the product’s success at any stage of the digital product development process.

At Infinum, we build a wide range of digital products, including IoT, banking, and telecom apps, as well as complex websites and management systems. Talking to my designer colleagues about the design review processes we use on our projects, I learned that while there are some similarities, we approach certain things differently.

We concluded that there is no one-size-fits-all approach to design implementation reviews and that the process you use should be adapted to the specific needs of your project. Based on our experiences, however, we identified some best practices that have proven to be effective.

How often should you do design reviews?

The frequency of design implementation reviews can vary depending on the project’s complexity, timeline, and the team’s workflow.

Regular check-ins throughout the design and development process are good practice. They can be scheduled around project milestones, e.g., after the initial design phase, once specific features are developed, or when new components are completed.

In Agile development methodologies like Scrum, design implementation reviews are often integrated into the sprint cycle, with designers, developers, and software testers getting together to conduct a review at the end of each sprint. Reviews can also be tied to individual user stories or included in the Definition of Done (DoD) for specific frontend tasks.

Synchronous design reviews – live or remote

As the name suggests, synchronous design implementation reviews are those where everyone involved takes part at the same time. The project’s designer, developers (at least one per platform), and software tester get together, pull the latest build, and compare it against the design documented in Figma.

Back in the pre-pandemic days, live reviews at the office were the usual way of going about it. Nowadays, everybody has grown accustomed to remote reviews over Zoom or Google Meet. Whether they take place live or remotely, the gist is the same: designers or developers walk everyone through the design or demonstrate how it’s being implemented.

Just like everything in life, synchronous design reviews come with several advantages and disadvantages, depending on how they are conducted and the specific context of the project.

Pros

Immediate feedback

With synchronous design reviews, feedback is available immediately, which helps to identify and address issues promptly and reduces the chances of a misunderstanding.

Faster decision-making

Synchronous reviews provide clarity, ensuring that everyone understands the design vision and the requirements, which helps you make quicker decisions about design changes or improvements.

Minimized risk of misinterpretation

Compared to asynchronous feedback methods, discussing the implementation together minimizes the risk of miscommunication or misinterpretation, since any questions can be cleared up on the spot.

Live-fixing minor issues

Sometimes, minor issues can be resolved during a meeting or a call. If we notice a small detail that can be easily fixed, we can take care of it immediately instead of creating a task and addressing it later.

Improved relationship between design and development

Reviewing the design implementation together regularly can improve the relationship between design and development by helping each side better understand the other’s work.

Cons

Time-consuming

Design reviews can be quite time-consuming, especially on complex projects, since they require several team members to set aside time simultaneously.

Scheduling bumps

Scheduling meetings can also be a challenge in itself and lead to delays. Having a designated time slot is the key to minimizing scheduling bumps with synchronous design reviews.

The pressure of on-the-spot feedback

Sometimes, the real-time nature of live or remote design reviews can create pressure. Some team members may be uncomfortable with on-the-spot feedback or live-fixing bugs.

Pro-tip for synchronous design reviews: Regardless of whether reviews are conducted live or remotely, it is good practice to determine who is responsible for taking notes during the meeting. This ensures that nothing important is missed and makes it easier to assign tasks for development fixes after the meeting.

Asynchronous design reviews

There are times when scheduling live or remote reviews can be a hassle. Asynchronous design implementation reviews offer a way to review and give feedback on a design or its implementation without everyone having to be available at the same time. This can be especially convenient for distributed teams across different time zones or when stakeholders need more time for a comprehensive evaluation.

Providing or receiving feedback in your own time, at your own pace, sounds a lot less scary than doing it in real time. That is part of the reason why this approach is commonly used not only with fully remote teams but also with hybrid ones. However, there are some disadvantages to keep in mind as well.

Pros

Saving time and effort

Eliminating the need to coordinate meeting times saves a lot of time and effort.

Flexibility

The designer can provide feedback on their own schedule, accommodating the development team’s time zones and working hours.

Written feedback

Written feedback provides a clear and permanent record of the review process.

Iteration

Asynchronous reviews can support as many rounds of feedback as needed, allowing for iterative improvement. Developers and software testers also have more time to respond to feedback without feeling pressured, and they can work through tasks at their own pace.

Cons

Issue resolution time

Asynchronous reviews may lead to delays in issue resolution, since participants are not available simultaneously to discuss and address concerns in real time.

Potential for miscommunication

Since there is no opportunity for immediate clarification or real-time discussion of feedback points, there is a higher risk of miscommunication or misinterpretation of the written feedback.

Reduced collaboration

Without real-time communication, there’s less dynamic interaction among team members. Collaborative problem-solving and spontaneous discussions are often more challenging in an asynchronous setup.

Having gone through all the pros and cons, we should note that there is no right or wrong way of going about design implementation reviews – it’s best to adapt the process to the project’s requirements. For some features, synchronous reviews (live or remote) work better, while for others, we can review the implementation just as thoroughly asynchronously.

How to make the most of asynchronous design reviews

To help make your async reviews more efficient, collaborative, and conducive to producing better outcomes, I’ll share the practices we’ve identified as useful through our years of experience in project work.

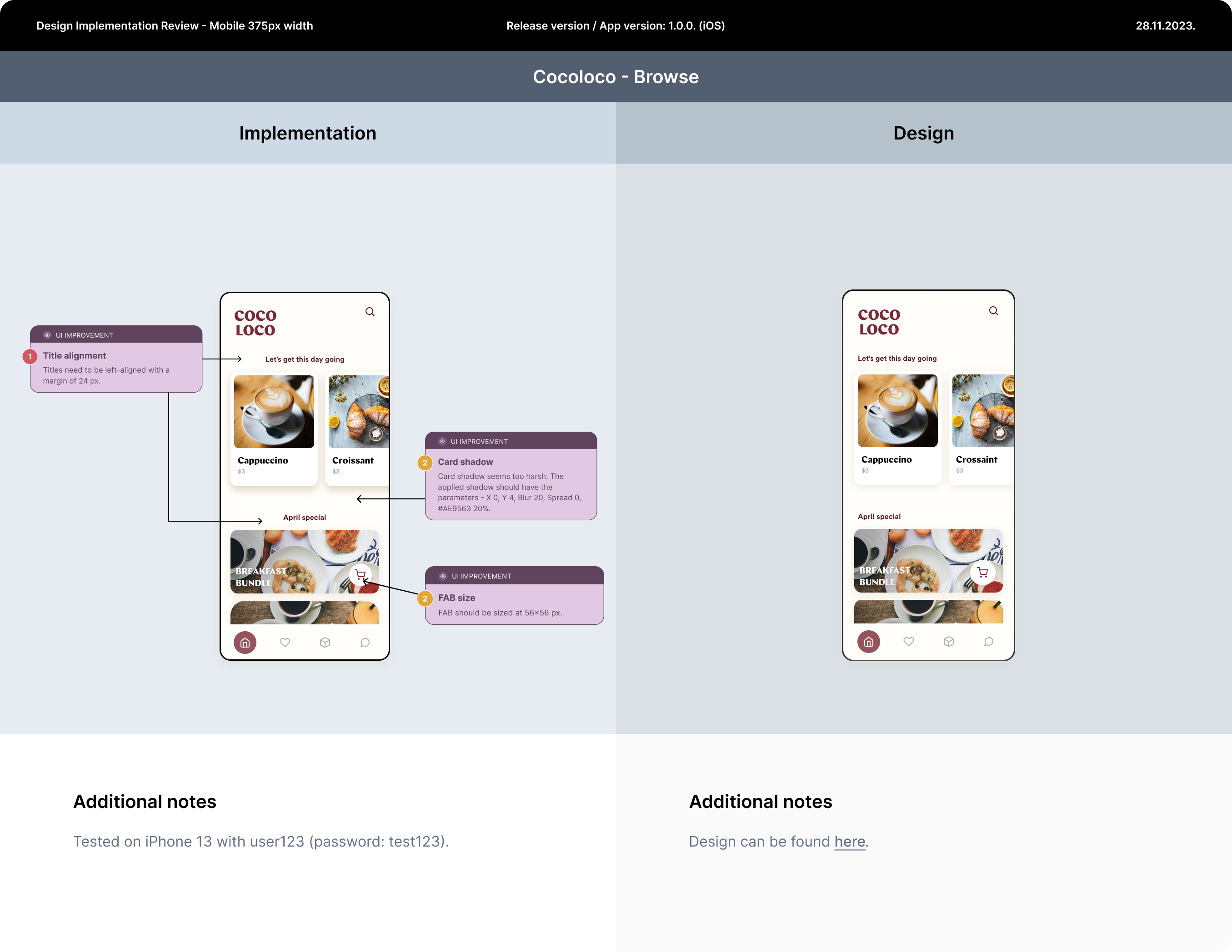

A common method for asynchronous reviews is for designers to compare screenshots of the implementation with the original designs in their preferred design tool, such as Figma or Sketch. Here are a few general tips to help you on your way:

- Before starting the review, note which build you are using. Make sure you have the latest build installed on your testing device so you don’t end up reporting bugs and issues that have already been fixed.

- Make sure to take screenshots while going through flows. Alternatively, you can screen-record the flows and then take screenshots of specific frames.

- If you’re doing a design implementation review for mobile, make sure to compare the implementation on iOS and Android.

To make the whole process simpler, we have created a template for asynchronous design implementation reviews – ready to be used on your next review.

When we do reviews, we find it best to categorize issues and fixes into three groups: things that must be fixed, things that should be fixed, and “nice-to-haves”. This categorization helps developers prioritize tasks and fix critical issues first.

- “Must fix” items are typically critical UX or UI issues that are likely to disrupt the user experience.

- “Should fix” items are usually UI issues caused by a slight deviation from the original design during implementation.

- “Nice-to-haves” are improvements or fixes that are not critical but can enhance the quality, usability, or performance of the whole product.

Make sure to document all the necessary changes with as much detail and clarity as possible so developers understand exactly what needs to be improved. Your design review documentation can include short notes next to the screenshots you have taken, along with more detailed explanations wherever a short note isn’t enough.

After annotating the implementation and design screens, you will need to align with the developers and testers about task handling, development fixing, and further testing.

When you’re assigning a task to the development team after a review, make sure to link the Figma file containing your design review documentation. To keep files organized and clear, it is best to have a separate Figma file just for design implementation reviews. Once the developers have received the feedback, a follow-up meeting may be needed to discuss and clarify individual points and make decisions based on project needs or technical limitations.

Checking implementation during testing phases

A helpful practice I picked up from a colleague is conducting “mini design implementation reviews” during the testing phases.

While testing flows and functionalities, our software testers either screen-record everything or take screenshots, which they attach to the tasks they create for the development team. Whenever they report issues in those tasks, I look at the attached screenshots or videos and sometimes catch UI issues that also need fixing.

“Mini design reviews” can be a great addition to your usual review practice, but keep in mind that they cannot replace a comprehensive review.

Review as you see fit

With design implementation reviews, there is no “one size fits all” solution. You should always choose an approach that fits your project’s unique characteristics and requirements.

Moreover, there’s no need to stick with only one approach. You can combine synchronous and asynchronous design implementation reviews to take advantage of their benefits in different project phases. Flexibility is key: adapt your review approach as the project progresses and based on feedback from team members.