We tested the abilities of new LLM and AI tools by examining whether ChatGPT’s GPT-3.5 Turbo model can build a Flutter app from scratch, and learned something about using AI in development along the way.

With new LLM and AI tools like ChatGPT and GitHub Copilot proving helpful in more and more use cases, AI assistants are gaining ground in development. Generated code can be pretty helpful… most of the time.

This got me wondering: if AI tools can generate useful code snippets, could they generate a whole file? Or a whole project? Some development-focused agents, like smol-developer, can already build a web application.

Wanting to find out just how far these new LLM tools can take us, I decided to examine if they can build a Flutter application from scratch.

Building an AI Developer for Flutter in Flutter

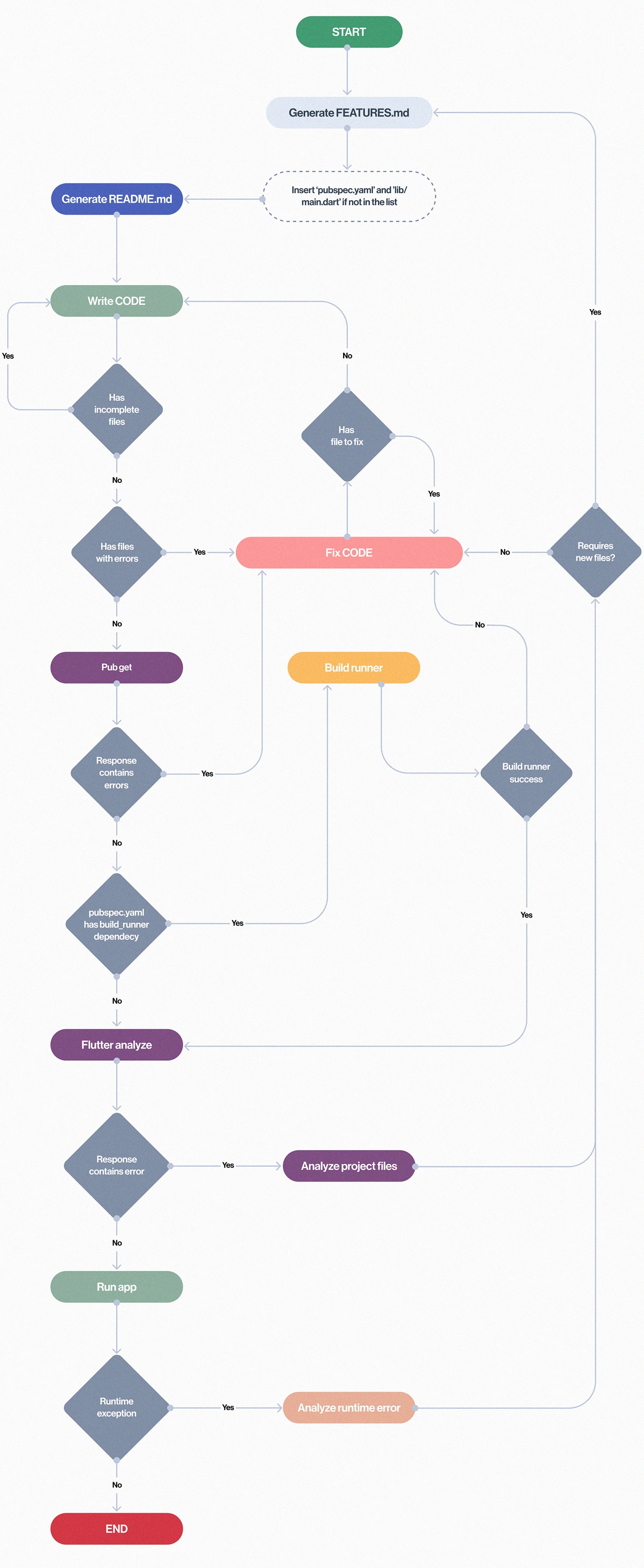

As a Flutter developer, I naturally decided to try to create a Flutter application that can build and run other Flutter applications. The idea was to come up with a workflow that simple agents could follow to eventually build an application. Since we are working with agents, we have to parse the responses we get and then feed them into the next steps.

The workflow would go like this:

1. Generate project documentation.
2. Use the generated results with other agents. When the Write code agent finishes its task, we simply take the whole response and save it under the corresponding file name (for example, some_name.dart). We repeat this for every file.
3. When all the files are complete, run flutter analyze and flutter build on the project. If any errors occur, we use agents that receive the current project documentation along with the error message. This type of agent decides what needs to be fixed.

Fixing code works almost the same as writing code with an agent. The only difference is that here, we also provide the old code for reference, together with the error messages and the notes from the Analyze agent. I should note that in some parts of this process, I simply trusted the AI to do the right thing.
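To make the three steps more concrete, here is a minimal sketch of the driver loop in Python. It is not the original Flutter implementation: `build_app`, `Project`, and the three callables are hypothetical stand-ins. `write_code` and `fix_code` represent the Write code and Fix code agents, `analyze` represents running flutter analyze plus the Analyze agent that maps errors to files, and `docs` is assumed to be the output of step 1.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical callables standing in for the agents and the Flutter CLI.
WriteCode = Callable[[str, str], str]                        # (docs, file name) -> Dart source
Analyze = Callable[[Dict[str, str]], Dict[str, List[str]]]   # files -> {file: [errors]}
FixCode = Callable[[str, str, str, List[str]], str]          # (docs, file, old code, errors) -> Dart source


@dataclass
class Project:
    docs: str                                             # output of the documentation agents
    files: Dict[str, str] = field(default_factory=dict)   # path -> Dart source


def build_app(docs: str, file_names: List[str], write_code: WriteCode,
              analyze: Analyze, fix_code: FixCode, max_rounds: int = 5) -> Project:
    project = Project(docs)
    # Step 2: one Write code call per file; the whole reply is saved as that file.
    for name in file_names:
        project.files[name] = write_code(docs, name)
    # Step 3: analyze, fix whatever was flagged, and repeat until clean.
    for _ in range(max_rounds):
        broken = analyze(project.files)
        if not broken:
            return project
        for name, errors in broken.items():
            old_code = project.files.get(name, "")  # a flagged file may not exist yet
            project.files[name] = fix_code(docs, name, old_code, errors)
    raise RuntimeError(f"project still has errors after {max_rounds} fix rounds")
```

Passing the agents in as plain functions keeps the loop testable with fakes before any real LLM calls are wired in.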

Disclaimer: this experiment was done before OpenAI introduced function calling to its API. While functions would certainly work better here, my implementation was good enough that refactoring to functions wouldn’t bring much extra value. I used OpenAI’s cheapest model, GPT-3.5 Turbo. Note that it comes with a context limit of just 4k tokens, which becomes a real constraint when you need to describe the whole application and its architecture in order to create a single file.
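Because the prompt and the reply share that 4k window, it helps to check a prompt’s size before sending it. The sketch below uses the common rough estimate of about four characters per token for English text. The constants and function names are assumptions for illustration, and OpenAI’s tiktoken library would give exact counts instead of an estimate.

```python
CONTEXT_WINDOW = 4096   # tokens shared by prompt and reply (original gpt-3.5-turbo)
REPLY_BUDGET = 1500     # assumption: tokens kept free for the generated Dart file
CHARS_PER_TOKEN = 4     # rough rule of thumb for English text


def estimate_tokens(text: str) -> int:
    """Cheap token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(*prompt_parts: str) -> bool:
    """True if all prompt parts plus the reply budget fit in the context window."""
    used = sum(estimate_tokens(part) for part in prompt_parts)
    return used + REPLY_BUDGET <= CONTEXT_WINDOW
```

If a check like this fails, the documentation has to be trimmed or summarized before the file can be requested.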

Let’s get some agents on the task

They may sound fancy, but agents are basically just hard-coded prompts that you feed into an LLM, expecting it to generate the desired response. Since agents perform best on simple tasks, we need to really break down the application-writing workflow and help the agents however we can.
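In code, such an agent can be little more than a named prompt template plus a call to the model. In this minimal sketch, `Agent` and `WRITE_CODE` are hypothetical, the prompt wording is illustrative rather than the one used in the experiment, and `llm` is any function that sends a prompt and returns the model’s text.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Agent:
    name: str
    template: str  # hard-coded prompt with {placeholders}

    def run(self, llm: Callable[[str], str], **inputs: str) -> str:
        # Fill in the placeholders and hand the finished prompt to the model.
        prompt = self.template.format(**inputs)
        return llm(prompt)


WRITE_CODE = Agent(
    "Write code",
    "You are a Flutter developer.\n"
    "Project documentation:\n{docs}\n\n"
    "Write the complete Dart code for the file {file_name}. "
    "Reply with code only, no explanations.",
)
```

For example, `WRITE_CODE.run(llm, docs=readme, file_name="lib/main.dart")` would request a single file; a different task means a different template, and therefore a different agent.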

In this case, the desired result is a Flutter application that can build another Flutter application from a short description. There’s no need to write a full requirements file; the app can do that by itself. The requirements should come down to one simple prompt from which it can build the application. For that to work, we need a few agents that we can chain.

- Generate features agent: generates the separation of concerns, decides on state management, and generates the file tree
- Generate readme agent: generates the readme file with the app’s specification and the hierarchy for each file
- Write code agent: goes over each file and writes the code for it
- Fix code agent: fixes errors in the existing code, if any
- Analyze project files agent: if flutter analyze reports an error, assesses which files need to be fixed
- Analyze runtime error agent: in case of a runtime error, assesses it and tells us which files need to be fixed

As you can see, that’s quite a few agents. Three of them are used just for writing code in one way or another, but we need all three, since any change in the prompt requires a new agent.

Generate features and readme agents

In this workflow, the Generate features agent comes first and keeps track of our files and the architecture that we will use. From its output, the Generate readme agent produces a more detailed readme file with the desired class hierarchy.

These two files are the most important ones because other agents will use them in their prompts in some way. We do this to keep our files consistent. This way, an agent knows what other files are supposed to look like without us actually providing it with the whole code files, which would use up our 4k token limit very fast.
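A minimal sketch of this documentation chain is below. It assumes that the features agent lists full paths such as lib/models/todo.dart in its file tree, so the list of files to write can be pulled out with a regular expression. The prompts and the names `generate_docs` and `extract_dart_paths` are hypothetical, and `llm` is any prompt-to-text function.

```python
import re
from typing import Callable, List, Tuple

LLM = Callable[[str], str]

# Assumption: the file tree mentions full paths under lib/ or test/.
DART_PATH = re.compile(r"\b(?:lib|test)/[\w/]+\.dart\b")


def extract_dart_paths(features: str) -> List[str]:
    """Unique Dart file paths from the features agent's free-text reply, in order of appearance."""
    return list(dict.fromkeys(DART_PATH.findall(features)))


def generate_docs(app_prompt: str, llm: LLM) -> Tuple[str, str, List[str]]:
    # Generate features agent: architecture, state management, file tree.
    features = llm(f"Plan a Flutter app: {app_prompt}\n"
                   "List the features, the state management approach and the file tree.")
    # Generate readme agent: builds on the features output, adds the class hierarchy per file.
    readme = llm(f"Using this plan, write a README with the class hierarchy of each file:\n{features}")
    return features, readme, extract_dart_paths(features)
```

Only these two documents, not the full source of other files, are then passed to the agents that follow, which keeps each prompt within the token limit.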

Write code agent

When the documentation is complete, we can start on the code. We call the Write code agent separately for each file and have it write the code for that specific file. Once we have code for every file, we check whether the project depends on build_runner. If it does, we run the build_runner command to generate any files we need. After that, we run flutter analyze. If it reports any errors, another agent is started to tell us what needs to be fixed or whether any files need to be added.
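The post-generation step can be sketched as follows. The pubspec.yaml check is a deliberately crude line match rather than a YAML parser, and the helper names are hypothetical. `dart run build_runner build --delete-conflicting-outputs` and `flutter analyze` are the standard commands, but treating any non-zero exit code as a failure is a simplifying assumption.

```python
import subprocess
from pathlib import Path
from typing import List, Tuple


def uses_build_runner(pubspec_text: str) -> bool:
    """Crude check: does pubspec.yaml declare build_runner (normally under dev_dependencies)?"""
    return any(line.strip().startswith("build_runner:") for line in pubspec_text.splitlines())


def post_generation_commands(pubspec_text: str) -> List[List[str]]:
    cmds = []
    if uses_build_runner(pubspec_text):
        # Generates files such as *.g.dart that the written code imports.
        cmds.append(["dart", "run", "build_runner", "build", "--delete-conflicting-outputs"])
    cmds.append(["flutter", "analyze"])
    return cmds


def run_checks(project: Path) -> Tuple[bool, str]:
    """Run the commands in order; stop at the first failure and return its output."""
    pubspec = (project / "pubspec.yaml").read_text()
    for cmd in post_generation_commands(pubspec):
        result = subprocess.run(cmd, cwd=project, capture_output=True, text=True)
        if result.returncode != 0:
            return False, result.stdout + result.stderr
    return True, ""
```

The captured output on failure is what gets handed to the Analyze project files agent.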

Fix code

If we encounter any errors, we employ agents that can fix the code. The Analyze project files and Analyze runtime error agents can mark the files that need to be fixed. They also add small messages about the error and what needs to be fixed in the file.
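Before function calling, the easiest way to get a machine-readable list of files to fix was to ask for a fixed reply format and parse it. The sketch below assumes a hypothetical one-line-per-file format, `FILE: <path> | FIX: <message>`, and skips any other text the model adds around it.

```python
import re
from typing import Dict, List

# Hypothetical reply format we ask the Analyze agents to use, one line per file:
#   FILE: lib/screens/home.dart | FIX: Import TodoModel from lib/models/todo.dart
LINE = re.compile(r"^\s*FILE:\s*(?P<path>\S+)\s*\|\s*FIX:\s*(?P<msg>.+?)\s*$")


def parse_fix_requests(reply: str) -> Dict[str, List[str]]:
    """Map each flagged file to the fix messages the agent attached to it."""
    requests: Dict[str, List[str]] = {}
    for line in reply.splitlines():
        m = LINE.match(line)
        if m:  # ignore any chatter the model adds around the list
            requests.setdefault(m["path"], []).append(m["msg"])
    return requests
```

A file that appears in the result but does not exist yet can be treated as a request to add it.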

In our implementation, we also provided the whole code file. This makes sure that the agent does not forget to include a part of the code that we need.
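Assembling the Fix code agent’s prompt might look like the minimal sketch below: the project documentation, the complete old file, the raw errors, and the Analyze agent’s notes, followed by an instruction to return the whole file. The section labels, wording, and function name are assumptions.

```python
from typing import List


def build_fix_prompt(docs: str, path: str, old_code: str,
                     errors: List[str], notes: List[str]) -> str:
    """Assemble the Fix code prompt: docs, the full old file, errors, and the analyze agent's notes."""
    return "\n\n".join([
        "Project documentation:\n" + docs,
        f"Current contents of {path}:\n{old_code}",
        "Errors:\n" + "\n".join(f"- {e}" for e in errors),
        "What needs to change:\n" + "\n".join(f"- {n}" for n in notes),
        # Asking for the complete file lets us save the reply directly, like the Write code agent.
        f"Return the complete, corrected contents of {path}. Keep all existing functionality.",
    ])
```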

Run the application

If flutter analyze doesn’t find any issues, we can try to start the app. We run the flutter run command, which starts the app, and listen to its output. If any errors appear, the Analyze runtime error agent is run to determine which files need to be fixed.
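Listening to flutter run can be sketched with `subprocess.Popen`: stream the combined output, and when a line looks like an error, capture it together with the following lines (usually the stack trace) for the Analyze runtime error agent. The marker strings are a heuristic assumption based on how Flutter typically prints errors, not an exhaustive list, and `watch_flutter_run` is a hypothetical helper.

```python
import subprocess
from typing import List, Optional

# Assumption: substrings that commonly appear in Flutter's console output when
# something goes wrong. A heuristic, not an exhaustive list.
ERROR_MARKERS = ("EXCEPTION CAUGHT BY", "Unhandled Exception", "Error:")


def is_runtime_error(line: str) -> bool:
    return any(marker in line for marker in ERROR_MARKERS)


def watch_flutter_run(project_dir: str, context_lines: int = 20) -> Optional[List[str]]:
    """Start `flutter run` and return the first error line plus up to `context_lines`
    following lines, or None if the process ends without a matching line."""
    proc = subprocess.Popen(
        ["flutter", "run"], cwd=project_dir,
        stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True,
    )
    assert proc.stdout is not None
    lines = iter(proc.stdout)
    for line in lines:
        if is_runtime_error(line):
            captured = [line.rstrip()]
            for extra in lines:  # keep reading the same stream for the stack trace
                captured.append(extra.rstrip())
                if len(captured) > context_lines:
                    break
            proc.terminate()  # stop the app; the excerpt goes to the agent
            return captured
    proc.wait()
    return None
```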

The final result – success!

Finally, ChatGPT managed to build the application based on my short prompt. However, the process wasn’t trivial, and sometimes it would get stuck in a “fixing loop”, desperately wanting to fix the wrong file, which resulted in the application crashing.

It took a few tries, but in the end, I did get the app that I wanted. To make it as simple as possible, I had to significantly reduce the app’s scope and just give up on some of the things I planned. The app had a lot of issues with navigation between different screens, as well as parsing models from the API, since they usually require consistency between the files.
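One possible mitigation for the “fixing loop” (a suggestion, not something the experiment is described as using) is a guard that stops retrying once the same file and error keep coming back, or once a total fix budget is spent. `FixLoopGuard` and its limits are hypothetical.

```python
from collections import Counter
from typing import Tuple


class FixLoopGuard:
    """Stops the fix loop when the same (file, error) pair keeps recurring."""

    def __init__(self, max_repeats: int = 3, max_total: int = 15):
        self.seen: Counter = Counter()
        self.total = 0
        self.max_repeats = max_repeats  # attempts allowed per (file, error) pair
        self.max_total = max_total      # attempts allowed across the whole run

    def allow(self, path: str, error: str) -> bool:
        key: Tuple[str, str] = (path, error)
        self.total += 1
        self.seen[key] += 1
        return self.seen[key] <= self.max_repeats and self.total <= self.max_total
```

When `allow` returns False, the loop can stop and hand the problem to a human instead of letting the agent keep rewriting the wrong file.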

Can ChatGPT really build Flutter apps? Limitations apply

As this experiment shows, agents seem to work on smaller applications and can even fix the code they generated earlier. However, handling larger and more complex applications could prove problematic, since the contextual knowledge needed to build such apps may be too extensive to fit within the token limit.

The AI tool should also take into account all the utilities and existing files and implementations. Otherwise, we can easily end up with multiple implementations of the same feature in multiple places, or changes done in one file breaking other files. Also, sometimes we work on more complex features that need to use multiple files in very specific ways, and AI could easily make mistakes here.

Another important thing to note is that these AI tools are not continuously trained on new updates, so they will inevitably use old or even deprecated code. This is especially true for Flutter, since both Flutter and Dart have changed substantially in the last few years.
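A cheap safeguard is to scan generated code for APIs the model is likely to reach for out of habit. The sketch below uses a small illustrative table of Material buttons that were deprecated and later removed from Flutter, mapped to their replacements; a real list would need to come from the Flutter release notes. For some deprecated APIs, `dart fix --apply` can also migrate code automatically.

```python
import re
from typing import List, Tuple

# Illustrative only: old Material buttons mapped to their replacements.
REPLACEMENTS = {
    "FlatButton": "TextButton",
    "RaisedButton": "ElevatedButton",
    "OutlineButton": "OutlinedButton",
}
PATTERN = re.compile(r"\b(" + "|".join(REPLACEMENTS) + r")\b")


def find_outdated(code: str) -> List[Tuple[int, str, str]]:
    """Return (line number, outdated widget, suggested replacement) for each hit."""
    hits = []
    for n, line in enumerate(code.splitlines(), start=1):
        for m in PATTERN.finditer(line):
            hits.append((n, m.group(1), REPLACEMENTS[m.group(1)]))
    return hits
```

Hits can be fed back to the Fix code agent as extra notes, the same way analyzer errors are.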

AI – Terrible lead engineer, great assistant

AI certainly has a place in development; in fact, it has many potential use cases. For now, however, these tools are probably best kept within the confines of the assistant role. That way, when they give outdated or just plain weird suggestions, a human can still easily dismiss them and keep only the useful parts.

A good example would be a partial automation of the code review process, where AI could start the review as soon as the code is pushed. That way, pieces of code would get flagged and marked as high priority for human reviewers. Ideally, this would require some contextual understanding, but should still work since AI wouldn’t actually be writing or modifying the code.
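As a rough illustration of that idea, a review bot could split a `git diff` into per-file chunks, ask the model about each one, and flag the chunks it marks as high priority. The prompt wording, the `PRIORITY:` reply convention, and the helper names are all assumptions; the diff parsing relies only on the standard `diff --git a/... b/...` header and does not handle paths containing spaces.

```python
from typing import Dict, Optional


def split_diff_by_file(diff: str) -> Dict[str, str]:
    """Group `git diff` output into one chunk per changed file (keyed by the new path)."""
    chunks: Dict[str, str] = {}
    current: Optional[str] = None
    for line in diff.splitlines(keepends=True):
        if line.startswith("diff --git "):
            # Header looks like "diff --git a/lib/x.dart b/lib/x.dart"; keep the b/ path.
            new_path = line.split()[-1]
            current = new_path[2:] if new_path.startswith("b/") else new_path
            chunks[current] = ""
        if current is not None:
            chunks[current] += line
    return chunks


def review_prompt(path: str, chunk: str) -> str:
    return (f"Review this change to {path}. Start your reply with PRIORITY: HIGH if a human "
            f"reviewer should look at it first, otherwise PRIORITY: LOW, then list concerns.\n\n{chunk}")


def is_high_priority(reply: str) -> bool:
    return reply.lstrip().upper().startswith("PRIORITY: HIGH")
```

Since the model only labels and comments on the change, a wrong answer costs a reviewer some attention rather than introducing a bug.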

Further, every application requires performing some repetitive and not particularly challenging tasks. Normally, these are hard to automate because there are always newer versions that do something slightly differently. With AI, we can streamline this process.

By feeding AI tools all the information we have and giving them the latest documentation, we can automate many of these repetitive tasks.

What’s more, local models are also becoming more capable, and some can even run on phones. While they are still behind GPT-3.5, they are not that far behind, and with further development they should be able to match GPT-3.5 results. This will allow us to use a local model to keep any sensitive data private.

ChatGPT still has a long way to go to start building Flutter apps

As we’ve seen in this experiment, AI becoming the sole developer of an application is still a long way off. It struggles with building more complex apps, which is practically any app that includes more than one feature at this point. It is also doomed to always use outdated libraries and code.

However, while it may not be able to write code independently, it is already proving to be a very useful assistant. It can speed up development when used to implement some simple features, write boilerplate code, or perform repetitive tasks.

We should also be aware that these tools are improving their performance by the minute, and there is no doubt they will hold an important place in the future of development.
