Apple Vision Pro impressions are everywhere. If you want to step back from the usual user reviews and see the big 3D picture, read what a product strategist, a designer, an iOS developer, and an Android developer have to say about Apple’s trending headset.

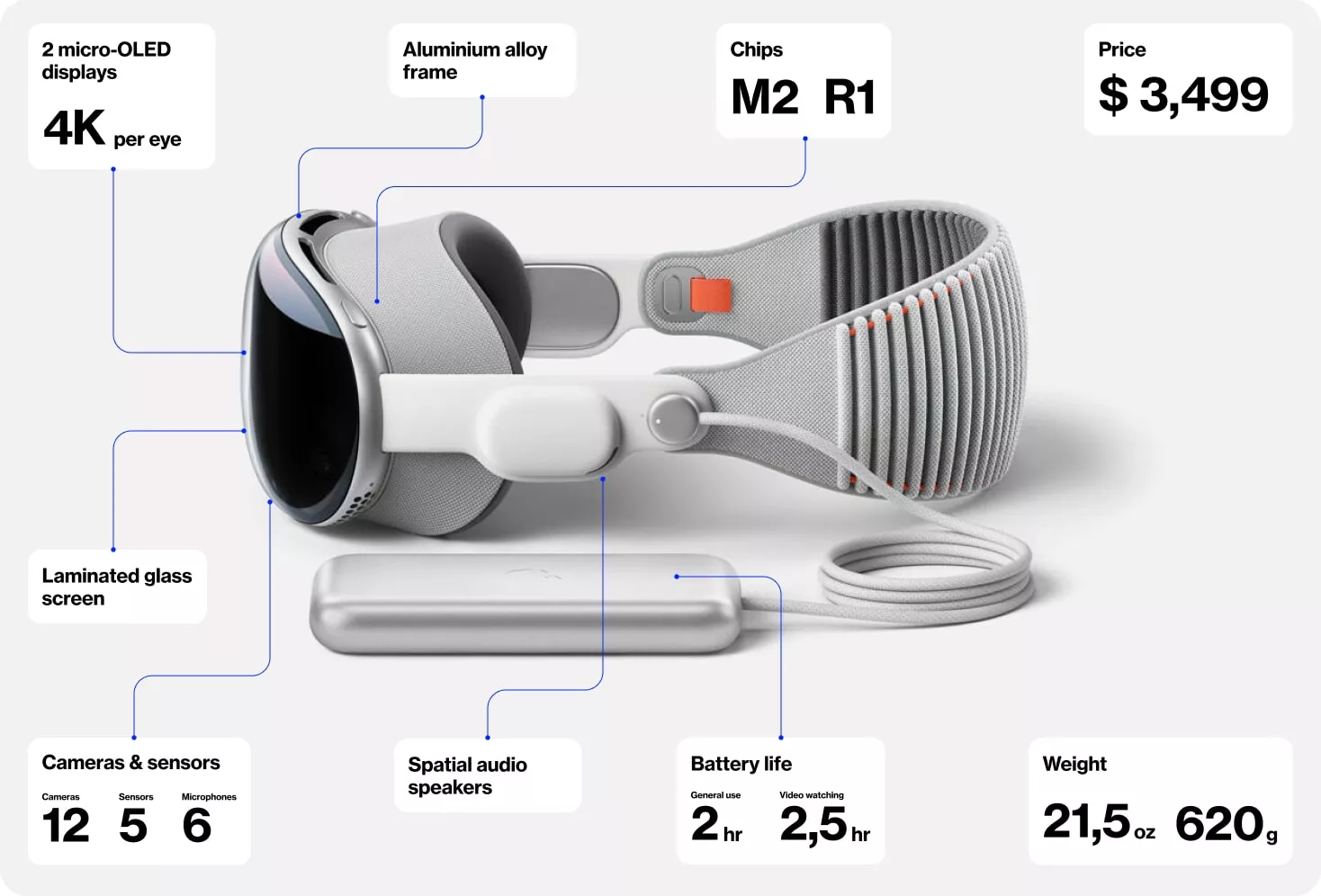

Launched on February 2, 2024, Apple Vision Pro is the Cupertino tech giant’s first venture into a completely new product category since the Apple Watch in 2015. The headset is finally out, and so are a gazillion user reviews.

As a digital agency with almost two decades of experience covering the full digital product development process, including web, cloud, and mobile app development services, we have no shortage of tech enthusiasts willing to examine the wider implications of Apple Vision Pro.

What does it mean for the extended reality (XR) landscape?

What opportunities does it open up, and what challenges does it bring?

Should you be thinking about building digital experiences for this futuristic interface?

Here’s what our experts had to say on the matter.

Apple Vision Pro impressions – from a product, design, and development perspective

Featuring:

Chris Bradshaw, Product Strategy Director

The Workshopper Pro who has personally been the secret ingredient behind many innovative product market launches. He has the answer to any product question, and that answer is “Strategy first!”

Ana Krapec, Designer

UX/UI designer who creates user-friendly retail execution apps and killer B2B platforms in her work time and is highly curious about the potential of AR/VR/XR technologies at all times.

Goran Brlas, Product Architect & Lead iOS Engineer

Seven years of experience in developing, leading, and architecting, and twice as much in being an Apple fan. He didn’t see the promise of extended reality until last summer, when Tim Cook said, “But we do have one more thing…”

Renato Turić, Android Team Director

Our Android wizard with nine years of development experience under his belt, AR/VR apps for Google Glass included. When asked to choose between Apple and Meta, he says to keep an eye on Microsoft and Google.

Chris, Product Strategist: “Apple is playing the long game”

What market opportunities does Apple Vision Pro create for businesses looking to innovate in the field of extended reality? What are the challenges?

The benefits of this innovation are extensive. It has the potential to improve various experiences – from learning, industrial design and prototyping to remote collaboration. But with each benefit comes a potential risk.

For example, if we visualize products for purchase in a virtual environment, we need to ensure their accurate representation and reconcile the potential discrepancies between the physical and the virtual world.

We also need to take into account that the Vision Pro has no ports for sharing content with non-Apple users. The Apple TV app is the default media hub, and apps like YouTube don’t exist there natively. When building for the headset, be prepared to play in the Apple ecosystem.

Are there specific sectors or industries where you see the Vision Pro having a significant impact?

Industries I see as best positioned to realize immediate value are:

- Healthcare: apps can be used for medical training, patient education, and therapeutic sessions.

- Education: enriching traditional experiences with immersive educational content.

- Retail: creating a digital experience that mirrors the physical retail environment so that customers can explore and buy products from the comfort of their homes.

- Industrial Design and Prototyping: we could expedite and simplify these processes by enabling engineers and designers to collaborate in virtual environments.

Do you think the visionOS marketplace will be crowded, or will it flop like the ones for Apple Watch and Apple TV?

The marketplace will only thrive if the headset offers highly beneficial functions for specific tasks, where it outperforms other devices. The Vision Pro is designed to eventually replace your computer, but it still has a long way to go before it reaches mass-market adoption.

I believe Apple’s strategy is to play the long game. While Meta offers a lower-cost product for mass entry, Apple employs superior technology at a higher cost to build a platform progressively. The idea is to encourage the market to follow their lead – and they’ve done this successfully in the past.

CHRIS BRADSHAW, PRODUCT STRATEGY DIRECTOR

Should businesses be thinking about integrating Apple Vision Pro into their product strategy?

Even in its early days, Apple Vision Pro has already opened up a lot of possibilities with the immersive nature of its experiences. However, businesses shouldn’t be too quick to jump on the bandwagon just because something is popular.

A strategic plan should always come first to make sure:

- The tech is an asset for the business and has a clear purpose

- We know how we will measure success

- We create a roadmap for monitoring and improvement to know what to do with the data as the results come in

When companies blindly follow a tech trend, that’s when we see time, money, and resources wasted.

Ana, Designer: “The infinite canvas calls for a perspective shift”

What innovative interaction models have you seen or would suggest for enhancing user engagement on Apple Vision Pro?

Personally, I’d love to see the integration of gamification and interactive learning features. They could make learning fun and keep users engaged, which is super important for overall enjoyment and involvement.

The Vision Pro could incorporate object recognition so users can interact with the real world. It would be great for learning or shopping experiences – you get content related to the objects you encounter.

Another suggestion would be to add feedback mechanisms like tactile responses to virtual interactions to make the experience more tangible. And what about adaptive environments? The virtual spaces could adjust dynamically based on user actions or preferences for a highly personalized experience.

Based on your impressions of Apple Vision Pro, what are the most significant challenges designers will face when designing for an extended reality environment?

We will be designing for a completely new paradigm, and it will be interesting to observe how interaction standards evolve and whether Apple’s design patterns will become the standard.

First, we will need to make a shift from designing for fixed screens to creating experiences for a boundless 3D space. We need to learn how eye movements and gesture control work to be able to shape interaction in those environments.

It’s also important to think about passthrough. People can “see” through the display, and designers need to use this feature in a way that enhances the experience rather than disrupts it.

When we are creating immersive experiences, we need to strike a delicate balance. We want them to enrich basic experiences like learning or reading a book rather than complicate them.

ANA KRAPEC, DESIGNER

We should also be mindful of motion sickness. We want to minimize discomfort, especially for users prone to this, like those who struggle with FPS games. Spatial orientation is another challenge – users need to navigate through the infinite canvas without feeling overwhelmed.

How well does Apple Vision Pro address accessibility and inclusivity in its design?

The Vision Pro does show a commitment to accessibility and inclusivity. For example, it adjusts for vision impairments such as reduced sharpness and focus issues, with features like larger text and the ability to ignore rapid eye movements.

It also tackles accessibility for blind people through the implementation of VoiceOver, a screen reader feature that provides spoken descriptions.

How do you see the field of XR design evolving over the next few years?

There will likely be much more emphasis on accessibility and inclusivity, with features like voice control and alternative text descriptions making experiences more accessible to people with disabilities.

I expect that the interaction between the physical and digital worlds will become even more seamless and natural, and their boundaries more blurred.

The experiences will be enhanced by using environmental data, and the 3D graphics and immersive video capabilities will improve further.

Devices like the Vision Pro will likely become more available and widespread, and we’ll have scenarios like watching Netflix through our mixed reality headsets. We could see films or concerts produced specifically for this type of experience.

Goran, Lead iOS Engineer: “Immersive 3D experiences for the win”

How does the app development process differ when you’re dealing with a mixed reality environment rather than traditional screen-based apps, especially in terms of user experience and interface design?

In the screen-based development that we’re used to, apps are designed for a flat, 2D space. Even without the immersive 3D experience, such window-based apps are still valid and can find their place on Apple Vision Pro, especially now that everything is new and developers are still getting their footing.

But if we want to get the most out of the device and offer something unique, we need to make a fundamental shift in user experience and interface design, focusing on 3D objects, spatial interaction, and environmental awareness.

How well does Apple Vision Pro integrate with the existing iOS ecosystem, the iPhone, iPad, and Mac?

As expected, the Vision Pro continues the trend of seamless integration within the Apple ecosystem.

One of the main strengths of Apple’s walled garden is that devices work great together. Continuity features, shared iCloud services, and compatible apps are all there from the get-go. It’s a big plus for initial adoption.

I found it really cool that if you look at your Mac’s screen while wearing the headset, a small pop-up will appear, prompting you to expand your Mac screen into the virtual space. Then you can resize and move it around.

It’s interesting that starting with the iPhone 15 Pro, you can shoot spatial videos to be watched on the Vision Pro. This is great, but it also raises a question. If I don’t have the device right now, should I still shoot them in the spatial format for later?

What are the provided SDKs and development tools for visionOS like? Are there any new tools or features that developers should be excited about?

The tools extend Apple’s existing developer ecosystem: familiar frameworks such as SwiftUI, RealityKit, and ARKit are joined by new APIs tailored for mixed reality experiences.

I wrote about ARKit five years ago, before it was cool, and these advancements are making it even more exciting. In hindsight, Apple has been preparing us for something like a headset, even though the AR experiences of the time were mostly intended for iPhone and iPad games.

Using the provided APIs differs from the traditional iOS/macOS app development process. We are no longer dealing with screens and views but 3D objects and scenes. This is much more similar to game development and will require a shift in our mindset.

GORAN BRLAS, PRODUCT ARCHITECT & LEAD iOS ENGINEER

Still, Apple didn’t ignore the development experience and made sure everything was intuitive and easy to grasp for existing Apple platform developers.

What types of apps do you expect we’ll be seeing on the Vision Pro?

The use cases will likely include immersive audiovisual environments, gaming, and educational apps. Apple is promoting Vision Pro as a spatial computing device, but in its current iteration, it’s better suited to consuming content than creating it, especially given the somewhat clumsy and slow user input.

I recently saw a great example of this use case. I’m a Formula 1 fan, and this concept of a spatial F1 broadcast stood out as an excellent innovation and a type of experience I’d like to see come to life.

How is the developer community responding to the Vision Pro so far?

The developer community’s interest seems to be rather strong, especially since this is Apple’s first XR offering. The SDKs and APIs are familiar to those who’ve developed for Apple’s platforms before, and a large group of eager early adopters will certainly help build hype around developing for the device.

Who do you think will win the VR battle, Meta or Apple?

This is a tough one. Meta was at the forefront of VR and has accumulated a lead in this domain. Quest has been out for some time, gaining valuable user feedback and improving with each iteration. However, Meta is mostly focused on gaming rather than on user experience and integration with other devices.

Apple went straight for the premium market, relying on being the best in class and the existing ecosystem of compatible apps to kickstart the platform.

We have yet to see whether the vision of a spatial computer will pan out or whether we will be left with an expensive, albeit great, virtual movie theater.

I’m biased, so I’d like to see Apple “win” and deliver on its promises. That being said, competition in any space is always good, and ultimately, the consumers should be the real winners here.

Renato, Android Team Director: “Google is still in the VR game”

Who do you see as the primary target audience for the Vision Pro?

The word “Pro” suggests that Apple is targeting industry professionals. Many imagine people walking the streets with the headset, but such a heavy device with an external battery is clearly not designed for that.

The Vision Pro truly shines in specific workflows when you leverage the power of the Apple ecosystem. While it can be used as a standalone device, it unleashes its full potential when you use it with a Mac, for example.

Mirroring your screens during development, for example to debug issues across multiple screens arranged around you, sounds very refreshing and productive. The same applies to other industries, too, like video and audio editing, Industry 4.0, education, and healthcare.

How well does Apple Vision Pro integrate with Android devices and services?

The fact that you have to have an iPhone to scan your face in order to buy Apple Vision Pro says a lot about the exclusivity of the current setup. So, no luck there for the Android developer community, at least for now.

Android and Apple Vision Pro are not a good match. There seems to be little, if any, practical scenario in which an Android device could be used effectively alongside it.

RENATO TURIĆ, ANDROID TEAM DIRECTOR

Are there any tools, libraries, or resources available for Android developers interested in the visionOS development environment? How accessible are these resources for them?

For native Android developers looking for development opportunities on the Vision Pro, the resources are non-existent. Nonetheless, for those with cross-platform development experience, frameworks such as React Native or Flutter offer a fair chance of developing apps for Apple Vision Pro.

Furthermore, should JetBrains update their Compose Multiplatform framework to support visionOS, that would definitely broaden the development landscape. This is mostly because the development environment for creating visionOS apps would then consist of Kotlin and Compose, something the majority of Android developers are already familiar with. That would be cool.

Where is Google in the XR game? Can we expect it to launch its own product to rival Quest and Vision Pro?

Google has been in the game much longer than most people realize. Just remember the advent of Google Glass in 2013 and Google Daydream in 2016.

Having developed VR/AR apps for Google Glass back in the day, I can say it definitely showed some promise. However, the hardware was just not ready for any serious use case because of battery drain and overheating issues.

Both devices ended up being discontinued, but the hype was real back then.

Recent news suggests that Google, together with Samsung and Qualcomm, is working on a mixed reality platform. With that in mind, and given Google’s presence in the AI world today, it will be an interesting year.

Who do you think will win the VR battle, Meta or Apple?

It’s hard to tell. In my opinion, Meta is a step ahead. Their device is much more affordable and lightweight, and it has many more apps available. They already have a large and diverse user base that includes professionals, casual users, and gamers.

However, Apple is Apple, and they usually know what they are doing. We also shouldn’t forget about Microsoft with their HoloLens and the announced Google/Samsung/Qualcomm collaboration. If they get their strategy right, it’s going to be a very interesting and competitive year.

What’s your brand’s vision for Apple Vision Pro?

While mass adoption might still be some time away, Apple once again introduced an innovation that will leave a mark on the tech landscape.

Apple Vision Pro sets a new standard in extended reality, requiring us to…well, think different about our approach to both design and development. As we wait to see how the other contenders for the XR space will respond, it’s a perfect time to start thinking about the next generation of apps – spatial, immersive, and offering a spectrum of new possibilities. We’d love to help you with that.